New technologies, methodologies and flows will be required to guarantee self-driving cars are safe.

By Kevin Fogarty and Ed Sperling

The race is on to develop ways of testing autonomous vehicles to prove they are safe under most road conditions, but this has turned out to be much more difficult than initially thought.

The autonomous vehicle technology itself is still in various stages of development, with carmakers struggling to fine-tune AI algorithms that can guide robots on wheels through a gauntlet of obstacles and environments. Testing all of that technology, and keeping up with a steady stream of changes, has become a major challenge.

Among the issues:

• Testing needs to be consistent throughout the lifecycle of a component, which means that test vendors need to commit resources to maintaining test equipment for a specific product for a specified period of time. That’s a big investment for technology that is still in the early stages of development.

• Chips in autonomous vehicles will need to last for a decade or more across a wide variety of sometimes harsh environmental conditions. So far, however, there are no fully autonomous vehicles on the road, so predictions of how those chips will perform over time are based entirely on simulations. Testing schemes that combine real-world testing with simulation are still being developed.

• As faster communications standards are rolled out, testing will move from physical testing of components to a combination of physical and over-the-air testing, which will be required to ensure that communication speeds are sufficient to guarantee safety.

“There are a lot of components with fairly long development cycles,” said Derek Floyd, director of business development for V93000 power, analog and microcontroller solutions at Advantest. “For any new design, you need to test the silicon, validate it, ship it to the customer, and then it undergoes 12 to 18 months of field trials before they actually accept the product. This is one of the reasons they don’t change out everything in the vehicle at the same time. It’s phased in using multiple pieces. But you still have to maintain the test equipment and process for 10 years. So you’re dealing with a lot of components with fairly long development cycles.”

This becomes more complex as vehicles become increasingly autonomous.

“You need to validate the technology to get to Level 5,” Floyd said. “You also have to know which vendor is using which product. And for automotive, you need two or three suppliers for every product.”

Test flows themselves have to change, as well, which will create a major disruption in the supply chain.

“Two things are happening in parallel,” said Anil Bhalla, senior manager of marketing and sales at Astronics Test Systems. “First, with auto test flows you take the normal test flows for semiconductors and add temperatures—cold, hot and ambient—and then you add multiple insertions on quality. The result is that you end up with a very complicated and expensive test flow. Separate from that, some auto parts are moving to lower nodes and advanced packaging, and current testing can’t cover all of that. With a 7nm device using ATPG, you’re only getting roughly 85% coverage. That means there are billions of transistors that are not being tested. If those transistors are in a critical path, that’s a big issue.”
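The arithmetic behind Bhalla’s point can be sketched in a few lines. This is a minimal illustration that assumes a hypothetical 10-billion-transistor device. Like the quote, it treats ATPG coverage as a fraction of transistors. Real fault coverage is measured against a fault list, such as stuck-at faults, so the result is only an order-of-magnitude picture.

```python
def untested_count(total_transistors: int, coverage: float) -> float:
    """Estimate how many transistors fall outside test coverage.

    Simplification (as in the quote): coverage is treated as the fraction
    of transistors exercised, not as fault coverage over a fault model.
    """
    if not 0.0 <= coverage <= 1.0:
        raise ValueError("coverage must be between 0 and 1")
    return total_transistors * (1.0 - coverage)


# Hypothetical 7nm device with 10 billion transistors and ~85% coverage.
TRANSISTORS = 10_000_000_000
missed = untested_count(TRANSISTORS, 0.85)
print(f"Roughly {missed / 1e9:.1f} billion transistors untested")
```

Even a few percentage points of missing coverage leaves an enormous absolute number of untested structures at this scale.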

Automotive companies until several years ago were considered late adopters of technology. But as the amount of electronic content in vehicles grows, and automakers make a concerted push toward autonomous driving, that has changed significantly.

“So now you also have to add a flow for system-level test, because you need to get to zero defects,” said Bhalla. “But there is so much momentum here that you’re going to see people start innovating on test. We saw this with the shift from PCs to gaming, and again with mobile. Test flows are definitely part of that.”

New approaches

New technologies such as LiDAR also add some new twists to automotive test.

“The challenge with LiDAR is to measure current and power draw,” said David Hall, chief marketer for semiconductors at National Instruments. “With LiDAR, if you integrate power over a long time, heat dissipation is high, so the solution is to use a pulse approach. But as you get a narrower and narrower pulse width, you also get higher peak power.”

He said the newest versions of LiDAR are in the sub-microsecond range, which means the scan rate has moved from milliseconds to microseconds.
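The trade-off Hall describes follows from two simple relations. For an idealized rectangular pulse, peak power is the pulse energy divided by the pulse width. Average power, which drives heat dissipation, is the pulse energy times the pulse repetition rate. The sketch below uses hypothetical pulse energy and repetition-rate values, not figures from any real LiDAR unit.

```python
def peak_power_w(pulse_energy_j: float, pulse_width_s: float) -> float:
    """Peak optical power of an idealized rectangular pulse."""
    return pulse_energy_j / pulse_width_s


def average_power_w(pulse_energy_j: float, rep_rate_hz: float) -> float:
    """Time-averaged power: energy per pulse times pulses per second."""
    return pulse_energy_j * rep_rate_hz


# Hypothetical numbers for illustration only.
ENERGY_J = 1e-6      # 1 microjoule per pulse
REP_RATE_HZ = 100e3  # 100,000 pulses per second

for width_s in (100e-9, 10e-9, 1e-9):
    print(f"width {width_s * 1e9:5.0f} ns -> "
          f"peak {peak_power_w(ENERGY_J, width_s):7.0f} W, "
          f"average {average_power_w(ENERGY_J, REP_RATE_HZ):.2f} W")
```

With energy per pulse held constant, average power (and heat) stays the same while peak power rises tenfold each time the pulse narrows tenfold. Those peaks are what the test equipment has to capture.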

Much of the technology in autonomous vehicles is still under development, which means there is no way to test reliability by driving millions of miles. But there are questions about the reliability of some of the simulations being performed instead of road testing.

“You can do a lot in simulation before you take that out onto a robot,” said Chris Broaddus, an engineer at GM Cruise. “It may take five minutes versus a half-day in the field. And how are you going to do a regression test in the field? But there is a question about whether if something works in a car, will it work for all cars?”

Will it work on all routes? And what about all kinds of autonomous vehicles? Arne Stoschek, head of autonomous systems at Airbus A3, said one solution may be to sharply limit the routes that autonomous vehicles such as drones can take.

“If you run an autonomous taxi in New York, you have to cover a reasonable area,” Stoschek said. “But that’s an area problem, and it’s a really hard problem. But if you’re using a point-to-point connection, and if it’s just one location in San Francisco or San Jose, that’s much easier. With a point-to-point connection you can choose it for a business case and safety reasons, and you can completely model and simulate that and own it. With any congested area—mega cities with more than 10 million inhabitants—the question is how you can scale that and generate customer acceptance.”

The same principles apply in drones and in cars. While real-world testing is still the gold standard, simulations are much quicker, and they’re becoming much easier to implement. Nvidia, for example, has introduced a cloud-based simulation service called DRIVE Constellation, which includes a module to simulate a car’s sensors and additional technology to run the software stack operating the car. This hardware-in-the-loop platform cycles 30 times per second to verify that the simulated sensor data and the software stack work together correctly, using realistic data to produce a reliable expectation of how well the vehicle would operate on the road.
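A closed simulation loop of this kind can be sketched as a fixed-step cycle at the 30 cycles per second cited above. In each frame, a sensor model produces a reading, the driving software turns it into a command, and a vehicle model applies that command before the next frame. Everything here is hypothetical: the sensor model, the toy braking rule, and the vehicle dynamics. None of it is Nvidia’s API. The loop also advances simulated time rather than wall-clock time, whereas a real hardware-in-the-loop rig runs the stack on target hardware in real time.

```python
from dataclasses import dataclass

FRAME_RATE_HZ = 30          # cycles per second, as cited above
DT = 1.0 / FRAME_RATE_HZ    # simulated seconds per frame


@dataclass
class VehicleState:
    position_m: float = 0.0
    speed_mps: float = 0.0


def simulate_sensors(state: VehicleState, obstacle_m: float) -> float:
    """Hypothetical sensor model: returns range to an obstacle ahead."""
    return obstacle_m - state.position_m


def driving_stack(range_m: float, speed_mps: float) -> float:
    """Stand-in for the software under test: returns an acceleration command."""
    if range_m < 2.0 * speed_mps + 5.0:   # crude two-second-gap rule
        return -4.0                       # brake
    return 1.0 if speed_mps < 15.0 else 0.0


def step_vehicle(state: VehicleState, accel: float) -> VehicleState:
    """Advance a simple point-mass vehicle model by one frame."""
    speed = max(0.0, state.speed_mps + accel * DT)
    return VehicleState(state.position_m + speed * DT, speed)


def run(seconds: float, obstacle_m: float) -> tuple[VehicleState, int]:
    state, frames = VehicleState(), 0
    for _ in range(round(seconds * FRAME_RATE_HZ)):
        reading = simulate_sensors(state, obstacle_m)   # sensor simulation
        accel = driving_stack(reading, state.speed_mps)  # stack under test
        state = step_vehicle(state, accel)               # close the loop
        frames += 1
    return state, frames


final, frames = run(seconds=20.0, obstacle_m=100.0)
print(frames, round(final.position_m, 1), round(final.speed_mps, 2))
```

Because every frame is deterministic, the same run can be replayed as a regression test after each software change. That is much harder to do on a real road.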

ANSYS, Siemens, Mobileye and startups such as Metamoto have introduced or updated similar services, most of which emphasize testing the function and integration of the system itself. Whether that can actually determine if autonomous vehicles are safe for use on the road is unknown.

Simulation and validation systems are very good at spotting flaws in chipsets or hardware conflicts. They are also very good at reducing the number of real-world road-test miles needed to confirm that all the sensors and control systems in an autonomous vehicle (AV) work together effectively, said Philip Koopman, associate professor of electrical and computer engineering at Carnegie Mellon University, who publishes frequently about his research into safety testing and validation of autonomous vehicles.

This generally complies with U.S. safety rules, which focus primarily on the mechanical operation of the vehicle’s equipment and how it will respond in a crash. But the Federal Motor Vehicle Safety Standards (FMVSS), and standards such as ISO 26262, which covers the safety of electronic systems in vehicles, don’t assume the equipment will have any control over the vehicle’s behavior or over preventing a crash, Koopman said.

The Department of Transportation is following the lead of car manufacturers on regulations covering autonomous vehicles. So far, however, the manufacturers mainly want exemptions from existing rules that might not apply to autonomous vehicles, such as the requirement for a steering wheel. The U.S. needs to advance its regulations to cover autonomy in ways that ensure autonomous vehicles can operate safely, not just that the machinery in them will operate correctly when it’s turned on, Koopman said.

Autonomous vehicles should be required to pass a test that would provide an accurate picture of how safely they would operate on the road, according to a recent publication by Srikanth Saripalli, a computer scientist and associate professor of mechanical engineering at Texas A&M University. His research includes development of autonomous vehicles and safety-critical functions such as using LiDAR to detect road signs. Repeatedly comparing how well humans and autonomous vehicles handle the same simulated scenarios would show how the road judgment of autonomous vehicles stacks up against that of humans, Saripalli wrote.
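One way to summarize such a paired comparison is an exact sign test. For each scenario, it records whether the AV or the human did better, drops ties, and asks how likely a split that lopsided would be if the two were equally good. The sketch below assumes a per-scenario score where higher means safer handling. The scores are made up for illustration, and the sign test is our choice of method, not necessarily what Saripalli proposes.

```python
from math import comb


def sign_test_p_value(av_scores, human_scores) -> float:
    """Two-sided exact sign test on paired per-scenario scores.

    Higher score = better handling of the scenario. Ties are dropped,
    which is the usual convention for the sign test.
    """
    wins = sum(a > h for a, h in zip(av_scores, human_scores))
    losses = sum(a < h for a, h in zip(av_scores, human_scores))
    n = wins + losses
    if n == 0:
        return 1.0
    k = max(wins, losses)
    # Probability of a split at least this lopsided if AV and humans were equal.
    tail = sum(comb(n, i) for i in range(k, n + 1)) / 2 ** n
    return min(1.0, 2 * tail)


# Hypothetical scores for the same 11 simulated scenarios.
av = [0.9, 0.8, 0.95, 0.7, 0.85, 0.6, 0.9, 0.88, 0.92, 0.75, 0.5]
human = [0.7, 0.6, 0.9, 0.65, 0.8, 0.7, 0.85, 0.8, 0.9, 0.7, 0.5]
p = sign_test_p_value(av, human)
print(f"p = {p:.4f}")
```

Here the AV wins 9 scenarios, loses 1 and ties 1. A small p-value only says the AV did better on these scenarios. It says nothing about scenarios that were never simulated.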

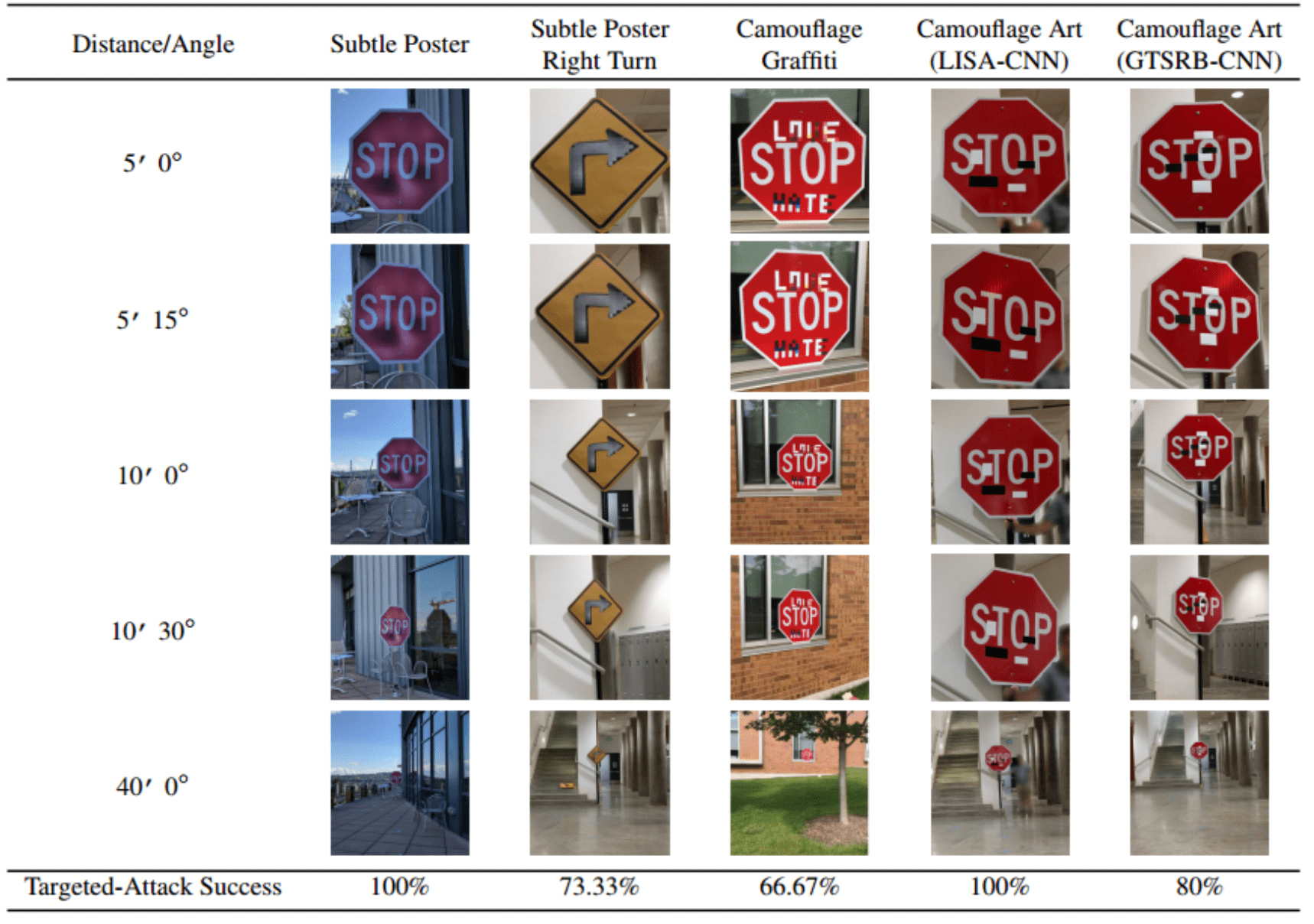

It is hard to prove a negative, so even many repetitions of comparative tests will only show, with increasing probability, how an autonomous vehicle will perform. They cannot provide 100% certainty, according to Atul Prakash, professor of electrical engineering and computer science at the University of Michigan. His research showed how vulnerable autonomous vehicles are to sabotage, even something as simple as adding graffiti that keeps an AV from recognizing a stop sign.

“If something doesn’t pass a test that’s clearly a problem, but if it does pass, you still don’t know for sure,” Prakash said. “It’s not that the data isn’t useful, but there are a lot of questions, like how does it handle failure situations from a dirty sensor or one that isn’t working properly, software updates – things that are routine but can still be a challenge.”

Fig. 1: Sample of physical adversarial examples against LISA-CNN and GTSRB-CNN. Source: Robust Physical-World Attacks On Deep Learning Visual Classification, by researchers at University of Michigan; University of Washington; University of California, Berkeley; Samsung Research America; Stony Brook University.

Street signs are easy targets, and tampering with them could have a big impact on unsuspecting AVs that rely on them to negotiate traffic.
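The core idea behind such attacks can be shown on a toy model. A small, deliberately chosen change to the input is pushed in the direction that most increases the classifier’s error. The sketch below applies the fast gradient sign method (FGSM) to a hypothetical three-“pixel” linear classifier. It is a generic textbook attack, not the physical-world method used in the cited paper, and the weights and inputs are invented for illustration.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def score(w, b, x) -> float:
    """Logit of a toy linear 'stop sign' classifier (positive = stop sign)."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def sign(g: float) -> int:
    return (g > 0) - (g < 0)


def fgsm(w, b, x, y_true: int, eps: float):
    """Fast gradient sign method for logistic loss on a linear model.

    The gradient of the cross-entropy loss w.r.t. the input is
    (sigmoid(score) - y_true) * w; FGSM steps eps along its sign,
    then clips pixels back into the valid [0, 1] range.
    """
    p = sigmoid(score(w, b, x))
    grad = [(p - y_true) * wi for wi in w]
    return [min(1.0, max(0.0, xi + eps * sign(gi))) for xi, gi in zip(x, grad)]


# Hypothetical weights and input, for illustration only.
w, b = [0.5, -0.3, 0.8], -0.2
x = [0.6, 0.2, 0.5]
x_adv = fgsm(w, b, x, y_true=1, eps=0.3)
print(score(w, b, x) > 0, score(w, b, x_adv) > 0)  # prints: True False
```

No pixel moves by more than 0.3, yet the classifier’s decision flips. Physical attacks on signs exploit the same sensitivity in far larger networks.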

The only way to make AVs safe is to spell out, in regulatory terms, the limitations and requirements that define safe AV operation, and then test AV behavior against that standard, Koopman said.

“Unfortunately, regulators are flying blind,” said Roger Lanctot, director of the automotive connected mobility practice at Strategy Analytics. “They don’t have a point of reference to evaluate these systems. There is some debate about how far you would have to drive a car to generate the algorithmic insight for something that would be certifiable to .999 levels of confidence, and no certification authority is capable of doing that today.”

German testing and certification organization TÜV SÜD is trying to set some guidelines. But it is unclear what specifically it is certifying, and how that relates to the scattered positions of regulatory bodies in the rest of the European Union and the United States, Lanctot said.

One major effort to create performance standards is the draft ISO 21448 standard, known as Safety of the Intended Functionality (SOTIF). It addresses whether electronic systems contribute to the safe operation of a vehicle, rather than whether the electrical system itself operates safely, as existing standards do. Even if it is accepted, however, that set of requirements would cover only some aspects of advanced driver assist systems (ADAS), not full autonomy, Koopman said.

Generic testing without guidelines may raise the probability that an AV is safe, but it doesn’t establish rules that set specific priorities about things an AV should never do. Empirical tests can make that behavior less likely, but they can’t rule it out entirely.
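Explicit “never do” rules can at least be checked mechanically against every logged or simulated run. The sketch below expresses two such rules as predicates over a trace of vehicle states and reports every violation. The rules, thresholds, and `Frame` fields are hypothetical. A clean result only shows that this particular trace obeyed the rules, which is exactly the limitation described above.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Frame:
    t_s: float
    speed_mps: float
    speed_limit_mps: float
    gap_m: float          # distance to the vehicle ahead


# Each rule names something the vehicle must never do (hypothetical thresholds).
Rule = tuple[str, Callable[[Frame], bool]]
NEVER_RULES: list[Rule] = [
    ("exceed speed limit", lambda f: f.speed_mps > f.speed_limit_mps),
    ("gap under 1 s of travel", lambda f: f.gap_m < 1.0 * f.speed_mps),
]


def check_trace(trace: list[Frame],
                rules: list[Rule] = NEVER_RULES) -> list[tuple[float, str]]:
    """Return (time, rule name) for every frame that violates a never-do rule."""
    return [(f.t_s, name) for f in trace for name, violated in rules if violated(f)]


# Hypothetical three-frame trace from one simulated run.
trace = [
    Frame(0.0, 12.0, 13.4, 30.0),
    Frame(0.5, 14.0, 13.4, 20.0),   # over the limit
    Frame(1.0, 13.0, 13.4, 10.0),   # following too closely
]
for t, rule in check_trace(trace):
    print(f"t={t:.1f}s violated: {rule}")
```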

“Simulations that create geometries the car will have to respond to focus on just that instance,” Koopman said. “Even if you could test every possible scenario, it wouldn’t guarantee the software won’t try to kill you on a random Tuesday when it’s raining and there’s a solar eclipse. That wasn’t the purpose of the test.”

Fig. 2: Number of test scenarios required for validation and verification cycles in autonomous vehicles. Source: Mentor, a Siemens Business.

Because it is hard to see why a machine-learning application does what it does, troubleshooting and prediction become more difficult, according to Norman Chang, CTO of ANSYS. “The nature of a deep neural network is that it is like a black box; it’s not easy to see what’s going on inside if you need to fix anything,” Chang said.

There should be a more definitive guideline, but tech providers have no choice but to go with the most prevalent solution, which currently is to simulate the road miles and generate enough content to test AVs from every conceivable angle.
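Testing “from every conceivable angle” runs into combinatorics quickly. Each independent scenario parameter multiplies the number of cases. The sketch below builds a full parameter sweep with `itertools.product`. The parameters and their values are hypothetical and far coarser than a real scenario catalog.

```python
from itertools import product
from math import prod

# Hypothetical scenario parameters; a real catalog would have many more.
PARAMETERS = {
    "weather": ["clear", "rain", "fog", "snow"],
    "time_of_day": ["day", "dusk", "night"],
    "road": ["highway", "urban", "rural", "intersection", "parking_lot"],
    "pedestrians": [0, 1, 2, 5, 10],
    "ego_speed_kph": [10, 30, 50, 70, 90, 110],
}


def scenario_count(params: dict) -> int:
    """Size of the full grid: the product of each parameter's option count."""
    return prod(len(v) for v in params.values())


def scenarios(params: dict):
    """Yield every combination as a dict, lazily, since the grid grows fast."""
    keys = list(params)
    for values in product(*params.values()):
        yield dict(zip(keys, values))


print(scenario_count(PARAMETERS))   # 4 * 3 * 5 * 5 * 6
print(next(scenarios(PARAMETERS)))
```

Five coarse parameters already give 1,800 combinations. Adding one more parameter with 10 options makes it 18,000, before any variation in timing, geometry or sensor noise.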

There is enough time before Level 4 or Level 5 autonomous vehicles hit the road to develop standards and ways to test them, however, and simulation testing does provide more data that can help refine the way machine-learning software in AVs makes decisions, Chang said.

“In 10 years if you are in a car and no one is driving, you won’t even think about it,” Chang said. “Right now simulation is pushing the envelope of our capability.”

Miles to go before I beep

The assumption that autonomous vehicles need millions of miles of real or virtual testing became common wisdom after a 2016 Rand Corp. study delineated and quantified it. The study concluded that autonomous vehicles would have to be driven millions or even billions of miles to demonstrate basic safety to consumers, who won’t trust an autonomous vehicle to drive without clear evidence that it is at least 10 times less likely than an average human driver to be involved in a fatal crash.
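The scale of those numbers follows from a simple binomial argument. Treat each mile as an independent trial with fatality probability p. Then driving n miles with no fatalities rules out a true rate of p or higher, at confidence c, once (1 − p)^n ≤ 1 − c. The benchmark below, about 1.09 fatalities per 100 million miles, is an assumed input roughly matching U.S. human-driver rates of that period. The whole calculation is a sketch of this style of reasoning, not a reproduction of the report’s full method.

```python
import math


def failure_free_miles(rate_per_mile: float, confidence: float = 0.95) -> float:
    """Miles that must be driven with zero fatal crashes to claim, at the
    given confidence, that the true rate is below rate_per_mile.

    Solves (1 - rate)**n <= 1 - confidence for n (each mile treated as an
    independent trial); log1p keeps precision for tiny rates.
    """
    return math.log(1.0 - confidence) / math.log1p(-rate_per_mile)


# Assumed benchmark: ~1.09 fatalities per 100 million miles for human drivers.
HUMAN_RATE = 1.09e-8
print(f"{failure_free_miles(HUMAN_RATE) / 1e6:,.0f} million miles")       # match humans
print(f"{failure_free_miles(HUMAN_RATE / 10) / 1e9:,.2f} billion miles")  # 10x safer
```

Because −ln(0.05) ≈ 3, the answer is roughly 3 divided by the target rate. Matching humans takes hundreds of millions of fatality-free miles, and demonstrating a tenfold improvement takes billions.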

To make that practical, the Rand report concluded, technology providers would have to come up with innovative methods to accumulate the equivalent of millions of miles without spending the time or resources to actually drive them. That is where simulation testing came in.

“There are no simulations, modeling or other methods of proving safety, so it’s no surprise that federal guidelines have not answered this difficult question,” reads a follow-up to the original Rand report. It suggested the expectation that autonomous vehicles could reduce traffic fatalities by up to 90% might be overblown, but that the potential reduction in highway fatalities possible with assisted driving should be enough incentive to move forward, anyway.

“Even if autonomous fleets are driven 10 million miles, one still would not be able to draw statistical conclusions about safety and reliability,” said Susan M. Paddock, co-author of the study and Rand’s senior statistician.
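Paddock’s point can be checked by running the zero-failure binomial argument the other way around. After a fleet drives N miles with no fatalities, the one-sided upper confidence bound on the per-mile fatality rate is the p that solves (1 − p)^N = 1 − c. The sketch below computes that bound for 10 million miles. The comparison figure of about 1.09 fatalities per 100 million miles for human drivers is an assumed benchmark, not a number from the study quoted here.

```python
import math


def rate_upper_bound(miles: float, confidence: float = 0.95) -> float:
    """One-sided upper confidence bound on the per-mile fatality rate
    after `miles` failure-free miles (exact binomial, zero events)."""
    # Solve (1 - p)**miles = 1 - confidence for p; expm1 keeps precision.
    return -math.expm1(math.log(1.0 - confidence) / miles)


bound = rate_upper_bound(10e6)
print(f"{bound * 1e8:.0f} fatalities per 100 million miles")  # vs ~1.09 assumed for humans
```

Even a perfect 10-million-mile record cannot rule out a fatality rate around 30 per 100 million miles, far worse than the assumed human benchmark. So the data cannot show that the fleet is as safe as human drivers, let alone safer.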

In a January poll of self-identified connected-car drivers, 57% said they would not buy a self-driving car even if cost were not an issue, according to data-mobility provider Solace, which conducted the online survey of 1,500 people. Of the respondents, 49% said they are likely to rely on safety sensors like lane monitors/warnings or blind-spot detectors; 35% said navigational driving prompts are the audio prompt they’d be most likely to trust. But 40% said they wouldn’t trust a car to automatically respond to traffic with automatic brakes or other driver assists.

The evolution toward real autonomy will be slow and filled with gradually more-capable versions of driver assist systems that should make driving safer and build consumer trust through familiarity, said ANSYS’ Chang.

That could take years.

“It boils down to the metric that says vehicle accidents and deaths have to drop by a factor of 10,” said Anush Mohandass, vice president of business development for NetSpeed Systems. “But if we went from 40,000 casualties to 10,000 in autonomous vehicles, there would be an outcry. I would need to see hard data on road tests to know it works, and in my environment, because conditions in one place vary wildly from conditions in another. But this is where rationality and irrationality come in. My rational brain tells me virtual or simulated testing is just as good as the real thing. But would I ever put my kid in a car we had tested only in a virtual space? No.”

