But working with this architecture has some not-so-obvious pitfalls, and new licensing options for design tools may be necessary.

The RISC-V instruction-set architecture, which started as a UC Berkeley project to improve energy efficiency, is gaining steam across the industry.

The RISC-V Foundation’s member roster gives an indication of who is behind this effort. Members include Google, Nvidia, Qualcomm, Rambus, Samsung, NXP, Micron, IBM, GlobalFoundries, UltraSoC, and Siemens, among many others.

One of the key markets for this technology is storage controllers, where multiple companies are active, said Krste Asanovic, co-founder and chief architect at SiFive, and chairman of the RISC-V Foundation. He described these designs as integrating the controller with memory, along with a PCIe slave that plugs into the back of a server, to provide very high-performance flash storage.

Another active area is vector extensions for AI/machine learning. Asanovic has been leading this effort at the RISC-V Foundation, and SiFive is building cores that support vectors for AI/machine learning.

“A lot of companies are interested in this space,” he said. “There’s a lot of dedicated hard-wired accelerators for the core parts of AI, but the problem with the hard-wired blocks is that the algorithms are changing incredibly fast in this space. It’s a very dynamic area. People would like something that is very efficient but also programmable, so the kinds of use cases we’re seeing are either that a company already has a hard-wired block and they want to add something that is complementary to handle the big part that the hard-wired block doesn’t handle, or they want to do the whole thing with vectors. We’re working on advanced vector extensions that hopefully will be pretty close to the dedicated functional units, but have the flexibility to attack a lot of different algorithms in this space.”
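The programmability argument rests on how vector code walks through arrays of arbitrary length. As a hedged illustration, not SiFive's or the Foundation's code, the sketch below models the strip-mining pattern typical of RISC-V vector loops: on each pass the hardware grants a vector length no larger than an implementation maximum, and the loop advances by that amount. The `VLMAX` of 8, the `set_vl` helper, and the ReLU workload are all assumptions chosen for the example.

```python
from typing import List

VLMAX = 8  # hypothetical hardware vector length, in elements


def set_vl(remaining: int, vlmax: int = VLMAX) -> int:
    """Model a vsetvl-style request: grant at most vlmax elements.

    min() is one legal policy; the vector spec also allows other
    choices when remaining exceeds vlmax but is less than 2 * vlmax.
    """
    return min(remaining, vlmax)


def relu_stripmined(xs: List[float], vlmax: int = VLMAX) -> List[float]:
    """Apply ReLU to xs in chunks sized by set_vl (strip-mining)."""
    out: List[float] = []
    i = 0
    while i < len(xs):
        vl = set_vl(len(xs) - i, vlmax)  # elements granted this pass
        chunk = xs[i:i + vl]  # stands in for a vector load
        out.extend(max(0.0, x) for x in chunk)  # one "vector" ReLU
        i += vl  # advance by the granted length
    return out


data = [-1.0, 2.0, -3.0, 4.0] * 5  # 20 elements: passes of 8, 8, 4
print(relu_stripmined(data)[:4])  # [0.0, 2.0, 0.0, 4.0]
```

Because the loop never hard-codes the hardware width, the same code runs unchanged on cores with different maximum vector lengths, which is part of the flexibility Asanovic describes.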

Asanovic sees AI/machine learning as one big injection point for RISC-V, particularly because there’s no incumbent in this space providing soft cores. “The vector extensions that we’re defining are significantly more advanced than the other ISAs. That’s one thing that’s leading people into RISC-V.”

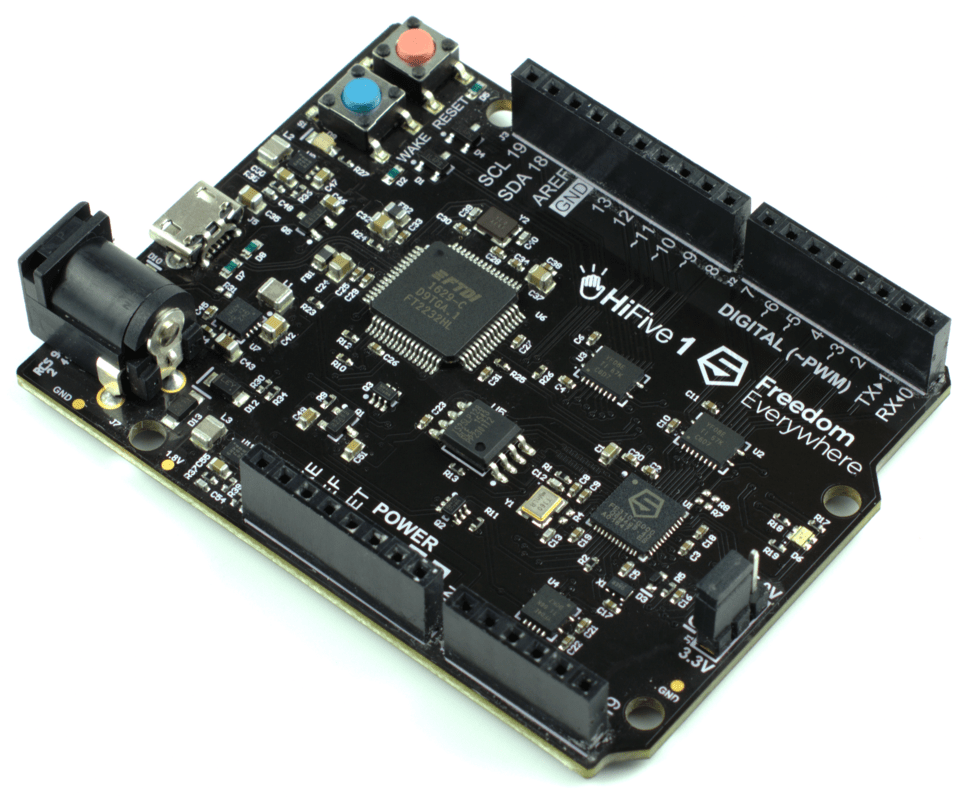

Fig. 1: SiFive’s HiFive1 developer kit. Source: SiFive

A third area with a lot of activity involves minion, aka management, cores. “These days most SoCs need a 64-bit address space because they have very large DRAM attached memory, and customers are looking for embedded controllers that can do housekeeping chores on large SoCs,” he explained. “There are often dozens of these cores on big SoCs, but they need to have a 64-bit address space and be compact. There really isn’t anything out there in that space, so the 64-bit embedded space is a place where SiFive [is focused]. In this area, Nvidia has announced publicly that they are designing their own cores, but that’s their use case, and they are switching to RISC-V for these microcontrollers.”

Dave Pursley, product management director in the Digital & Signoff Group at Cadence, sees similar proliferation of the RISC-V architecture. “It’s showing up all over the place, spottily, meaning all over the market,” he said. “A lot of smaller companies have come to talk about RISC-V, but there are pockets in some of the larger companies also. It’s not the only solution out there, and they all have pluses and minuses.”

The openness of the RISC-V specification has prompted the development of a plethora of open-processor designs. These processors fit a wide range of applications, from heavy-duty Linux servers with the Berkeley Out-of-Order Machine (BOOM) CPU, to tiny embedded microcontrollers with the 32-bit PicoRV32, said Allen Baker, lead software developer for ANSYS’ Semiconductor Business Unit.

“Several implementations have been successfully taped out and are in active operation,” Baker said. “From an EDA developer’s perspective, open RISC-V designs provide valuable insight into how modern designs are structured, and they serve as flexible test cases for tools. One trend that already has been observed is the ability to easily configure designs with high-level parameters (such as core count, cache sizes) and extend them with custom RTL blocks. This, combined with GNU-toolchain-generated RISC-V software providing a practically endless source of activity vectors, makes design analysis and optimization much more accessible and affordable.”
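Baker's point about high-level parameters can be sketched as a small generator-style configuration object. The names (`CoreConfig`, `l1d_kib`, and so on) are hypothetical and not taken from BOOM, PicoRV32 or any Chisel generator; the code only shows how a few top-level knobs determine derived structural values, such as cache set counts and address-bit splits, that an RTL generator would then elaborate.

```python
from dataclasses import dataclass


def _log2_exact(n: int) -> int:
    """Return log2(n), requiring n to be a positive power of two."""
    if n <= 0 or n & (n - 1):
        raise ValueError(f"{n} is not a power of two")
    return n.bit_length() - 1


@dataclass(frozen=True)
class CoreConfig:
    """Hypothetical top-level knobs for a generated multicore design."""
    core_count: int = 4
    l1d_kib: int = 32    # L1 data cache capacity per core, KiB
    l1d_ways: int = 8    # set associativity
    line_bytes: int = 64

    @property
    def l1d_sets(self) -> int:
        # sets = capacity / (ways * line size)
        return (self.l1d_kib * 1024) // (self.l1d_ways * self.line_bytes)

    @property
    def offset_bits(self) -> int:
        # address bits that select a byte within a cache line
        return _log2_exact(self.line_bytes)

    @property
    def index_bits(self) -> int:
        # address bits that select a set; rejects non-power-of-two geometry
        return _log2_exact(self.l1d_sets)

    def total_l1d_kib(self) -> int:
        return self.core_count * self.l1d_kib


cfg = CoreConfig()
print(cfg.l1d_sets, cfg.offset_bits, cfg.index_bits)  # 64 6 6
```

Changing one field, for example `CoreConfig(core_count=1, l1d_kib=16, l1d_ways=2)`, regenerates every derived value consistently, which is what makes such designs convenient test cases for tools.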

Implementation uses vary as well, Cadence’s Pursley said. “There are people that just want to use the ISA in one of the many vanilla flavors of it, and you can do that using IP from many sources, including open source. The companies that are pushing things a bit further really like the idea that it’s extensible and customizable. You can prune instructions out, you can add instructions in. The ones that are pushing the envelope tend to be the smaller startups, where they are looking at a fast, easy way to get to a customized instruction set for an application like machine learning. What they want to do is basically strip out everything they can, but at the same time add a few things in, possibly activation functions, or depending on the end application, maybe crypto functions, tensor manipulations (array and data space manipulations). Those are all things they are looking to add in, depending on the exact applications. That’s one of the neat things about this RISC-V architecture. It allows you to take things out and add things in, while still having the ability to use the existing RISC-V toolchain.”
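Adding an instruction such as an activation function usually means claiming an encoding in the opcode space the base ISA reserves for custom extensions. The sketch below packs the standard R-type fields into the custom-0 major opcode (`0b0001011`). The `relu` mnemonic and the register and `funct3`/`funct7` choices are assumptions for illustration, not a published extension.

```python
CUSTOM_0 = 0b0001011  # major opcode the base ISA reserves for custom use

# (field name, bit position of the field's LSB, width in bits) for R-type
R_FIELDS = (
    ("opcode", 0, 7),
    ("rd", 7, 5),
    ("funct3", 12, 3),
    ("rs1", 15, 5),
    ("rs2", 20, 5),
    ("funct7", 25, 7),
)


def encode_r_type(**fields: int) -> int:
    """Pack R-type fields into a 32-bit instruction word."""
    word = 0
    for name, lsb, width in R_FIELDS:
        value = fields[name]
        if not 0 <= value < (1 << width):
            raise ValueError(f"{name}={value} does not fit in {width} bits")
        word |= value << lsb
    return word


def decode_r_type(word: int) -> dict:
    """Unpack a 32-bit word into its R-type fields."""
    return {name: (word >> lsb) & ((1 << width) - 1)
            for name, lsb, width in R_FIELDS}


# Hypothetical "relu a0, a1": rd = x10, rs1 = x11, rs2 unused (x0).
relu = encode_r_type(opcode=CUSTOM_0, rd=10, funct3=0, rs1=11, rs2=0, funct7=0)
print(hex(relu))  # 0x5850b
```

A stock compiler will not emit such an encoding on its own. Hand-written assembly can produce it with the GNU assembler's `.insn` directive, while having the compiler use it automatically requires further toolchain work.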

Asanovic pointed out there isn’t anything specific needed in design tools to implement RISC-V. “One advantage we have is we generate our Verilog from Chisel, so our Verilog is very clean and very easily ingested by the tools. We don’t have funky issues where you have to figure out what the Verilog means. We generate very clean, basic Verilog that works very well with the vendor tools, so we don’t rely on fancy new Verilog features being correct in the tools, for example. That’s one benefit we have with generating the Verilog from our processor generators. In both the back-end flow and the emulation flow, we’ve found customers are surprised how quickly the Verilog comes up because we generate this clean Verilog. The good news is that it can pretty much be used like any other soft core.”

License to innovate

Rupert Baines, CEO of UltraSoC, said one of the attractions of RISC-V is that companies can optimize core designs for their specific needs. “In effect, everyone who wants one has an architecture license.”

And while some commercial core vendors are focusing on low power, there are also SoC companies optimizing things very tightly for their specific application. For example, Codasip gave a presentation last year about how optimized instructions could dramatically reduce power, which is a very obvious path for SoC companies to go down.

Tensilica and others have long made this case for DSPs. The difference here is that RISC-V is based on an industry-standard ISA and ecosystem, as opposed to a custom design of a proprietary architecture, Baines said.

EDA vendors and large chip companies have maintained for years that the biggest impact on power comes at the architectural level. In effect, power needs to be considered up front in a design, when it’s still easy to make changes.

“The attraction with RISC-V is that you can change the instruction set, you can change the implementation, you can go and work with different vendors,” said Neil Hand, director of marketing for the Design Verification Technology Division at Mentor, a Siemens Business. “But when you do any of that, you have to make sure that it’s compliant. You’ve got to make sure the things actually still work, so the vast majority of our focus around RISC-V has been on that verification and validation.”

For a few years now, groups within Mentor and other EDA providers have fostered new or existing relationships with RISC-V vendors and looked at building validation environments together. “That’s really the single biggest challenge when you look at optimizing a RISC-V design for low power, or any design really, even if it’s high performance,” said Hand. “You’ve got to make sure that thing still works, and that’s not easy.”

One obvious question is how a RISC-V design and verification flow may look different from, say, an Arm-based flow. Here, the biggest challenge is knowing what to check, and understanding how to grade the design, Hand said. “If you think of an Arm design, you take an off-the-shelf Arm design, you can be pretty certain you don’t have to worry about what’s inside the black box. If you take a standard RISC-V IP, if you’re choosing a good IP vendor, you don’t have to know what’s going on inside the black box. Where RISC-V differs is you could go to an IP vendor that has a permissive license that lets them change what’s inside the box to add new instructions or change things. Then you do have to know what’s going on inside the box. You do have to verify that, and there’s all sorts of really interesting techniques you can apply. Most are just built on the well-proven standards for verification, but it’s having that expertise and knowing what has been done. Any time there is a verification challenge, there’s an opportunity, and the interesting thing with RISC-V is there’s a huge amount of risk involved in changing a processor design. Then the question becomes, how do you then address that risk? On the low-power side of things, when you can change something and get an order of magnitude or more performance improvement in your design, it’s compelling.”
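One widely used technique for checking a modified core is differential, or lockstep, comparison: run the same program on a trusted reference model and on the modified design, and compare the architectural results each instruction retires. The sketch below assumes both sides already produce a list of retirement records (program counter, destination register, value written); the `Retire` record and `first_mismatch` function are hypothetical, and real flows typically use an instruction-set simulator as the golden model.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class Retire:
    """One retired instruction: PC, destination register, value written."""
    pc: int
    rd: int
    value: int


Mismatch = Tuple[int, Optional[Retire], Optional[Retire]]


def first_mismatch(golden: List[Retire], dut: List[Retire]) -> Optional[Mismatch]:
    """Return (index, expected, actual) at the first divergence, or None.

    If one trace is shorter, the missing record shows up as None.
    """
    for i in range(max(len(golden), len(dut))):
        exp = golden[i] if i < len(golden) else None
        act = dut[i] if i < len(dut) else None
        if exp != act:
            return i, exp, act
    return None


golden = [Retire(0x8000_0000, 10, 5), Retire(0x8000_0004, 11, 7)]
dut = [Retire(0x8000_0000, 10, 5), Retire(0x8000_0004, 11, 8)]
print(first_mismatch(golden, dut))  # divergence reported at index 1
```

Reporting the first divergence, rather than a pass/fail flag, points the engineer at the exact instruction where a customized core stopped matching the reference.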

For instance, he recalled Microsemi at DAC 2017 showed how its engineering team made some minor tweaks to an audio processor for an IoT design. “It got them a 63X performance improvement, which actually meant they dropped down to an older process node, ran at slower speed, and got a huge power benefit. Once they did that, they of course had to verify that the instruction set was all still the same, that it all still worked and it ran the software.”
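The chain from a performance gain to a power gain can be made concrete with the first-order dynamic-power relation P = αCV²f. The sketch below holds activity, capacitance and supply voltage fixed, which ignores the node change Microsemi actually made, so it isolates only the frequency term: if a task needs 63 times fewer cycles, the clock can drop by the same factor at equal throughput. The baseline cycle count, task rate and electrical values are invented for illustration and are not Microsemi's data.

```python
def required_freq_hz(cycles_per_task: float, tasks_per_s: float) -> float:
    """Clock needed to finish tasks_per_s tasks each second."""
    return cycles_per_task * tasks_per_s


def dynamic_power_w(activity: float, cap_f: float, vdd_v: float,
                    freq_hz: float) -> float:
    """First-order CMOS dynamic power: P = alpha * C * V^2 * f."""
    return activity * cap_f * vdd_v ** 2 * freq_hz


# Illustrative numbers only.
BASE_CYCLES = 6_300_000  # hypothetical cycles per audio task before tuning
SPEEDUP = 63             # improvement cited in the article
RATE = 100               # hypothetical tasks per second

f_before = required_freq_hz(BASE_CYCLES, RATE)
f_after = required_freq_hz(BASE_CYCLES / SPEEDUP, RATE)
p_before = dynamic_power_w(0.1, 1e-9, 1.2, f_before)
p_after = dynamic_power_w(0.1, 1e-9, 1.2, f_after)
print(f_before / 1e6, f_after / 1e6)  # 630.0 10.0 (MHz)
print(round(p_before / p_after))      # 63
```

In practice, moving to an older node changes C, V and leakage as well, so the real saving differs from this frequency-only estimate.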

Fig. 2: Microsemi’s IGLOO2 FPGA architecture with RISC-V core. Source: Microsemi

Still, Hand asserted that it’s early days for this architecture. “All of the work we’ve done with verification of RISC-V is all being done either through IP partners or just through standard methodologies and standard environments. But the really compelling thing is, what can we do to make these things turnkey? When Mentor joined the RISC-V Foundation, as did Siemens, a big part of it was to understand how we could add value to the ecosystem. We want to get to the point where once compliance is well-defined, then we can start to provide verification environments off the shelf that work for those. As soon as you can do that, as soon as you can take the risk out of customizing these cores – and you’re going to remove the risk by verification – then you can see more designs adopting the technology.”

Truly enabling the kinds of designs anticipated from startups will require tackling some of these challenges.

“I’ve had this conversation both from the implementation side and the verification side,” Hand said. “It is enabling a new type of startup, and it’s enabling companies that can do something fairly innovative. You can build an IoT device using either an open-source core or a low-cost provider. Now if that person then comes to an EDA vendor, and we say, ‘That’s all well and good. Now you’ve got to give us $3 million for software,’ they’ll say, ‘That’s too much.’ Part of the answer is making the tools available to this new class of designers, who are working on relatively small budgets and trying to bring innovative products to market, which they can do because the licensing costs are lower. The ability to modify the core means that you can now start to do some really cool things even on legacy nodes, which are frankly a lot cheaper than the bleeding-edge nodes. It’s lower risk, and you can now start to see how we as an EDA company can be equally innovative in how we give people access to the tools they need to actually bring these products to market.”

Conclusion

While it’s simple to view RISC-V in terms of what is already available in the market today, the architecture opens up new options in markets that are either still immature, or which are just starting to come into focus. The flexibility in the design adds the ability to be innovative in those segments. But it also can add uncertainty and new challenges, because one implementation of the architecture can be significantly different from another, to the point where IP developed for one version may not work the same for another.

Still, RISC-V is rooted enough that established companies are backing it today as one more choice for inclusion in systems. And while it seems unlikely that it will displace other companies or processor designs, it seems to have plenty of momentum and room for growth on its own and in conjunction with other vendors’ processor cores. But to really live up to its potential, new licensing models may be required for the design tools.

The notion of adding/removing instructions is absurd in the general case.

“Backward compatibility” was practiced for decades because of legacy code, and that is exactly the IP/verification situation, because there is no way of knowing every place an instruction has been used, or whether it was used at all.

Adding an instruction only makes sense if GCC will use it (unless assembly-level programming is going to be the “new” way).

John Cocke won the Turing Award for inventing RISC, which he pursued because compilers were not able to handle complex instructions.

This road has been traveled before.

I really want to try out the HiFive1 developer kit. But I can get an Arduino, MSP 430, PIC-8, STM32 ARM board, or an ARM board with video out like the Raspberry Pi for $10-30 less with more support.

@Karl Stevens:

The core instructions are always the same. But there are extensions that can be integrated.

There are standardized extensions, like the compressed instruction set, which is similar to Arm Thumb-2, or other multimedia extensions similar to MMX, etc.

These extensions get implemented in GCC. But there is also space for non-standard extensions. And this is the nice thing: everyone can implement non-standard extensions for their own individual needs. Of course, these are not integrated in GCC. In the end, this results in high flexibility and efficiency, and everyone can tweak the core to their needs.
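The point that standard extensions coexist with the base encoding can be illustrated with the compressed (C) extension: a decoder tells a 16-bit instruction from a 32-bit one by its lowest bits alone. The sketch below walks a little-endian byte stream and splits it into instructions. It deliberately rejects the longer (48-bit and up) encodings the specification reserves, and the sample bytes (`c.nop` followed by the 32-bit `nop`) are chosen for the example.

```python
from typing import List, Tuple


def insn_length(low_halfword: int) -> int:
    """Return instruction length in bytes from its first 16-bit parcel."""
    if low_halfword & 0b11 != 0b11:
        return 2  # compressed (RVC) instruction
    if (low_halfword >> 2) & 0b111 != 0b111:
        return 4  # standard 32-bit instruction
    raise ValueError("48-bit or longer encoding; not handled in this sketch")


def split_stream(code: bytes) -> List[Tuple[int, int, int]]:
    """Split little-endian code bytes into (offset, length, word) tuples."""
    out: List[Tuple[int, int, int]] = []
    off = 0
    while off + 2 <= len(code):
        half = int.from_bytes(code[off:off + 2], "little")
        n = insn_length(half)
        if off + n > len(code):
            raise ValueError(f"truncated instruction at offset {off}")
        out.append((off, n, int.from_bytes(code[off:off + n], "little")))
        off += n
    if off != len(code):
        raise ValueError("trailing byte after last instruction")
    return out


# c.nop (0x0001) followed by the 32-bit nop (addi x0, x0, 0 = 0x00000013)
print(split_stream(b"\x01\x00\x13\x00\x00\x00"))
```

Because the length test looks only at fixed low-order bits, a core without the C extension, or with custom instructions in the reserved opcode space, can still share the same decoding rule.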