Intel’s Mark Bohr opens up on the company’s push into multi-chip solutions and on upcoming issues at 7nm and 5nm.

Mark Bohr, senior fellow and director of process architecture and integration at Intel, sat down with Semiconductor Engineering to discuss the growing importance of multi-chip integration in a package, the increasing emphasis on heterogeneity, and what to expect at 7nm and 5nm. What follows are excerpts of that interview.

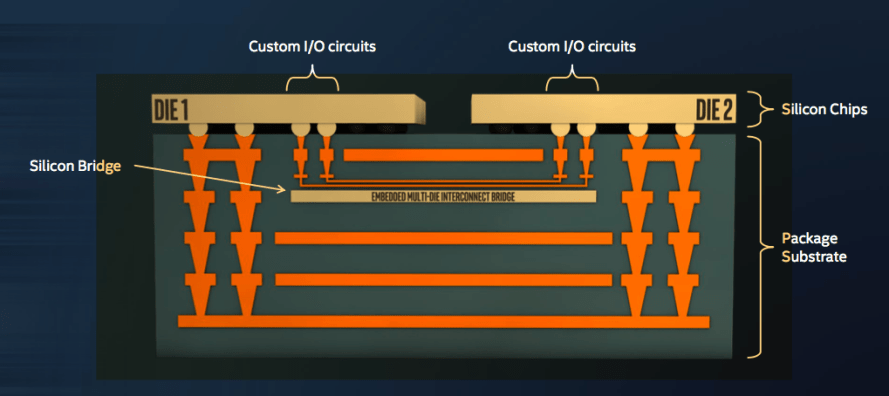

SE: There’s a move toward more heterogeneity in designs. Intel clearly had this in its sights when it developed its Embedded Multi-die Interconnect Bridge (EMIB). What’s behind this development?

Bohr: I agree that our industry, including Intel, is heading toward more heterogeneous integration, whether it’s packing different types of circuits onto one SoC or using different multi-chip packaging technologies. EMIB is one of Intel’s latest inventions for multi-chip packages. The basic concept is that you can take different chips on one plane or in one package and connect them together with high-density interconnects using embedded silicon bridges. That’s a much more effective way of connecting multiple die than using normal package traces, which have lower density and looser pitches, so you can’t get a very high-bandwidth connection between two or more chips on the package. EMIB allows you to do that, and it makes heterogeneous integration easy: you can connect a memory chip to a logic chip next to a communication chip, and pick and choose the technologies that are best for each function.

Fig. 1: Embedded Multi-die Interconnect Bridge. Source: Intel.

SE: Are you finding any use for FPGAs in those packages alongside ASIC CPUs?

Bohr: Our 14nm FPGA product, from a team that used to be Altera, is using this embedded bridge technology to connect the main FPGA chip to high-speed transceivers. That’s a good, compact way of building an FPGA, using the right technology for each piece. One technology is used for the high-speed transceivers, and alongside it sits the FPGA fabric chip, built on Intel’s 14nm technology to take advantage of its density.

SE: One of the rationales initially for why all this stuff would be packaged together was that analog would be developed at 90nm or 130nm and the digital logic would be developed at a different node. But most of what we’ve seen out of the packaging at this point has been homogeneous in terms of the process technology. Is that going to change?

Bohr: Our intent going forward is to make use of different process technologies for heterogeneous integration. There are at least two advantages to that approach. One is that you might get better circuit performance or power if you tune the process to each circuit function. The other is time to market. You may want to put together a system in which some pieces are pulled off the shelf and put into the multi-chip package, while another piece is a new chip built on the new technology you just developed.

SE: But IP that can work with these various interconnect technologies (interposers or your EMIB) has to be designed specifically for that purpose, right? Not just any off-the-shelf component can be used.

Bohr: That’s true. With an embedded bridge you can’t take a chip off the shelf because it won’t have quite the right I/O circuits or quite the right connections to the embedded bridge. So you have to redesign that part of the chip. But that’s a trivial part of the chip.

SE: Who is going to handle this? Is Intel going to be selling these pieces or are you pulling in third-party IP to pull this together?

Bohr: It may differ by market segment. Initially, most of this is internal Intel pieces.

SE: Do you have a list of all these IP blocks that potentially can be used in this? Is there a menu that people can go through?

Bohr: I can’t really say that’s the case. Embedded bridge technology is primarily intended for our internal products. It can also be offered to foundry customers, and of course a foundry customer can choose which chips to include in the package and how to connect them together.

SE: Is ARM part of this?

Bohr: ARM is part of our foundry offerings now. We offer ARM IP, which can be put on Intel chips and be part of an Intel chip, whether it’s a 14nm or 10nm chip. That’s an IP block, not necessarily a separate chip.

SE: Is the idea of 3D chip stacking with TSVs still alive?

Bohr: There are thermal issues to contend with. You can’t put two high-power, high-density chips one on top of another, because it’s difficult to draw heat out of the lower chip, which can get too hot to function properly. 3D chip stacking is still an option for the lower-power mobile space, where high power density isn’t a particular problem and package footprint is at a premium, so you’re willing to do things like chip stacking to get a small footprint. In that market, power density is not as significant as it is for mainstream laptop or server chips.

SE: How does cost compare for these new packaging technologies versus everything in one chip?

Bohr: Doing a large SoC chip is costly in two respects. First, a large chip won’t yield as well as two smaller chips. Second, a large SoC chip may need a lot of additional masking steps to provide all the process features you want, which also drives up the cost. You can imagine that in some cases two smaller chips, each built on a more optimized process, may cost less and deliver better performance and power characteristics than one large SoC chip.
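Bohr’s first cost point, that a large die yields worse than two smaller ones, can be made concrete with a textbook yield model. The sketch below is a minimal illustration assuming the simple Poisson model, Y = exp(-A·D0), a hypothetical defect density, and dies that are tested before assembly. It ignores bridge, assembly and test costs and is not Intel’s cost model.

```python
import math


def poisson_yield(area_mm2: float, defects_per_mm2: float) -> float:
    """Fraction of good dies under the simple Poisson model Y = exp(-A * D0)."""
    return math.exp(-area_mm2 * defects_per_mm2)


def silicon_per_good_part(die_areas_mm2: list[float], defects_per_mm2: float) -> float:
    """Expected wafer area (mm^2) consumed per working multi-die part.

    Assumes each die is tested before assembly (known-good die), so every
    die type costs its own area divided by its own yield.
    """
    return sum(a / poisson_yield(a, defects_per_mm2) for a in die_areas_mm2)


D0 = 0.002  # hypothetical: 0.2 defects/cm^2 expressed per mm^2

monolithic = silicon_per_good_part([600.0], D0)    # one large SoC
split = silicon_per_good_part([300.0, 300.0], D0)  # same logic split into two dies

print(f"monolithic: {monolithic:.0f} mm^2, split: {split:.0f} mm^2 per good part")
```

With these made-up numbers the 600 mm² monolithic die consumes roughly 1,990 mm² of wafer per working part, versus roughly 1,090 mm² for the two-die split. The gap shrinks as the defect density falls.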

SE: Anything that companies working with this technology will have to keep in mind in terms of the chip design specs and various IP blocks?

Bohr: A high-bandwidth silicon bridge is an important process technology capability, but there are design capabilities as well. We designed special dense, low-power I/O circuits to communicate across the embedded bridge chip, so you have two chips talking to each other through relatively small, low-power I/O circuits. You want to develop both the process and the circuit design to get the biggest benefit from that combination.

SE: How far does your roadmap extend on nodes? How much visibility do you have?

Bohr: We’ve been shipping 14nm products for more than three years now, and that’s been a very successful technology for us. We’re on track to begin shipping 10nm products in the second half of this year. 7nm technology is already well into its development phase, so I know it will happen. And I’m spending most of my time on our 5nm technology, and we have some interesting and viable ideas to make that happen. For Intel, each of those generations represents about a two-times increase in transistor density, so they continue to follow Moore’s Law, and they are still fairly represented by the node name. For other companies, you can’t tell much from the node name. Intel is still consistent with the past trend of doubling transistor density with each node. Our 14nm technology delivers a logic transistor density of about 37.5 million transistors per square millimeter. Our 10nm technology delivers about 100 million transistors per square millimeter, so it’s more than a 2.5 times density increase. For the other companies just shipping 10nm, we expect what they call 10nm to have a measured logic transistor density close to 50 million transistors per square millimeter, not the 100 million per square millimeter that we offer at 10nm. It’s almost a full generation difference.
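The density figures Bohr quotes are easy to check. The short sketch below simply restates his numbers (Intel’s 14nm and 10nm, and Intel’s estimate for other foundries’ 10nm) and computes the ratios. The factor of about 2x per node is his rule of thumb; expressing the gap in “generations” with a base-2 logarithm is an illustrative convention, not his.

```python
import math

# Logic transistor densities quoted in the interview, in millions of transistors per mm^2.
INTEL_14NM = 37.5
INTEL_10NM = 100.0
OTHERS_10NM_ESTIMATE = 50.0  # Intel's expectation for competitors' "10nm"

intel_node_gain = INTEL_10NM / INTEL_14NM        # 14nm -> 10nm density gain
foundry_gap = INTEL_10NM / OTHERS_10NM_ESTIMATE  # Intel 10nm vs. others' 10nm

# With roughly 2x density per node, a density ratio r corresponds to log2(r) generations.
gap_in_generations = math.log2(foundry_gap)

print(f"14nm -> 10nm: {intel_node_gain:.2f}x")
print(f"Intel 10nm vs. others' 10nm: {foundry_gap:.1f}x (~{gap_in_generations:.1f} generations)")
```

This prints a 2.67x gain from 14nm to 10nm and a 2.0x gap, which at about 2x per node is the “almost a full generation” Bohr describes.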

SE: Will any new materials be required as you start pushing down into the real 7nm and 5nm? What will those transistor structures look like?

Bohr: I can’t divulge specific answers, but we do have some promising process flows and materials that allow those transistors and interconnects to scale in a way that is consistent with the goal of roughly doubling transistor density with each new node. Both transistors and interconnects require continual inventions and new materials. It’s not just taking the same structure and shrinking it.

SE: One of the biggest problems has been the contacts at the most advanced nodes. Is that still an issue?

Bohr: It’s a challenge to print and pattern them, and also a challenge to achieve a low enough contact resistance. When the contact area is reduced by a factor of two, the resistance per contact goes up based on the area alone. Also, the desired materials don’t always fit in the contact when it’s made smaller, which is another reason for contact resistance to go up. That’s an example where we have to pay close attention not just to shrinking feature sizes, but to developing better materials and finding ways to achieve low resistance and good performance.
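The area argument follows from the usual first-order model of an ohmic contact, in which the interface resistance is the specific contact resistivity divided by the contact area, R = ρc / A. The sketch below is illustrative only: the resistivity value and contact dimensions are hypothetical, and it models only the interface term, not the material-fit effect Bohr also mentions.

```python
NM2_TO_CM2 = 1e-14  # 1 nm = 1e-7 cm, so 1 nm^2 = 1e-14 cm^2


def contact_resistance_ohm(rho_c_ohm_cm2: float, width_nm: float, length_nm: float) -> float:
    """First-order interface resistance of one contact: R = rho_c / A."""
    area_cm2 = width_nm * length_nm * NM2_TO_CM2
    return rho_c_ohm_cm2 / area_cm2


RHO_C = 1e-9  # hypothetical specific contact resistivity, ohm*cm^2

r_old = contact_resistance_ohm(RHO_C, 20, 40)  # hypothetical 20 nm x 40 nm contact
r_new = contact_resistance_ohm(RHO_C, 20, 20)  # same contact with half the area

print(f"{r_old:.0f} ohm -> {r_new:.0f} ohm ({r_new / r_old:.1f}x)")
```

Halving the area doubles the interface resistance, from 125 Ω to 250 Ω with these hypothetical values, before any material effects are counted.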

SE: There are predictions that bulk CMOS will run out of steam, but Intel has been able to extend it for years. Is there an end to this?

Bohr: It depends on how you define bulk CMOS, because we went from planar to finFET. That’s a big change, and it’s been paying off in terms of the ability to scale. Beyond that, will we have to go to nanowires or non-silicon channel materials? The answer is, eventually, yes. We’re exploring both of those avenues to enable transistor scaling. But today’s CMOS transistors are pretty darn good at delivering high performance and low leakage across a wide voltage range. It’s pretty tough to surpass today’s capabilities, or to replace the present structures and materials, and still meet that broad range of device requirements.

SE: Two other issues keep coming up. One is process variability as you get down to the single-digit feature sizes. The second involves quantum effects at 5nm. What are you seeing?

Bohr: There’s variability of devices, whether it’s transistor or interconnect variability, and there are quantum-mechanical effects when you get to very small transistor dimensions. We have to find ways around both of those. In the case of interconnects, at 14nm we introduced spacer-based patterning. Instead of being defined by a photoresist feature, the line is defined by a spacer that is deposited at the target pattern width. That gives much better line-width control than any resist. It’s an example of new materials, process flows or structures helping to address a growing variability problem.
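Why a spacer gives better line-width control can be shown with simple geometry. In generic self-aligned double patterning (SADP), spacers are deposited on both sidewalls of a lithographically printed mandrel, the mandrel is removed, and the spacers become the lines. The sketch below assumes that generic flow, not Intel’s specific process, and uses hypothetical dimensions. The line width equals the deposited spacer thickness, so a lithography error in the mandrel shifts the spacing between lines but not their width.

```python
from dataclasses import dataclass


@dataclass
class Line:
    left_nm: float
    right_nm: float

    @property
    def width_nm(self) -> float:
        return self.right_nm - self.left_nm

    @property
    def center_nm(self) -> float:
        return (self.left_nm + self.right_nm) / 2


def spacer_lines(mandrel_pitch_nm, mandrel_width_nm, spacer_nm, n_mandrels):
    """Lines left after spacers form on both sidewalls of each mandrel and the
    mandrels are stripped. Line width is set by the spacer deposition only."""
    lines = []
    for i in range(n_mandrels):
        x = i * mandrel_pitch_nm  # left edge of mandrel i
        lines.append(Line(x - spacer_nm, x))  # spacer on the left sidewall
        lines.append(Line(x + mandrel_width_nm, x + mandrel_width_nm + spacer_nm))  # right sidewall


    return lines


# Hypothetical: 80 nm litho pitch and a 10 nm spacer. A 30 nm mandrel (pitch/2 - spacer)
# gives evenly spaced lines at a 40 nm pitch.
nominal = spacer_lines(80, 30, 10, 3)
mis_printed = spacer_lines(80, 34, 10, 3)  # mandrel printed 4 nm too wide

print([ln.width_nm for ln in nominal])
print([ln.width_nm for ln in mis_printed])
```

Both lists show every line at 10 nm wide. In the mis-printed case only the spacing alternates, which is the kind of error that shows up as pitch variation rather than line-width variation.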

SE: What happens with quantum effects? Is it that electrons don’t move through materials at a consistent rate, so device behavior is less deterministic?

Bohr: The biggest concern is tunneling when a barrier to electron flow gets too small—either the gate oxide thickness is too thin, or the depletion region around junctions is too narrow. You don’t have the on/off control that you had before, so we have to find ways of avoiding or suppressing that problem. We have to find a way forward on the 5nm generation.
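Why a thinner barrier is so damaging can be seen from the textbook WKB estimate for tunneling through a rectangular barrier, T ≈ exp(-2κd), with κ = sqrt(2m(V − E))/ħ. The sketch below is a rough illustration, not a device model. It assumes the free-electron mass and an effective barrier of about 3.1 eV (roughly the Si/SiO2 conduction-band offset), and shows that the tunneling probability grows exponentially as the barrier thickness d shrinks.

```python
import math

HBAR = 1.054571817e-34  # reduced Planck constant, J*s
M_E = 9.1093837015e-31  # free-electron mass, kg (assumption: no effective-mass correction)
EV = 1.602176634e-19    # joules per electron-volt


def wkb_transmission(barrier_ev: float, thickness_nm: float, mass_kg: float = M_E) -> float:
    """WKB transmission through a rectangular barrier: T ~ exp(-2 * kappa * d)."""
    kappa = math.sqrt(2 * mass_kg * barrier_ev * EV) / HBAR  # decay constant, 1/m
    return math.exp(-2 * kappa * thickness_nm * 1e-9)


BARRIER_EV = 3.1  # assumption: roughly the Si/SiO2 conduction-band offset

for d in (3.0, 2.0, 1.5, 1.0):
    print(f"{d:.1f} nm barrier: T ~ {wkb_transmission(BARRIER_EV, d):.1e}")
```

Under these assumptions, thinning the barrier from 2 nm to 1 nm raises the transmission by nearly eight orders of magnitude, which is why on/off control degrades so quickly.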

SE: Intel is using air-gap technology. How does that fit into the whole picture?

Bohr: We introduced air-gap technology at 14nm for two metal layers. That provides a nice capacitance reduction. It did add the cost of a masking layer in each case, so we have to carefully weigh the value of the lower capacitance against the cost of adding an extra mask. That’s still an option going forward, but it will be decided on a product-by-product basis.

SE: Bringing this back full circle, what does a chip look like after 5nm? Is it a mix of things? Is it a very limited amount of logic that goes into 3nm/5nm mixed with something else, or is everything still on one chip?

Bohr: With the wider range of multi-chip package technology available to us, whether it is a traditional MCP (multi-chip package) or an embedded bridge or whether it’s die stacking, that certainly gives us an extra degree of freedom of how we optimize a product or a system. We can put it all on one chip, and in some cases that may be the best solution, or we may break it up into two or three separate chips and connect them together in some way. Now you can have each chip better optimized for its respective circuit function.

SE: And you can start getting around some of the problems that show up as you start shrinking the diameter of wires, such as RC delay, because you can use a fatter pipe for communicating over shorter distances. But where do you draw the line for not shrinking everything?

Bohr: That’s a hard question to answer, but you touched on one important point. High-performance chips tend to like wider wires. Dense low-power circuits like graphics still prefer the smallest dimensions—the highest transistor density that you can pack. We can still do that on one piece of silicon. We can still have one part of the chip where we use wider wires for better performance and use minimum pitch wires in other areas where we want the best density.
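The benefit of wider wires can be estimated with two first-order formulas: a wire’s resistance per unit length is r = ρ/(W·T), and the Elmore delay of a distributed RC line is about r·c·L²/2. The sketch below assumes bulk copper resistivity (real narrow lines are worse because of surface and grain-boundary scattering), a hypothetical capacitance per length held fixed for both widths, and hypothetical dimensions. In a real layout, widening a wire at fixed pitch also changes its capacitance.

```python
RHO_CU = 1.7e-8           # ohm*m, bulk copper (assumption: ignores size effects)
C_PER_M = 0.2e-15 / 1e-6  # hypothetical 0.2 fF/um, expressed in F/m


def resistance_per_m(width_m: float, thickness_m: float, rho: float = RHO_CU) -> float:
    """Wire resistance per unit length: r = rho / (W * T)."""
    return rho / (width_m * thickness_m)


def elmore_delay_s(r_per_m: float, c_per_m: float, length_m: float) -> float:
    """Elmore delay of a distributed RC line: r * c * L^2 / 2."""
    return 0.5 * r_per_m * c_per_m * length_m ** 2


LENGTH = 100e-6    # hypothetical 100 um route
THICKNESS = 40e-9  # hypothetical metal thickness

narrow = elmore_delay_s(resistance_per_m(20e-9, THICKNESS), C_PER_M, LENGTH)
wide = elmore_delay_s(resistance_per_m(40e-9, THICKNESS), C_PER_M, LENGTH)

print(f"20 nm wire: {narrow * 1e12:.1f} ps, 40 nm wire: {wide * 1e12:.1f} ps")
```

Doubling the width halves the resistance and, with capacitance held fixed, halves the delay. Because delay grows with L², the gain matters most on long, performance-critical routes.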

SE: And this is the big change in Moore’s Law, right? It’s not that you can’t shrink features. It’s that the need to shrink will vary by application.

Bohr: That’s a fair point. It’s no longer a one-transistor-fits-all, like where we were maybe 20 years ago when we would develop a chip that could serve many purposes, whether it was an SRAM memory chip or high-performance logic chip. That is becoming harder and harder to meet with just one technology solution. You need at least a system on chip—a chip that offers a range of device types tuned to specific purposes—or multi-chip solutions going forward. New products in the future won’t be based on just one technology or one transistor type. It will be how well you combine different technologies into a small package.

SE: Are you starting to run into any physical limits in terms of how far you can push some of the materials such as thin films? Do we need to start developing new areas in the industry?

Bohr: One very valuable enabler, now of course many years old, is atomic layer deposition (ALD), where you can deposit one molecular layer at a time. With great precision, you can put down a film that is exactly four, five or six atoms thick, and it’s also very conformal: it can go down the sidewalls of features as well as across the top surface. ALD has been an important enabler in some cases, in particular for high-k gate dielectrics. The problem with ALD is that you can’t deposit any film you want. Only certain films have chemistry that allows them to be deposited that way. In the meantime, we’re still using a variety of PVD and sputtered films, but there are challenges in getting them down to the thickness we want and the conformality we need.

SE: ALD is also notoriously slow, as is ALE (atomic layer etch). Is that starting to add to the cost?

Bohr: If you are only depositing a few molecular layers, then speed is not the issue. If you want to deposit a one-micron-thick film, then obviously you wouldn’t choose ALD. There are plenty of CVD or sputtering techniques that will deposit those types of films for you.
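Bohr’s point about speed comes down to cycle counts. ALD grows a film in self-limiting cycles, each adding a fraction of a nanometer, so total time scales with thickness. The sketch below assumes a growth per cycle of about 1 Å (0.1 nm), on the order of what thermal ALD oxides achieve, and a hypothetical 2-second cycle. Real values depend on the chemistry and the tool.

```python
GROWTH_PER_CYCLE_A = 1  # assumption: ~1 angstrom (0.1 nm) per cycle
CYCLE_TIME_S = 2.0      # hypothetical duration of one dose/purge/dose/purge cycle


def ald_cycles(thickness_a: int, gpc_a: int = GROWTH_PER_CYCLE_A) -> int:
    """ALD cycles needed to reach a thickness (integer angstroms, ceiling division)."""
    return -(-thickness_a // gpc_a)


def ald_hours(thickness_a: int) -> float:
    """Deposition time in hours under the assumed cycle time."""
    return ald_cycles(thickness_a) * CYCLE_TIME_S / 3600


gate_film = 20       # 2 nm: a film on the scale of a high-k gate dielectric
thick_film = 10_000  # 1 micron

print(f"2 nm: {ald_cycles(gate_film)} cycles, {ald_hours(gate_film) * 3600:.0f} s")
print(f"1 um: {ald_cycles(thick_film)} cycles, {ald_hours(thick_film):.1f} h")
```

With these assumptions a 2 nm film takes 20 cycles (about 40 seconds), while a 1 µm film would need 10,000 cycles and more than five hours, which is why thick films go to CVD or sputtering instead.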

SE: Looking out at the challenges you have to wrestle with, what worries you most?

Bohr: Scaling all these feature sizes in a cost-effective way, and being able to do it with high yield. You don’t always know the yield problems until you are halfway into a new technology. Having a way to quickly identify all the sources of defects and then fix them is what I worry about most: making sure we achieve the high yields we have had in the past.

SE: Could you do this and develop it effectively if you didn’t have your own fab there?

Bohr: We have a development fab here in Oregon, right next to me, that is the size of 4.5 football fields and runs 24 hours a day, 7 days a week, 365 days a year. We need that fab capability, not just to do the basics, but to learn how to put it all together so that it yields and meets the performance and reliability targets that are needed.

SE: Will that serve you the same way as you start mixing and matching all these different components into the different packages?

Bohr: Yes, I think so. It’s still a unique advantage to have a world-class process development team and a world-class research team. When we develop a new technology, we can tap our research and development engineers and also engage our product design engineers to help us set the right process targets.

SE: Intel has a foundry as well that is open to the general public. You’ve had a number of high-profile customers in the past, one of whom you bought. Where do you see your customers going? Are they pushing down into the latest and greatest nodes or are they sitting back at 14/22nm?

Bohr: Most of the interest is in our 10nm technology, which is clearly a leadership technology. Mostly, they come to us for that. And, of course, for high performance and low leakage/low power.

SE: But it’s no longer a classic shrink and you get the automatic returns, right? Now, you shrink and there are other things you have to do in terms of routing of signals, avoiding contention for resources, and those sorts of things that you didn’t have to worry about in the past.

Bohr: Good point. In the past, shrinking technology and getting improved performance was like falling off a log. It just came to you. That’s no longer true. When you scale wires, the interconnect gets slower, and the transistors are no longer faster when you scale them. You have to invent new materials and structures to get the speed that you want. What does still come naturally is a reduction in dynamic capacitance, which means a reduction in active power. Scaling still delivers lower power per function, as well as improved performance when and where you need it.

SE: But dynamic power density does become a bigger problem, right? Yes, your power goes down but total dynamic power density and risk of things like self-heating go up?

Bohr: To a degree. Active power does come down, but you’re right that power density does go up slightly. It’s a product-by-product issue, but from our perspective power density is still holding its own.
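The tradeoff in this exchange follows from the standard switching-power formula, P = α·C·V²·f. The sketch below uses hypothetical scaling factors (capacitance x0.7, supply voltage x0.95, area x0.5, frequency unchanged), not Intel data, to show how power per function can fall while power density rises when area shrinks faster than power.

```python
def dynamic_power_w(activity: float, cap_f: float, vdd_v: float, freq_hz: float) -> float:
    """Switching power P = alpha * C * V^2 * f."""
    return activity * cap_f * vdd_v ** 2 * freq_hz


# Hypothetical block on the old node (illustrative values only).
ALPHA, FREQ = 0.1, 2e9
old_power = dynamic_power_w(ALPHA, 1e-9, 0.8, FREQ)
old_area_mm2 = 10.0

# Hypothetical shrink: C x0.7, Vdd x0.95, area x0.5, same activity and frequency.
new_power = dynamic_power_w(ALPHA, 0.7e-9, 0.8 * 0.95, FREQ)
new_area_mm2 = old_area_mm2 * 0.5

power_ratio = new_power / old_power
density_ratio = (new_power / new_area_mm2) / (old_power / old_area_mm2)

print(f"power per function: x{power_ratio:.2f}, power density: x{density_ratio:.2f}")
```

With these numbers power per function drops by a bit more than a third while power density rises by about a quarter. A larger voltage reduction would shrink that increase.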

SE: We’ve been hearing about the push to parallelism for many years. There seems to be a whole new effort where this is truly starting to fit into a lot of applications well outside of the classic database market and embarrassingly parallel types of applications like video and image processing. What does that mean in terms of how you scale a chip?

Bohr: Parallel computing can certainly take better advantage of Moore’s Law scaling than traditional CPU cores that rely on single-threaded performance. Scaling works well with graphics circuits, where performance can be measured by how many more execution units fit on the die to do the graphics processing. Single-threaded cores are likely to run at a higher frequency, and that’s where you need wider wires and high-performance transistors. But if we can bring more parallel computing techniques to those types of functions, it will work better going forward.
