A new technical paper titled “Low-Overhead Reinforcement Learning-Based Power Management Using 2QoSM” was published by researchers at ETH Zurich and Georgia Tech.

Abstract

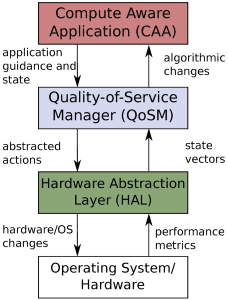

“With the computational systems of even embedded devices becoming ever more powerful, there is a need for more effective and pro-active methods of dynamic power management. The work presented in this paper demonstrates the effectiveness of a reinforcement-learning based dynamic power manager placed in a software framework. This combination of Q-learning for determining policy and the software abstractions provide many of the benefits of co-design, namely, good performance, responsiveness and application guidance, with the flexibility of easily changing policies or platforms. The Q-learning based Quality of Service Manager (2QoSM) is implemented on an autonomous robot built on a complex, powerful embedded single-board computer (SBC) and a high-resolution path-planning algorithm. We find that the 2QoSM reduces power consumption up to 42% compared to the Linux on-demand governor and 10.2% over a state-of-the-art situation aware governor. Moreover, the performance as measured by path error is improved by up to 6.1%, all while saving power.”
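To make the Q-learning idea in the abstract concrete, here is a minimal sketch of a tabular Q-learning agent that picks a CPU frequency level. It is not the authors' 2QoSM implementation. The frequency levels, the state bins, the reward weights, and the names `QLearningGovernor` and `reward` are all assumptions made up for illustration; only the standard Q-learning update rule is taken as given.

```python
import random

# Hypothetical values for illustration only; none of them come from the paper.
FREQ_LEVELS = [600, 1000, 1400, 1800]  # assumed CPU frequencies in MHz (the actions)
N_STATES = 5                           # assumed number of discretized QoS-error bins


class QLearningGovernor:
    """Tabular Q-learning agent that selects an index into FREQ_LEVELS."""

    def __init__(self, n_states=N_STATES, n_actions=len(FREQ_LEVELS),
                 alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
        # One row per state, one column per action, all initialized to zero.
        self.q = [[0.0] * n_actions for _ in range(n_states)]
        self.alpha = alpha      # learning rate
        self.gamma = gamma      # discount factor
        self.epsilon = epsilon  # exploration probability
        self.rng = random.Random(seed)

    def choose(self, state):
        """Epsilon-greedy selection: explore at random, otherwise take the best-known action."""
        if self.rng.random() < self.epsilon:
            return self.rng.randrange(len(self.q[state]))
        row = self.q[state]
        return row.index(max(row))

    def update(self, state, action, reward_value, next_state):
        """Standard Q-learning update toward reward + gamma * max_a' Q(s', a')."""
        td_target = reward_value + self.gamma * max(self.q[next_state])
        self.q[state][action] += self.alpha * (td_target - self.q[state][action])


def reward(power_watts, qos_error, power_weight=1.0, error_weight=1.0):
    """Assumed reward shape: penalize both power draw and application error."""
    return -(power_weight * power_watts + error_weight * qos_error)
```

In a control loop, one would call `choose` with the current state, apply `FREQ_LEVELS[action]`, measure power and application error, and then call `update` with the resulting reward. The table lookup and single arithmetic update per step are what keep this style of learner cheap to run.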

The open access technical paper is available at the DOI listed in the citation below. Published May 2022.

Giardino, M.; Schwyn, D.; Ferri, B.; Ferri, A. Low-Overhead Reinforcement Learning-Based Power Management Using 2QoSM. J. Low Power Electron. Appl. 2022, 12, 29. https://doi.org/10.3390/jlpea12020029

Source: technical paper “Low-Overhead Reinforcement Learning-Based Power Management Using 2QoSM”
