Emulation adds new capabilities that were not possible with simulation.

The expansion of emulation beyond functional verification is making it possible to do power analysis over longer spans of time. The result is a fast and effective way to analyze real-world scenarios.

This is a new field, and it marks a new use of this technology. While it is still evolving, several ideas have surfaced about the best methodology and the best way to utilize an emulator.

There are two aspects to power verification. The first is more akin to functional verification, where the verification is associated with power intent, as described in a Unified Power Format (UPF) file or similar format. The second is dynamic power analysis, which is used to find both the peak power and average power over periods of time much greater than could be achieved using simulation.

Each of these use cases (power intent, peak power and average power) requires different capabilities in the emulator and in the software performing the analysis. The industry is still looking for the best ways to allow users to address these in the most efficient manner.

Verifying the power intent

Power intent is usually specified in a UPF file. “It articulates all of the regions you are dealing with in the design, the areas that you want to switch on and off, or to voltage regulate,” says Frank Schirrmeister, group director for product marketing of the System Development Suite at Cadence. “What we do is to mimic the effect of the power intent while doing simulation. If the design switches off five regions, then we simulate the design as such and make sure that the switching is going on correctly. We do the same with emulation. We literally create the logic, which gives you the power isolation for each power region. When it is switched off, the emulation logic simulates the effect, and the same for when you come out of a deep shutdown. What happens to the data? Is it initialized or randomized? This is verification of the power intent.”

Power analysis

The biggest strides have been made in power analysis using the emulator. “Power analysis or power estimation is based on tracking the switching activity of all elements inside the design,” says Lauro Rizzatti, a verification expert. “Average power consumption is estimated by cumulatively collecting the switching activity, regardless of the timing or cycle when the activity actually occurred. Peak power consumption is calculated by tracking the switching activity on a cycle by cycle basis.”
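A minimal sketch of that distinction, assuming the emulator has already produced a toggle count for each cycle. The function name and the sample trace are hypothetical and not taken from any vendor tool. The average depends only on the cumulative count, while the peak needs the cycle-by-cycle view.

```python
from typing import Sequence, Tuple


def average_and_peak_toggles(toggles_per_cycle: Sequence[int]) -> Tuple[float, int, int]:
    """Return (average toggles per cycle, peak toggles, index of the peak cycle)."""
    if not toggles_per_cycle:
        raise ValueError("need at least one cycle of activity")
    # Average: accumulate all toggles; the order of the cycles does not matter.
    average = sum(toggles_per_cycle) / len(toggles_per_cycle)
    # Peak: needs per-cycle tracking to know which cycle was the busiest.
    peak_cycle = max(range(len(toggles_per_cycle)), key=toggles_per_cycle.__getitem__)
    return average, toggles_per_cycle[peak_cycle], peak_cycle


# Hypothetical trace: total toggles observed in five consecutive cycles.
trace = [120, 95, 400, 110, 75]
print(average_and_peak_toggles(trace))  # (160.0, 400, 2)
```

Reordering the trace leaves the average unchanged but moves the peak, which is why the two measurements place different demands on data capture.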

While all of this was possible in the past with simulation, design size and complexity meant that important aspects of the design could only be reached by long runs, often using some or all of the production software executing on the processors within the chip. Thus, the limitations of simulation were putting chips in danger because simulation runs could miss some of the problematic areas or some of the opportunities for additional power savings.

It also enables better design. “There are a few design applications that are more concerned with longer runs than others,” says Preeti Gupta, director for RTL product management at Ansys. “For example, consider graphics where they are interested in the power consumption for a complete video frame. This is 10s of milliseconds. Before, they had to extrapolate from a subset of the frame. Now they are running it flat to understand what the power consumption is.”

There are several views that can help understand power consumption. “You can create an activity plot, which is a pictorial representation of toggling,” says Jean-Marie Brunet, director of marketing for the emulation division of Mentor Graphics. “The activity plot shows you the windows around which you may need to do a peak analysis. This would be done by creating a file that contains the activity per net. Here you are capturing the activity on the entire SoC. You can pass this to an analysis tool.”

There are different flows based on end-user applications. “Mobile cares about their battery life so they are concerned with total power over the duration of a test,” says Brunet. “They also care about peak currents and try to understand the budget for power, due to electromigration (EM) and IR drop, as well as the constraints of the package that the chip will fit into. This is also dependent on peak current.”

It is fairly simple to get power numbers from toggle activity. “So long as we continue to use CMOS logic, the power equation is quite simple,” says Drew Wingard, chief technology officer at Sonics. “It is just activity multiplied by CV² plus leakage. So you need the average activity on the average node or specific activity on every node.”
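Written out, Wingard's description looks roughly like this. The symbols are assumptions chosen for illustration and do not come from the source: $a_i$ is the switching activity of node $i$, counted as charge/discharge cycles per second, $C_i$ is the capacitance of that node, $V$ is the supply voltage, and $P_{\text{leak}}$ is the leakage power.

$$P_{\text{total}} \approx \sum_i a_i \, C_i \, V^2 + P_{\text{leak}}$$

When only an average activity $\bar{a}$ is known, the sum collapses to $\bar{a} \, C_{\text{total}} \, V^2 + P_{\text{leak}}$, where $C_{\text{total}} = \sum_i C_i$. If activity is instead counted as individual transitions in either direction, a factor of $\tfrac{1}{2}$ appears in front of the dynamic term.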

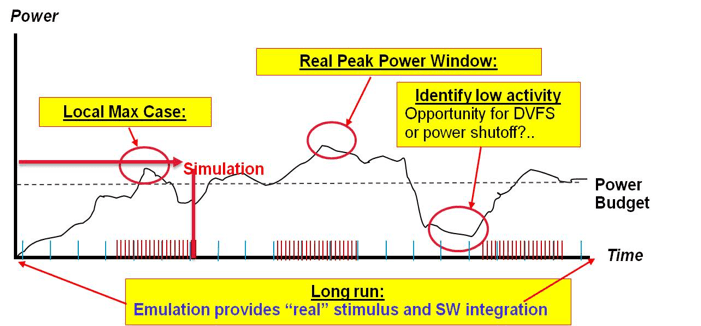

As Figure 1 indicates, choosing the right scenarios could make the difference between finding the areas that have to be addressed and the opportunities for savings, or releasing a chip that has problems. “Most people use the same use cases they used for performance analysis with a few extra ones,” says Wingard. “There are some cases that may be interesting related to standby mode that are not relevant for performance. These are often lower power use cases, but for average and total energy, they already have suitable use cases that are being analyzed for performance.”

Figure 1: Emulation extends the reach of power analysis. Source: Cadence.

Still, Mentor’s Brunet warns of problems that may exist with this approach. “To capture activity you can do it the old fashioned way of looking at the functional testbench and try to adapt that to power. But that is usually a recipe for disaster.”

Brunet suggests that benchmarks should be run on the emulator. “This is real usage. It enables you to capture activity accurately in the context of how the chip will be utilized. With mobile and multimedia, most of the end designs will run through a benchmark reference such as AnTuTu. At the end of the day, the chip will be selected based on these benchmarks. There are certain aspects of these benchmarks that focus on power. This is a change of methodology and requires booting the OS and this means running a lot of cycles, often hundreds of millions of cycles.”

There is another emerging methodology for creating testcases related to the Accellera effort. “Here you can define scenarios, which are executed on the design, that look at issues such as, ‘Will my cache coherency be impacted if I run at the same time as I am going to access something or shutting down a region of the design?'” explains Schirrmeister. “Portable Stimulus helps with the creation of this kind of scenario and can then be executed on the emulator to get the dynamic activity data.”

Adds the chief executive officer of Breker: “Portable Stimulus enables scenario models developed and debugged in simulation to be re-used in emulation to generate bigger tests. For power analysis, complicated scenarios are first generated as small tests for simulation. Then, with slight tuning, more robust tests can be generated for emulation. Portable Stimulus can coordinate what’s happening in software-driven test with what’s happening within the testbench and can easily include actual pieces of the software when they become available.”

All of that activity could mean huge files that consume lots of disk space and take time to write and read. Wingard suggests there are other ways to whittle the file sizes down. “You do not have to watch every node in every cycle, but could use some intelligent sampling techniques to get a sense for what is happening.”
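As one illustration of what sampling could look like, the sketch below estimates average activity from a random subset of cycles rather than from the full trace. Uniform random sampling is an assumption made here for simplicity; the source does not say which sampling techniques Wingard has in mind, and the function name is hypothetical.

```python
import random
from typing import Sequence


def sampled_average(toggles_per_cycle: Sequence[int],
                    sample_fraction: float,
                    seed: int = 0) -> float:
    """Estimate average toggles per cycle from a random sample of cycles."""
    if not toggles_per_cycle:
        raise ValueError("need at least one cycle of activity")
    rng = random.Random(seed)  # fixed seed so the estimate is repeatable
    n = max(1, int(len(toggles_per_cycle) * sample_fraction))
    picked = rng.sample(range(len(toggles_per_cycle)), n)  # cycles to inspect
    return sum(toggles_per_cycle[i] for i in picked) / n


# Hypothetical usage: look at 10% of the cycles of a long trace.
trace = [100] * 900 + [500] * 100
print(sampled_average(trace, 0.10))
```

A sample like this suits average-power questions better than peak-power questions, since a short spike can fall between the sampled cycles.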

Emulation vendors are indeed looking at multiple ways to reduce the length of runs and file sizes. “If you try and capture all of the activity it is a lot of data,” says Schirrmeister. “Instead, you can have a methodology that allows the user to hone in from a weighted toggle analysis. Then you can do a full activity analysis. So you find the windows at which you want more detail.”
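One way to picture that two-step flow, as a sketch only. The weights, window size and function names are assumptions for illustration. Here a weight stands in for how costly a net's toggling is expected to be, for example its relative capacitance. The source does not describe how any vendor actually computes a weighted toggle count.

```python
from typing import List, Sequence, Tuple


def weighted_toggle_profile(toggles: Sequence[Sequence[int]],
                            weights: Sequence[float],
                            window: int) -> List[float]:
    """Sum weighted toggles over consecutive, non-overlapping windows of cycles.

    toggles[c][n] is the toggle count of net n in cycle c (hypothetical layout).
    """
    per_cycle = [sum(w * t for w, t in zip(weights, row)) for row in toggles]
    return [sum(per_cycle[s:s + window]) for s in range(0, len(per_cycle), window)]


def hottest_window(profile: Sequence[float], window: int) -> Tuple[int, int]:
    """Return the half-open cycle range [start, end) of the busiest window."""
    idx = max(range(len(profile)), key=profile.__getitem__)
    return idx * window, (idx + 1) * window


# Two nets over six cycles; net 1 is assumed to be four times as costly as net 0.
toggles = [[1, 0], [0, 0], [1, 1], [1, 1], [0, 0], [1, 0]]
profile = weighted_toggle_profile(toggles, weights=[1.0, 4.0], window=2)
print(profile)                     # [1.0, 10.0, 1.0]
print(hottest_window(profile, 2))  # (2, 4)
```

Only the cycles in the returned range would then need a full per-net activity capture.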

There are other approaches to try and get around the file size problem. “When you capture every net on every cycle for a full SoC, you may have 200 million nets and a benchmark may run for 500 or 600 million cycles. That is a huge file and takes a long time,” admits Brunet. “If I am doing a detailed analysis around a peak, then the file is not that large. So it really is about the use model. But there are times when you really do need the complete data set and this is why we created a streaming API that bypasses that problem.” The streaming API enables the emulator to send data directly to a power analysis tool, saving the time associated with file reading and writing.
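A back-of-the-envelope calculation shows the scale Brunet is describing. It assumes, purely for illustration, one uncompressed bit per net per cycle. Real activity formats compress heavily and record changes rather than raw samples, so actual files are far smaller, but the raw figure explains why a full dump is a problem.

```python
def raw_activity_bits(nets: int, cycles: int, bits_per_sample: int = 1) -> int:
    """Raw, uncompressed size of an every-net, every-cycle capture, in bits."""
    return nets * cycles * bits_per_sample


# Figures quoted above: 200 million nets, 500 million cycles.
bits = raw_activity_bits(200_000_000, 500_000_000)
petabytes = bits / 8 / 1e15  # 8 bits per byte, 10^15 bytes per petabyte
print(petabytes)  # 12.5
```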

At the end of the day, compromises also can be made that trade off speed and file size with accuracy. Cadence provides three alternatives that do not require a full data dump.

Figure 2: Some possibilities for reducing file size. Source: Cadence.

Many of these techniques cannot provide an actual power number, but that may not be necessary. “Some people want to know how the chip behaves over a duration of time in terms of finding the power peaks,” says Ansys’ Gupta. “It’s not necessarily the magnitude of the power, but what is causing the peaks and valleys to happen. Once you have found where the peaks are, then you can do more fine-grained analysis. If you are not trying to come up with a number, you may be surprised how much you can gain on runtime while not giving up that much in accuracy of the shape of the profile. That profile addresses a pent-up need and it is being applied in several ways.”

Another accuracy tradeoff is associated with model abstraction. “Until two or three years ago you had to wait until you had the gate-level testbench, so you were close to silicon,” says Brunet. “That made it very difficult to react. If you have complete RTL and find something through the benchmark, then you do have some time to react. But there are some customers who want to start the analysis before the RTL is complete, where some blocks may only exist at a high level of abstraction.”

With emulators it is possible to integrate such blocks as virtual components. “An example may be the CPU,” continues Brunet. “Now the discussion is how accurate do you need it to be? It will run much faster than RTL, perhaps 10 to 50X, but there is no free lunch because you lose accuracy. If you start with more abstract models, then you cannot get an accurate number. But most people do not attempt to get a number. They will stop at higher levels of analysis, which is really architectural profiling.”

If you are after accurate numbers, you really have to wait until after the first layout has been performed. “Once you have a trial layout you can get more accurate power estimates,” says Wingard. “The RTL on an emulator has a different netlist, it doesn’t have the layout data and wire lengths and the clock network is a pre-engineered structure. But after a layout, I can work out what is happening with the clock trees. It may be too late to drive architectural choices but plenty early to warn you about power or thermal issues.”

Gupta explains exactly how this works. “The RTL does not have clock trees or parasitics. It does not consider glitches. It does not have power distribution. It does not have clock gating. These things get decided downstream. The emulator is creating a trace of logical activity for each named RTL net. The power analysis tool then models the clock tree and all of the other physical effects. Power analysis tools have to do all of the modeling to get the complete model of activity. This includes handling all of the power intent information that may come from a UPF file. From the UPF constraints, we can ascertain when blocks will be on and off, and by monitoring the activity of those control signals, we can compute zero power for the logic that has been powered off. We have to model the isolation cells and the retention cells and level shifters, because some of this logic will remain on and consuming power even when the block is shut off. So the emulator does not have to worry about the physical design aspects.”
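The gating step Gupta describes can be sketched in a few lines. This is a simplified illustration and not how any particular power analysis tool works. The function name, the arbitrary power units and the single `always_on_power` term (standing in for isolation cells, retention cells and level shifters) are all assumptions.

```python
from typing import List, Sequence


def domain_power(active_power: Sequence[float],
                 power_on: Sequence[bool],
                 always_on_power: float) -> List[float]:
    """Per-cycle power of one power domain, in arbitrary units.

    active_power[c]  estimated power of the switchable logic in cycle c
    power_on[c]      state of the domain's power control signal in cycle c
    always_on_power  logic that keeps consuming power when the domain is off
    """
    if len(active_power) != len(power_on):
        raise ValueError("traces must cover the same cycles")
    # Switchable logic contributes zero while the control signal is off.
    return [(p if on else 0.0) + always_on_power
            for p, on in zip(active_power, power_on)]


# Hypothetical three-cycle trace: the domain is shut off in the middle cycle.
print(domain_power([5.0, 6.0, 4.0], [True, False, True], 0.5))  # [5.5, 0.5, 4.5]
```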

Post-layout data also makes additional types of analysis possible. “At RTL, we don’t know the size of the power switches so computing things like inrush currents are difficult,” continues Gupta. “So you cannot perform transient analysis at RTL. But you can do cycle averaged analysis. If a back-end run has been performed, then more accurate numbers can be back annotated. We read in physical design data when it becomes available and use that to augment RTL power accuracy. Physical design data is an enabler in that you can extract a lot of data, but what you do with it is important.”

Conclusions

Power analysis is becoming a lot more important than it was in the past and simulation is not up to the task. Emulation has taken on the task and is looking at providing multiple tradeoffs between accuracy, the speed at which data can be collected and analyzed, and the point in the flow at which analysis can begin. Solutions now exist that range from architectural profiling all the way to back annotation of layout data, which can provide levels of accuracy previously thought possible only from gate-level analysis. This is one area of EDA where there is a lot of active innovation.
