Performance may appear to be the deciding factor, but in the end power usually wins.

Power is emerging as the dominant concern for embedded processors even in applications where performance is billed as the top design criterion.

This is happening regardless of the end application or the process node. In some high-performance applications, power density and thermal dissipation can limit how fast a processor can run. This is compounded by concerns about cyber and physical security, which require power, as well as a growing list of wired and wireless I/O protocols that can impact the overall power budget.

“Other areas that should be looked at include real-time and safety, to ensure how time-deterministic and reliable the processor can be in mission critical applications, and also extended usage conditions,” said Suraj Gajendra, senior director of technology strategy, Automotive and IoT Line of Business at Arm. “For example, can the processor consistently operate across a wide range of temperature and environmental conditions? Architects and engineers must consider all these requirements and potentially make tradeoffs based on the application where the processor is being deployed.”

As with all designs, tradeoffs and architectural decisions for high-performance embedded processors come down to what exactly you are trying to do with those processors, said Pulin Desai, group director, product marketing and management for Vision and AI DSPs at Cadence. “There are a lot of different things being done in automotive, so the edge in automotive could mean processing on the bumper or on the sideview mirrors. In any of these things, at the end of the day, even though people may think the power is not as important because you need certain performance, on edge devices low power is very important.”

This is true for driver monitoring systems, as well, which may contain an image sensor or a radar sensor. “Here, too, the power and performance are important,” Desai said. “In that monitoring system, it is also important that it works when there is no light. If you are driving at night, you want to see if the driver is falling asleep or not, or how many people are in the car. So then maybe some radar comes in the picture, or an IR-based device is utilized. In addition, there is a massive chip to do everything as you go toward autonomous driving at perhaps Level 2+ or Level 3. Many sensors come into the picture for that, and design teams are looking for a certain level of image processing.”

Even for high-performance embedded AI processors, where the focus is on tera-ops per second (TOPS), the underlying metric is TOPS per watt, Desai said. “People will give you the high TOPS number, but that’s not the final measurement. They are looking at the performance over power, or the TOPS over watts. That’s the most important thing because within certain applications, it’s about how much power you’re going to use. Throughout everything, power continues to be the most important consideration.”
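As a rough illustration of the metric Desai describes, the sketch below ranks two accelerators by TOPS per watt rather than by peak TOPS. The `Accelerator` class, the device names, and every number are hypothetical and chosen only to show how the ordering can flip once power is taken into account.

```python
from dataclasses import dataclass


@dataclass
class Accelerator:
    name: str
    peak_tops: float    # tera-operations per second (hypothetical figure)
    power_watts: float  # power drawn at that throughput (hypothetical figure)

    @property
    def tops_per_watt(self) -> float:
        return self.peak_tops / self.power_watts


def rank_by_efficiency(candidates):
    """Sort candidates from most to least efficient (TOPS per watt)."""
    return sorted(candidates, key=lambda a: a.tops_per_watt, reverse=True)


candidates = [
    Accelerator("big_core", peak_tops=100.0, power_watts=50.0),
    Accelerator("edge_core", peak_tops=8.0, power_watts=2.0),
]
ranked = rank_by_efficiency(candidates)
for acc in ranked:
    print(f"{acc.name}: {acc.peak_tops} TOPS, {acc.tops_per_watt:.1f} TOPS/W")
```

In this made-up comparison, the device with the lower headline TOPS number comes out ahead on efficiency.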

Measuring power consumption

Understanding how power is utilized in a complex finFET-node chip requires a combination of full-chip simulation early in the design cycle, as well as in-circuit monitoring throughout its lifetime. Both are essential for maximizing performance.

“Even if they are not at the extreme end of chip size and power, any high-performance processor on an advanced node often includes multiple cores and can consume significant power in the tens of watts,” said Richard McPartland, technical marketing manager at Moortec. “In-chip monitoring should be considered early in the design cycle. Thermal challenges are best addressed by embedding temperature sensors near known or potential hotspots, with typically several sensors per core. Adding temperature sensors allows throttling to be minimized to ensure maximum data throughput.”
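One way to picture how per-core temperature sensors feed a throttling decision is the minimal sketch below. It is not Moortec's scheme. The function name, the thresholds, the clock range, and the linear scaling policy are all assumptions made for illustration.

```python
def select_clock_mhz(sensor_temps_c, max_clock_mhz=2000, min_clock_mhz=500,
                     throttle_start_c=85.0, limit_c=105.0):
    """Choose a core clock from the hottest sensor reading.

    At or below throttle_start_c the core runs at full speed. Between the
    two thresholds the clock is scaled down linearly. At or above limit_c
    the clock is held at the minimum. All thresholds are hypothetical.
    """
    hottest = max(sensor_temps_c)
    if hottest <= throttle_start_c:
        return max_clock_mhz
    if hottest >= limit_c:
        return min_clock_mhz
    fraction = (limit_c - hottest) / (limit_c - throttle_start_c)
    return round(min_clock_mhz + fraction * (max_clock_mhz - min_clock_mhz))


print(select_clock_mhz([70.0, 80.0]))   # all sensors cool: full speed
print(select_clock_mhz([70.0, 95.0]))   # one hotspot: partial throttle
```

Because the decision is driven by the hottest reading, placing sensors close to the real hotspots lets the thresholds be set with less margin, which is the sense in which more sensors can reduce throttling.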

A further challenge is the power distribution network, which becomes even more complex if the chip is part of a 2.5D or 3D package. “Including voltage monitors that support multiple voltage sense points for all the critical blocks, where speed is closely correlated with supply voltage, will be invaluable at the chip bring-up and optimization stage,” McPartland said. “This will enable the power distribution and IR drops to be checked and compensated for so that performance can be optimized. Finally process monitors, in addition to providing a good measure of process speed, can help to reduce voltage guard-bands through voltage scaling schemes to address process variability and also monitor and compensate for aging.”
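The IR-drop check McPartland mentions can be sketched as a comparison between the nominal supply and the voltage measured at each sense point. The block names, the readings, and the 5% drop budget below are hypothetical. A real flow would take them from the chip's voltage monitors and its power-integrity specification.

```python
def ir_drop_report(nominal_v, sense_points_v, max_drop_fraction=0.05):
    """Return {block: (drop_volts, exceeds_budget)} for each sense point."""
    budget_v = nominal_v * max_drop_fraction
    report = {}
    for block, measured_v in sense_points_v.items():
        drop = nominal_v - measured_v
        report[block] = (round(drop, 4), drop > budget_v)
    return report


# Hypothetical bring-up readings for a 0.80 V rail.
readings = {"cpu0": 0.78, "cpu1": 0.74, "npu": 0.79}
report = ir_drop_report(0.80, readings)
for block, (drop, over) in report.items():
    print(f"{block}: drop={drop} V, over budget={over}")
```

Blocks flagged as over budget are the ones where the supply would need compensating before performance can be pushed further.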

All of these considerations depend on a number of factors, ranging from the overall SoC architecture to the kinds of computations that embedded processors are used for. In some applications, such as cryptography, DSP, or AI, a specific algorithm dominates the requirement for performance. In other cases, high performance may be needed over a broader set of operations.

“In the former case, creating a domain-specific architecture is optimal,” said Roddy Urquhart, senior marketing director at Codasip. “In this case, there is much activity in the industry around using the RISC-V ISA, and creating custom instructions will deliver high levels of specialized performance.”

A number of companies offer tools to allow architectural exploration and automatic generation of hardware and software development kits for RISC-V. In cases involving more broadly based performance, using a dual-issue rather than single-issue core can significantly improve throughput, as Western Digital has done with its RISC-V SweRV Core EH1. The two approaches could be combined, for example, by adding domain-specific instructions to the dual-issue EH1.

But developing embedded processor cores that are common across different application areas can be a challenge, especially for applications in storage, networking, wireless and automotive. Nevertheless, there are some commonalities.

“With storage and networking, we’re seeing a need for larger address spaces,” said Michael Thompson, senior product marketing manager for ARC processors at Synopsys. “Then, between storage, wireless and networking, and also to some degree in automotive, there is a need for larger clusters. A customer may say, ‘I really need to go beyond eight cores, and I’d like to do that in a multicore processor, such as a single processor with more than eight CPU cores.’ We are also seeing a growing need for hardware accelerators. In storage, design teams will attach up to three accelerators through custom instruction capabilities. But a lot of engineering teams want to do some of that through the hardware accelerator/co-processor interface for things like computational storage, artificial intelligence, and those kinds of things inside of storage controllers. In wireless, there’s a real need for hardware accelerators. In automotive, where things are still evolving, there is a need for larger cluster capabilities in a few applications, with an increasing usage of hardware accelerators expected there, particularly for artificial intelligence.”

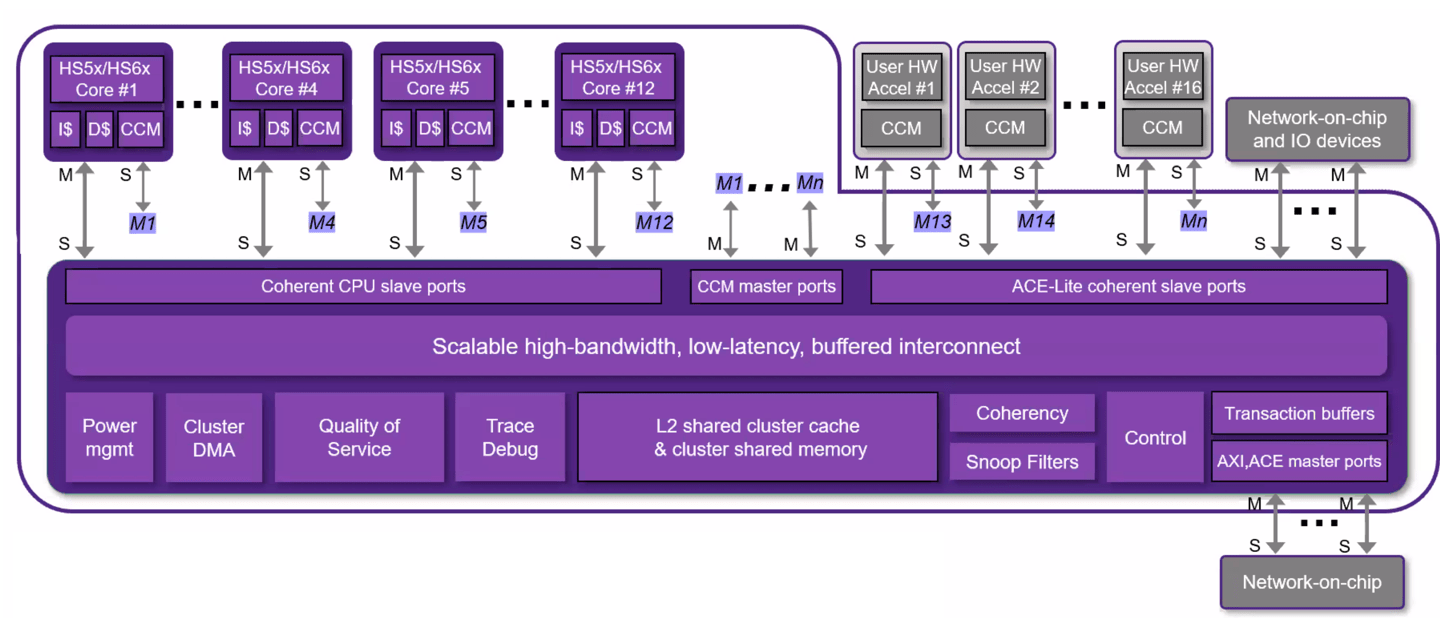

Fig. 1: Synopsys’ new embedded ARC processor design. Source: Synopsys

Memory matters

While many decisions come down to TOPS per watt, bringing memory bandwidth into the picture is required as part of the architectural decision of a high-performance embedded processor. “You don’t want to have very high memory bandwidth,” said Cadence’s Desai. “Can you work with on-chip memory rather than going to an external DDR? That saves you power. Chip architects come up with different ways of saying, ‘In a low-power mode, I’ll be very optimum like this. And then when I go into high-performance mode, power is important, TOPS per watt is important, but now I can go outside the chip for a memory.’ People look at different ways to save power, and memory bandwidth is an important consideration, along with what memories are being used.”
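To make the on-chip versus external DDR tradeoff concrete, the sketch below estimates the energy spent moving data for one workload as the share served from on-chip memory changes. The per-byte energy costs are placeholders, not measured values. They only encode the general assumption that an off-chip access costs far more energy than an on-chip one.

```python
# Placeholder energy costs per byte moved. Real values depend on the process
# node, memory type, and interface, and must come from measurement or vendor data.
ENERGY_PJ_PER_BYTE = {"on_chip_sram": 1.0, "external_ddr": 100.0}


def memory_energy_mj(bytes_moved, memory):
    """Energy in millijoules to move bytes_moved through the given memory."""
    return bytes_moved * ENERGY_PJ_PER_BYTE[memory] * 1e-9  # pJ -> mJ


def workload_energy_mj(total_bytes, on_chip_fraction):
    """Total data-movement energy when a fraction of traffic stays on chip."""
    on_chip = total_bytes * on_chip_fraction
    external = total_bytes - on_chip
    return (memory_energy_mj(on_chip, "on_chip_sram")
            + memory_energy_mj(external, "external_ddr"))


for fraction in (0.0, 0.5, 0.9):
    energy = workload_energy_mj(1e9, fraction)
    print(f"{fraction:.0%} on chip: {energy:.1f} mJ")
```

Under these assumed costs, the traffic that still goes off chip dominates the total, which is why architects try to keep working sets in on-chip memory in low-power modes.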

Specific tradeoffs, again, depend on the target market. “In any higher-end processor, such as in the mobile space or in the surveillance market or the automotive market, there’s a CPU cluster, there’s a GPU cluster, then a domain-specific processor for the vision, or for the imaging, or if there is some kind of a radar. If there is some kind of communication, then there is a block for that. So at a high level, you have a pretty good idea of what you want. But the challenge comes in your overall memory architectures. How are you going to share between the processors? How are they going to communicate with each other — the L1, L2, L3 memory hierarchy, external DDR, all of these things? At a high level, people have a very good idea. It just gets down into more detail later on, based on which domain and what you are targeting for this design,” he said.

Verification considerations

Once the design of the high-performance embedded processor is complete, it must be verified. There are a few aspects to this.

“First, you’re verifying that the performance is what you expected,” said Neil Hand, director of marketing, design verification technology at Mentor, a Siemens Business. “Let’s say you’ve designed something, whether you’ve added accelerators, whether you haven’t added accelerators, new instructions, memory architectures, and all that. You need to figure out if you’ve met the performance goals. It is necessary to enable this by giving customers the ability to run real-world benchmarks on those processors, their actual applications, with the software stack on top of virtual platforms, as well as emulation, then switch between them to run real-world performance benchmarks.”

Once it is clear the design is functioning as expected, the next question is whether it delivers the planned performance. “You put all this effort into building a high-performance processor, and the only way you’re going to validate it is with real world data because that’s all that’s going to matter,” Hand stressed.

Hybrid verification platforms are used today to switch between fast models and highly accurate models of the processor in order to do the benchmarking, and that needs to be combined with real-world software stacks.

However, while this will indicate if the performance goals were met, it will not show if there are latent performance issues hiding inside, he said. “You may have issues where you’ll get caching problems, or you’ll get excessive latency on the buses under extreme circumstances. For that, what you need to be able to do in the verification environment, which is most likely going to be a combination of a hybrid platform or emulation, is perform system-level analysis. This allows for the transactions on buses to be correlated, or to be able to look at latency across the system. This will highlight latent problems in the processor that may not affect you now. But if suddenly you find there are certain outliers when you’re doing system-level analysis while running your real-world data, and you realize you occasionally get a cache miss and it gets hung up for longer than it should, suddenly there’s a performance issue.”
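The kind of outlier hunting Hand describes can be sketched as a pass over a bus transaction trace that flags any transaction whose latency is far above the typical value. The trace format, the cycle counts, and the "five times the median" rule are assumptions for illustration, not the output or method of any particular verification tool.

```python
from statistics import median


def latency_outliers(transactions, factor=5.0):
    """Flag transactions whose latency exceeds factor x the median latency.

    Each transaction is (source, sink, issue_cycle, complete_cycle).
    """
    latencies = [done - issued for _, _, issued, done in transactions]
    threshold = factor * median(latencies)
    return [t for t, lat in zip(transactions, latencies) if lat > threshold]


# Hypothetical trace captured while running a real-world workload.
trace = [
    ("cpu0", "l2", 0, 12),
    ("cpu1", "l2", 5, 18),
    ("cpu0", "ddr", 20, 31),
    ("dsp", "ddr", 22, 240),   # a rare, much slower transaction
    ("cpu1", "l2", 40, 52),
]
slow = latency_outliers(trace)
for source, sink, issued, done in slow:
    print(f"{source} -> {sink}: {done - issued} cycles")
```

An average over this trace would hide the slow transaction. Looking at the tail, and at which source and sink were involved, is what exposes the latent issue.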

For high-performance processors that are used in real-time systems, excessive latency can be catastrophic for the entire system. “That’s the second part of the verification challenge of high-performance embedded processors: making sure you’re doing system-level analysis and performance profiling,” Hand said. “It’s not just brute force performance that you would normally do by running benchmarks. It’s looking at what’s happening inside the system, looking at the cause and effect, the correlation between transaction sources and sinks, in order to understand whether there are some latent performance issues that haven’t necessarily caused a problem yet but will come back to bite you later on.”

System-level analysis becomes critical in heterogeneous designs because high-performance processors do not live in isolation. Typically, they share infrastructure with other high-performance processors and accelerators. “You’ve got to be able to run everything in that system context,” he said. “You’ve got to do the analysis in that system-level context in order to understand if the design requirements have been met, and if there may be something that needs to be investigated, because it may become an issue later on.”

Conclusion

For the design of high-performance embedded processors, all of the traditional considerations still apply for performance, power, and area. But it gets much more complicated from there, depending upon the application, the system in which those processors are embedded, and the various tradeoffs that need to be made. Understanding all of this, monitoring it, and verifying everything works as expected under all known and expected conditions is a growing challenge, and it’s only getting harder as more data is processed using the same or less power than in the past.
