Functional verification is going through many changes, and one of EDA’s leading investors sees lots of new possibilities for startups.

Jim Hogan, managing partner of Vista Ventures, LLC, is perhaps the best-known investor in the EDA space. Recently, he has been focusing time and attention on verification startups, including cloud technology company Metrics, and Portable Stimulus pioneer Breker Verification Systems. This adds to his longer-term commitment to formal verification with OneSpin Solutions. These companies are part of a plan that Hogan calls Verification 3.0. Semiconductor Engineering obtained an exclusive early draft of this plan and shared it with leading EDA companies to gauge their reaction.

The migration to Verification 3.0 is well underway, and Verification 2.0 quickly will become relegated to simpler tasks for increasingly smaller fractions of lagging-edge designs.

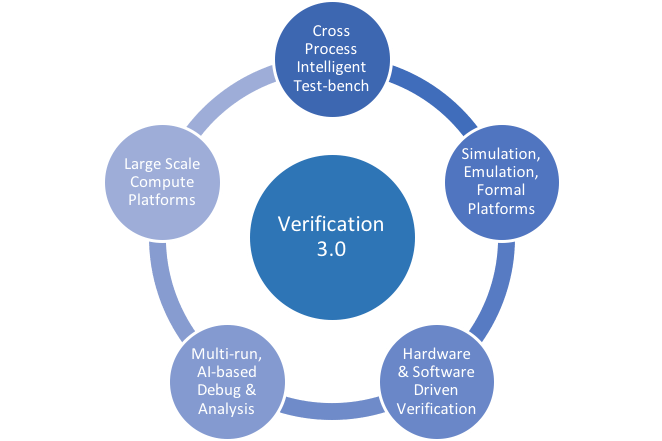

Never have so many changes converged in such a narrow time frame, making a new verification methodology essential. Verification 3.0 has five foundations, not all of which are fully developed today. Included in that list are the continuum of verification engines, the era of large-scale computing, the intelligent testbench, the merging of hardware and software, and the expanding role of verification.

Each of these has multiple facets, and while some themes may sound familiar, each one is seeing significant transformation.

Fig. 1: Verification 3.0. Source: Jim Hogan, Vista Ventures, LLC.

Continuum of verification engines

Verification 1.0 was dominated by the simulator running on individual workstations. Verification 2.0 added emulation and formal verification as mainstream technologies, making use of simulation farms. Early work toward Verification 3.0 has seen the creation of hybrid verification platforms combining the best of simulation and emulation, with formal accelerating components of the flow.

Verification 3.0 goes a lot further, however. FPGA prototyping and real silicon must be brought into the continuum of verification engines, and the way in which these technologies are delivered must change. No longer will companies own and maintain all the compute resources necessary to meet peak demand, which means that distributed and Cloud-based models will play an increasingly important role.

This requires rearchitecting the engines, changing the business model and rethinking aspects of the verification flow.
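The peak-demand argument can be made concrete with a little arithmetic. The sketch below is illustrative only: the weekly job counts, the capacity figures and the function names are assumptions invented for this example, not data from Hogan's draft. It splits each day's regression load into jobs that fit on owned machines and overflow that would have to run elsewhere, such as in the Cloud.

```python
def split_demand(daily_jobs, owned_capacity):
    """Split each day's jobs into locally run work and overflow.

    daily_jobs: jobs requested per day (hypothetical numbers).
    owned_capacity: jobs per day the in-house farm can run.
    """
    local = [min(jobs, owned_capacity) for jobs in daily_jobs]
    overflow = [max(jobs - owned_capacity, 0) for jobs in daily_jobs]
    return local, overflow


def utilization(daily_jobs, owned_capacity):
    """Fraction of owned capacity actually used over the period."""
    local, _ = split_demand(daily_jobs, owned_capacity)
    return sum(local) / (owned_capacity * len(daily_jobs))


# Hypothetical week of regression demand with a spike before a milestone.
demand = [200, 220, 210, 900, 1000, 230, 200]

# Sizing the farm for the peak leaves most of it idle on ordinary days.
peak_sized = utilization(demand, owned_capacity=1000)

# Sizing for typical days keeps the farm busy and pushes the spike elsewhere.
typical_sized = utilization(demand, owned_capacity=250)
_, overflow = split_demand(demand, owned_capacity=250)
```

With these made-up numbers, a farm sized for the peak is used well under half the time, while a farm sized for ordinary days stays close to fully used and sends only the two spike days out as overflow. Real sizing decisions would also need to account for licensing, data transfer and turnaround time, none of which this sketch models.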

The intelligent testbench

The testbench has gone through several transitions. For Verification 1.0, directed testing was the primary method used. This was high in human costs, so Verification 2.0 saw the introduction of automation, culminating in SystemVerilog and UVM driving a constrained random approach. That approach has proven to be of decreasing value as its compute costs climb.
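The difference between the two styles can be sketched in a few lines. This is a minimal illustration in Python rather than SystemVerilog or UVM, and everything in it is assumed for the example: the saturating adder used as a stand-in design, the constraint and the function names are all hypothetical.

```python
import random


def saturating_add(a: int, b: int, width: int = 8) -> int:
    """Reference model for a hypothetical saturating adder (stand-in design)."""
    return min(a + b, (1 << width) - 1)


def directed_tests():
    """Directed style: an engineer writes every stimulus by hand."""
    return [(0, 0), (1, 1), (255, 1), (255, 255)]


def constrained_random_tests(count: int, seed: int, width: int = 8):
    """Constrained random style: draw stimulus at random under a constraint.

    The constraint (a + b within 16 of the saturation limit) is a made-up
    example that steers stimulus toward the overflow boundary. Candidates
    that violate it are simply discarded, which costs extra compute.
    """
    rng = random.Random(seed)
    limit = (1 << width) - 1
    tests = []
    while len(tests) < count:
        a = rng.randint(0, limit)
        b = rng.randint(0, limit)
        if abs((a + b) - limit) <= 16:  # keep only stimulus near the boundary
            tests.append((a, b))
    return tests


def check(tests, width: int = 8):
    """Confirm the model's result never exceeds the saturation limit."""
    limit = (1 << width) - 1
    return all(saturating_add(a, b, width) <= limit for a, b in tests)
```

Calling `constrained_random_tests(20, seed=1)` yields 20 stimulus pairs without anyone writing them by hand, and the same seed reproduces the same pairs. The discard loop is a crude stand-in for a real constraint solver, but it hints at the trade described above: human effort falls, and the compute spent finding useful stimulus rises.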

Development of Verification 3.0 started about 10 years ago with what Gary Smith of Gary Smith EDA termed the Intelligent Testbench. Today, we are seeing mature tools in this area and the imminent release of the Accellera Portable Stimulus Standard, which will enable portability of test intent among vendors.

For the first time, the chip design world is witnessing a true executable intent specification driving the entire verification process. Many aspects of the flow, including debug, will be transformed.

Merging of hardware and software

A typical design during Verification 1.0 was a block that was likely a peripheral or function attached to an external processor. During Verification 2.0, continued integration saw complete systems being placed onto a single piece of silicon, the complexity of which was only constrained by Moore’s Law. Today, that is slowing down. As a result, companies no longer can rely on scaling to pack in more functionality. They must start getting a lot more creative.

A typical chip contains a significant number of deeply embedded processors that rely on firmware to provide their functionality. The system integration task is bringing together many of these firmware engines along with general purpose CPUs, GPUs and, increasingly, FPGA resources into the platform, all of which need to be able to work together to provide complex functionality. SoC verification is shifting from hardware to software-driven verification where tests are built into C code running on the processors.

Portable Stimulus has a large role to play here. Verification at this level is not just about hardware execution (e.g. cache coherency), but also software functionality.

Expanding role of verification

Up until Verification 3.0, the only thing that most teams had to worry about was functionality. Today, that is often the easy part of what the verification team is tasked with. Teams are taking on additional roles in power verification, performance verification and, increasingly, safety and security requirements. Systematic requirements tracking and reliability analysis are central verification tasks for many designs. Path tracing through the design becomes a core verification necessity. Formal has an expanding role to play, as well. In addition, it is a technology that can offload tasks from dynamic execution engines and prune the state space that tools have to cover. Design debug and profiling, together with multi-run analysis, are moving up in abstraction in an attempt to leverage artificial intelligence on large data sets to reduce the extensive manpower required. The industry does not have all the answers to this leg yet, but some of the underpinnings are being put in place.

No one company can tackle the entire Verification 3.0 challenge. Traditional EDA companies have a role to play, particularly in foundational technologies such as emulation and FPGA prototyping platforms, which require a capital investment that is beyond the means of startups.

But startups have much more innovative ideas and the ability to turn on a dime in response to market needs and business models. These are the companies most likely to spearhead the direction that the technology takes, and Verification 3.0 will see greater cooperation among them. Additional technologies are becoming available, such as machine learning, and these have a large role to play in debug, verification analysis, bug triage and many other aspects of the flow.

Industry reaction

The response from the industry has been muted.

“I didn’t get answers and I’m disappointed,” says Magdy Abadir, vice president of corporate marketing for Helic. “The discussion on the expanding role of verification reads like the overview of any verification conference (expanding verification tasks: power verification, formal verification, EM verification, security verification, debug, etc.) and FPGA prototyping has been around for ages. Saying that there are (and there will be) new architectures in these types of engines is pretty expected in every hardware or software we use.”

Frank Schirrmeister, senior group director for product management and marketing at Cadence Design Systems, agrees. But he does see value in putting such a statement together. “There is nothing groundbreaking or new in here, but it is a nice thinking model and structure. It provides a framework for him to bring together the different companies in which he is actively involved.”

One area in which Schirrmeister is not completely in agreement is the statement that ‘Verification 2.0 quickly will become relegated to simpler tasks for increasingly smaller fractions of lagging-edge designs.’ “If you look at the number of design starts, there is a clear bifurcation in trailblazing designs at the very high end, and there is a growing number of smaller designs, especially the smaller IoT type designs, which may not be an increasingly smaller fraction as stated. The question becomes, ‘Which technologies of Verification 2.0 or Verification 3.0 do they need?’”

Indeed, it is likely that Verification 2.0 will remain the cheaper solution for some time. “SystemVerilog constraint solvers are reasonably commoditized,” points out Mark Olen, product marketing group manager for Mentor, A Siemens Business. “They are essentially free because they are bundled into the simulators. (For further commentary on this see Commoditizing Constraints.) It will be several years before Portable Stimulus tools become free.”

The other factor is that legacy has a very long tail. “Not everyone is in agreement about what will come out of Portable Stimulus,” says Harry Foster, chief scientist at Mentor. “In the long term it provides a clear separation between the ‘what’ and the ‘how’. The what defines the intent, and the how can happen through automation. A company that has a lot of legacy associated with their older IP will have to think carefully, because it will cost them to come up with a new solution to make the how work.”

Another possibility is migration in the extreme. “Logic tells me there is a good chance that testbench 3.0 could supplant and obsolete 2.0, but I have seen people burned so many times presuming the future,” adds Olen. “We presumed that once SystemVerilog came out that proprietary languages such as e would go away. That has not happened. I would build something into a business model that assumes some people won’t be leaving constrained random testing behind.”

Hogan didn’t actually claim anything was new, but he did point out that the rate of change in verification tools and flows may be accelerating and that many of the technologies addressing these are converging. New methodologies would be required and precipitated by the technologies that are becoming available.

Schirrmeister doesn’t buy this. “I went back to the ITRS tables, and there have always been a lot of changes from the thin skinny engineer to IP reuse because ESL wasn’t ready. The rate of change has always been fast, and it is hard to see if it is any faster than in the past. Looking back, the number of changes we did in verification over the past 20 years has been huge. It is not that anything happened suddenly, and verification had to change. It always has been in transition with the adoption of new requirements.”

One part of Hogan’s view is for closer integration between hardware and software. The industry has been looking to enable this for more than two decades, with only limited success. Mentor acquired Microtec Research back in 1996 thinking that the two would move closer.

“There are a couple of reasons why EDA hasn’t really moved into software yet,” points out Olen. “I am talking about software development and debug, and not verifying system-level hardware with software. We haven’t moved into verifying software, and the main reason for that is speed. Software developers will not run on something as slow as an emulator. They don’t even like running on an FPGA prototype. They want to run on silicon at full speed. The other challenge is economics. The culture in the world of software is that tools should be free. When we bought software development tools we quickly learned that it is an entirely different business model, and if you try and sell a product for more than $995 you will have a challenge.”

Cloud adoption within EDA, meanwhile, has gone through several fits and starts. “The use of cloud computing is happening everywhere in our daily lives,” says Abadir. “There are IP concerns that are slowing this trend in the EDA/verification space, and I didn’t read anything that addresses this or offer relief.”

However, the migration to the Cloud may be inevitable. “Small- and medium-sized companies have to do this,” says Foster. “They have to verify all of the new layers of requirements to get the job done, and for them it is purely a cost benefit. The motivation for the big guys moving is that datacenter operating costs are 3X the actual capital cost of the datacenter. Their motivation is also cost, but for a different reason. I don’t see it as a technology challenge as much as business model issue.”

Olen adds, “The challenge is less on the engines and more on the platform implication. Where do you split the processing between local and Cloud when you are running regressions? How do you download debug data? How do you dump waveforms? These things can have significant performance impact. So while the engines do not change much, the handling of data and the splitting of tasks between remote and local is a challenge. Where do you collect coverage?”

While the industry may see little new in Verification 3.0, Hogan clearly sees that the existing solutions are not adequate for the industry and is willing to put money into new companies that believe they have a different and better way to address the problems. At the same time, it is also possible that the established companies already have, or are working on, their own solutions to these issues, which they have not yet made public.

Semiconductor Engineering readers have sometimes voiced their concerns in comments that EDA companies tend to protect what they have rather than investing in new technology. It is unlikely that existing EDA companies are standing still. Time will tell.

For more on Verification 3.0, OneSpin’s Sergio Marchese argues that coming changes to verification shouldn’t ignore the human factor.
