Minimizing power consumption for a given amount of work is a complex problem that spans many aspects of the design flow. How close can we get to achieving the optimum?

Power has become a significant limiter for the capabilities of a chip at finer geometries, and making sure that performance is maximized for a given amount of power is becoming a critical design issue. But that is easier said than done, and the tools and methodologies to overcome the limitations of power are still in the early definition stages.

The problem spans a large swath of the design flow including software, architecture, design and implementation. It could be viewed as the ultimate example of shift left because in order to solve the problem, the highest level of software needs to be aware of the lowest-level details such as the layout of the hardware. It also has to cover timeframes large enough to understand the thermal impact of the execution of software. Without that connection in place, it is possible that bad decisions could get made with unexpected consequences.

Today, isolated pieces of a solution are emerging, with the hope that a complete flow may be possible in the future. Coordinating those activities is left to individual participants who work in multiple standards areas. Encouraging signs are on the horizon, but there is a long way to go before this becomes a reality.

Defining the Problem

Not all chips are affected equally. “In many applications there are large sub-systems that dominate power consumption,” says Drew Wingard, CTO at Sonics. “There may be a programmable sequencer or a small microcontroller, but its role is to set things up and let the hardware do whatever it is supposed to do. This is typically a smaller fraction of the overall power and the bulk of the energy goes into the data paths.”

Increasing numbers of systems these days include a growing array of processors, and that means software is intimately involved in power. “One has to reduce activity, exploit idle times, make use of sleep/low-power modes and carefully do voltage/frequency scaling,” explains Abhishek Ranjan, director of engineering at Mentor, a Siemens Business. “Designers also have been playing with the precision of their algorithms to reduce the amount of work that needs to be done.”

The slowdown in Moore’s Law is increasing the attention in this area. “The cost of developing a chip on the latest process nodes is so high that the design must extract as much performance as possible,” points out Oliver King, chief technology officer for Moortec. “Performance usually involves power optimization, which means reducing the power consumption as much as possible to perform a given function. Traditional techniques leave too much potential on the table, and so adaptive voltage scaling and dynamic frequency scaling techniques are being adopted by a much wider part of the industry than was previously the case.”
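To see why voltage and frequency scaling are attractive, consider the textbook CMOS switching-power approximation, P = a * C * V^2 * f. The Python sketch below applies it to two operating points. The effective capacitance and both operating points are made-up numbers, and leakage is ignored, so the result shows a trend rather than a prediction for any real chip.

```python
def dynamic_power(c_eff, voltage, freq_hz, activity=1.0):
    """Switching power in watts: activity * C * V^2 * f (leakage ignored)."""
    return activity * c_eff * voltage ** 2 * freq_hz


def switching_energy(c_eff, voltage, cycles, activity=1.0):
    """Switching energy in joules for a fixed number of cycles.

    Frequency cancels out: the same work costs the same switching
    energy at any clock rate, so only the supply voltage matters here.
    """
    return activity * c_eff * voltage ** 2 * cycles


# Hypothetical values chosen only for illustration.
C_EFF = 1e-9          # effective switched capacitance, farads
WORK_CYCLES = 1e9     # cycles needed to finish the task

for volts, hertz in [(1.0, 2e9), (0.8, 1e9)]:
    power = dynamic_power(C_EFF, volts, hertz)
    energy = switching_energy(C_EFF, volts, WORK_CYCLES)
    runtime = WORK_CYCLES / hertz
    print(f"{volts:.1f} V @ {hertz / 1e9:.1f} GHz: "
          f"{power:.2f} W, {energy:.2f} J, {runtime:.2f} s")
```

Lowering the frequency alone cuts power but not the switching energy per task. The energy saving comes from the lower voltage that the slower clock permits. In a real design, leakage energy grows with runtime, so the slowest operating point is not automatically the most efficient one.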

This means that power consumption affects software scheduling due to thermal limits of the chip. This completes a complex cycle in that software performance is directly impacted by the thermal management circuitry on a chip, which is dependent on the total activity levels over a significant period of time.

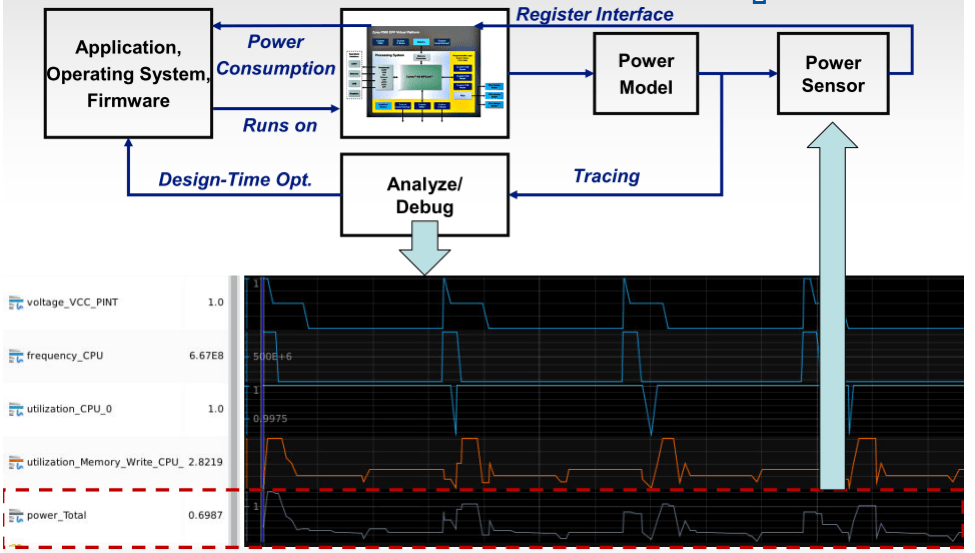

Figure 1: Multiple loops associated with system-level power optimization. Source: Imperas.

The status today

Today, tools concentrate primarily on the power infrastructure and low-level optimization. “High-level decisions impose a power-management infrastructure (power domains, islands etc.) and within the confines of these domains fine-grained strategies can provide significant improvement on power,” explains Ranjan. “Techniques like sequential clock gating, operand isolation, circular buffers, flop sharing, retiming, re-pipelining and memory gating can provide significant power savings and are independent of the power-management decisions.”

But that leaves a large gap between SoC implementation and software. “It is not possible to do system-level power analysis using detailed implementation methods,” points out Tim Kogel, solution architect for Synopsys. “With implementation tools you can only do it for a very short period of time and for a small piece of the design, such as an IP block. At the system-level we have to look at the whole thing executing product-level use cases. What is the application doing over a longer period of time? That requires methods such as early specification, virtual prototypes for architecture definition or virtual prototypes for software development where you can make the software developer aware of the impact of the software on power consumption. Later it may be prototyping using FPGAs where you can again run real software use cases for a longer amount of time.”

Both are necessary. “If you don’t shut-down an unused block, no amount of fine grained clock-gating can compensate for the power overhead of that high-level mistake!” admits Ranjan. “Even if you have done power-intent planning at a high-level but do not clock-gate the registers enough, you will still have power issues!”

Feedback loop

One of the big problems is accuracy. “You can synthesize down to the gate level and annotate from a .lib file to calculate the power consumption as activated by the software or you can use RTL power estimation tools to estimate what the implementation would be,” says Frank Schirrmeister, senior product management group director in the System & Verification Group of Cadence. “Some people do annotation of power information to virtual platforms. Now you have a block that may be in different states and you know the power consumption in each state. This provides a high-level view of power consumption. We can also measure the activity locally at the emulation level, which also provides a relative assessment. You can optimize a couple of things, including how many memory accesses your software makes, and memory power consumption can be significant because it goes off-chip.”
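The state-based annotation Schirrmeister describes can be pictured with a small Python sketch: each block has a power number per state, and a trace of which block was in which state for how long is turned into an energy estimate. The component names, states and milliwatt values are hypothetical placeholders, not data from any tool or vendor.

```python
# Hypothetical per-state power annotations, in milliwatts.
POWER_MW = {
    "cpu":  {"off": 0.0, "idle": 20.0, "active": 300.0},
    "dram": {"self_refresh": 10.0, "active": 400.0},
}


def energy_mj(trace, power_table):
    """Sum energy per component from a state trace.

    trace: iterable of (component, state, duration_ms) tuples.
    Returns a dict mapping component name to energy in millijoules.
    mW * ms gives microjoules, hence the division by 1000.
    """
    totals = {}
    for component, state, duration_ms in trace:
        power_mw = power_table[component][state]
        totals[component] = totals.get(component, 0.0) + power_mw * duration_ms / 1000.0
    return totals


# A made-up 100 ms window, as a virtual platform might record it.
trace = [
    ("cpu", "active", 10), ("cpu", "idle", 90),
    ("dram", "active", 5), ("dram", "self_refresh", 95),
]

for component, mj in energy_mj(trace, POWER_MW).items():
    print(f"{component}: {mj:.2f} mJ")
```

The estimate is only as good as the per-state numbers, which is why they are usually refined with data from RTL estimation, emulation or previous silicon.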

It is impossible to estimate power without including low-level information. “Models should be aware of the target technology,” says Ranjan. “Typically, this means a lot of pre-characterization and calibration effort would be required to achieve some reasonable accuracy. However, models tend to be grossly wrong, and in general they are only marginally better than rule-of-thumb or experience based estimates. Any system-level decision based on crude models can backfire and adversely impact power. It has been shown that RTL is the first stage where power numbers can be generated with reasonable accuracy (within 15% of backend power numbers). However, getting RTL early at system design stage is not feasible.”

Does this mean that early power analysis is useless? “People have a power budget, and using a virtual model the requirements can be annotated,” says Schirrmeister. “That provides a first level of understanding of power consumption. You refine this as you get more data, or from data produced from previous projects. You want to avoid a software bug where you forget to switch off things in the chip that may cause overheating, so you also have some built-in security to make sure the power and thermal budget will never be exceeded.”

And that creates a complex feedback loop, which involves finding the maximum power and thermal scenarios. “The realistic worst-case power means you know how much power each component consumes in each operating mode, and a scenario that determines which component is in which state at any moment in time,” explains Kogel. “What is the relevant set of scenarios? That is a non-trivial exercise.”
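Kogel's definition of realistic worst-case power can be made concrete in a few lines of Python. The sketch below takes a table of power per component and operating mode, plus a set of scenarios that say which component is in which mode during each phase, and reports the scenario with the highest peak. All names and wattages are invented for illustration. Choosing which scenarios to include is the hard part, and the code does not address it.

```python
# Hypothetical power per component and operating mode, in watts.
MODE_POWER_W = {
    "cpu":   {"sleep": 0.01,  "idle": 0.1,  "run": 1.5},
    "gpu":   {"sleep": 0.01,  "idle": 0.2,  "run": 2.5},
    "modem": {"sleep": 0.005, "idle": 0.05, "run": 0.8},
}

# Each scenario is a list of phases; a phase maps component -> mode.
SCENARIOS = {
    "standby":    [{"cpu": "sleep", "gpu": "sleep", "modem": "sleep"}],
    "video_call": [{"cpu": "run", "gpu": "idle", "modem": "run"}],
    "gaming":     [{"cpu": "idle", "gpu": "idle", "modem": "idle"},
                   {"cpu": "run", "gpu": "run", "modem": "idle"}],
}


def phase_power(phase):
    """Total power of one phase: the sum over all components."""
    return sum(MODE_POWER_W[comp][mode] for comp, mode in phase.items())


def peak_power(scenario):
    """Highest phase power seen anywhere in the scenario."""
    return max(phase_power(phase) for phase in scenario)


def worst_case(scenarios):
    """Return (name, watts) of the scenario with the highest peak."""
    name = max(scenarios, key=lambda n: peak_power(scenarios[n]))
    return name, peak_power(scenarios[name])


name, watts = worst_case(SCENARIOS)
print(f"worst case: {name} at {watts:.2f} W")
```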

Again this can be dependent on very low-level variation. “I could run assuming best case transistor conditions, and if my transistors have more leakage or I am running hotter, then one or more temperature sensors around the chip will provide an alarm when it is getting too hot,” says Wingard. “As a result, I will throttle back performance to stay within the thermal limit. This is a feedback loop that defines the worst-case power dissipation as a side effect of the highest temperature that it is safe to run the die at.”

How can you estimate maximum performance without doing this analysis? “Software teams or architects have to select which OS power governor should be used and the thresholds at which you want to scale up or down performance,” explains Kogel. “You are trading off power and thermal issues against performance. If you turn down too early you may lose out on benchmarks, but if it gets too hot you may have a disaster on your hands. We have to make the architect and the software developers aware of the power and thermal aspects. The dilemma is that you are making big-ticket decisions with very little information. Power and thermal is dependent on physical implementation parameters and the modeling of these is still emerging.”
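The threshold trade-off can be explored with a toy simulation. The Python sketch below couples a first-order thermal model (die temperature relaxing toward ambient plus power times thermal resistance) to a simple governor that steps down one operating point when the die exceeds a hot threshold and steps back up below a cool threshold. Every number here is an assumption made for illustration: the operating points, thermal resistance, time constant and thresholds. The governor logic is a generic hysteresis scheme, not any particular OS governor.

```python
# Hypothetical operating points: (frequency in Hz, power in W).
OPPS = [(0.6e9, 0.8), (1.2e9, 2.0), (2.0e9, 4.5)]


def simulate(t_hot, t_cool, steps=600, dt=1.0,
             t_amb=25.0, r_th=20.0, tau=30.0):
    """Run the governor for steps * dt seconds.

    Returns (total_cycles, peak_temperature_c).
    r_th is thermal resistance in C/W, tau the thermal time constant in s.
    """
    temp = t_amb
    level = len(OPPS) - 1        # start at the fastest operating point
    work = 0.0
    peak = temp
    for _ in range(steps):
        freq, power = OPPS[level]
        work += freq * dt
        # First-order model: relax toward the steady-state temperature.
        steady = t_amb + power * r_th
        temp += dt * (steady - temp) / tau
        peak = max(peak, temp)
        # Hysteresis governor: throttle when hot, recover when cool.
        if temp > t_hot and level > 0:
            level -= 1
        elif temp < t_cool and level < len(OPPS) - 1:
            level += 1
    return work, peak


for t_hot, t_cool in [(85.0, 75.0), (70.0, 60.0)]:
    work, peak = simulate(t_hot, t_cool)
    print(f"hot={t_hot:.0f} C cool={t_cool:.0f} C: "
          f"{work / 1e9:.0f} Gcycles, peak {peak:.1f} C")
```

With these assumed parameters the lower thresholds keep the die cooler but complete less work in the same time, which is the benchmark-versus-disaster trade-off described above.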

Schirrmeister agrees. “It would be nice to have a pre-silicon model that would enable optimization of software, but in reality we are just watching when thermal effects kick in. This makes scheduling a lot more complicated.”

For real-time systems that can be a significant issue. “You can statically allocate the real-time processes onto a different processor, then move the more dynamic processes—where you can afford to slow down—to other processors,” says Wingard. “Many times, they have a best-effort service model.”

Emerging solutions

There are several isolated standards efforts today that are looking at aspects of the problem. “I don’t think there is a master plan, but the plan is obvious in a sense that hardware/software integration people need one big system model that would represent everything,” says Schirrmeister. “And we all know, and have learned the hard way, that the universal system model does not exist. It is too complicated to create.”

One piece of the puzzle may emerge from a standard in development that is not even being targeted towards the problem today. “Portable Stimulus is geared towards functional verification at the moment,” says Kogel. “For power, it can make sense to use its definition of a scenario that can be used throughout a flow. It defines an activity profile, or workload graph, and this can be run on a VP for architectural power analysis. It can be used to define which types of low-power features make sense and the power domains and power management strategy. Today, all of that information is lost. Later you try and reproduce it with real software, and that is a scary phase. When the chip comes back, the software starts to run, and you find out the power numbers are not close to what was specified. By using Portable Stimulus and keeping the scenario description you can verify the power scenarios at each level and run these on a physical prototype and even real silicon. This helps to have a seamless verification process to ensure that the power consumption for key scenarios is always within the specified range.”

But Portable Stimulus may go much further than this. “Portable Stimulus is the first standard that provides a model of verification intent,” says the CEO of Breker. “That intent is modeled using graph-based notions, which could be annotated with all kinds of information. Today, we can generate a theoretic schedule for multiple tasks running on the embedded processors within an SoC. After simulation, we can annotate the actual timing achieved by the system. The same thing could be done for power, and this could help to identify the highest-power scenarios that could be used to drive the rest of the flow.”

When two executable specifications exist, one notionally represents the design specification and the other the requirements. Finding the right place, or places, for each piece of information becomes important. “We want to enrich the prototype, either virtual or physical, with power information,” says Kogel. “This is standardized in UPF today and contains the concept of component-level power models, which is an overlay to an environment that models system execution. The power model observes the activity of each component in the system while executing a use case. Early on that could be an abstract scenario but later when software becomes available, it could be the real software.”

Another standard, IEEE P2415, aims to provide a unified abstraction layer, in an attempt to bring what the Advanced Configuration and Power Interface (ACPI) does for the server market into the embedded systems world. “It provides a standard view of the power capabilities of all of the hardware blocks,” explains Kogel. “This gives an intermediate layer between the hardware and the high-level OS power management. That is a large productivity boost for the software developers as it enables some automation for manipulating the low-level power state machines. When the OS says change the voltage to this, or power down this component, there are a lot of hardware signals that need to wiggle before that actually happens. This is a complex and error prone task. This standard will help to make all of the hardware capabilities accessible to the software.”
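The gap between a one-line OS request and the hardware signals behind it can be pictured with a short Python sketch. This is not the IEEE P2415 interface. The class, the step names and their ordering are hypothetical, loosely following common power-gating practice (stop the clock, isolate outputs, save state, then remove power, and the reverse on wake-up).

```python
# Hypothetical low-level sequences; real designs differ in steps and order.
POWER_DOWN_STEPS = ["gate_clock", "enable_isolation",
                    "save_retention", "open_power_switch"]
POWER_UP_STEPS = ["close_power_switch", "restore_retention",
                  "disable_isolation", "ungate_clock"]


class PowerDomain:
    """Toy abstraction layer: one high-level call, many low-level steps."""

    def __init__(self, name):
        self.name = name
        self.state = "on"
        self.log = []            # records every low-level action taken

    def _do(self, step):
        # A real driver would write control registers here.
        self.log.append(f"{self.name}:{step}")

    def request(self, target):
        """Move the domain to 'on' or 'off', running the full sequence."""
        if target == self.state:
            return               # already there, nothing to wiggle
        if target == "off":
            steps = POWER_DOWN_STEPS
        elif target == "on":
            steps = POWER_UP_STEPS
        else:
            raise ValueError(f"unknown power state: {target}")
        for step in steps:
            self._do(step)
        self.state = target


gpu = PowerDomain("gpu")
gpu.request("off")               # what the OS asks for
print("\n".join(gpu.log))        # what the hardware has to do
```

Keeping the sequences in one table, rather than scattered through driver code, is the kind of automation a standard description of hardware power capabilities is meant to enable.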

Today, most design teams have to rely on their experience and on the results of the previous generation of silicon. However, in a world where blindly following Moore’s law is over, this may not be enough. Finding better solutions may require much better analysis and optimization tools that span the entire development flow.

Brian, you might want to explore power distribution network analysis. All “HW” regardless of IC, Package or PCB, must be supplied with a power network. The large RLC network with sinks and sources simulated via Freq Domain will show anti-resonances where a target impedance is exceeded AND if these anti-resonances are within the normal operating frequencies will cause significant power dissipation at those frequencies. All the other power logic optimizations are using this grid. Construct a very good PDN foundation then start your various logic optimization/gating as well as changing application code. You could do all of the above correctly but if that PDN grid is poorly designed with high impedance, you will still have issues.

Prof. Swaminathan has written a book that discusses PCB/Package Power Integrity and then a second book about 2.5/3D design/analysis and modeling. Both excellent books.

While this relates to getting the necessary power to the parts of the chip as needed, it does not address what throughput may be possible for a given amount of power and if that is sustainable over time. These are the problems that have to be solved using a combination of both hardware and software and today we have very few tools that can help.