Interconnects, bonding and the flow of data in advanced packaging.

The best way to improve transistor density isn’t necessarily to cram more of them onto a single die.

Moore’s Law, in the form usually cited, states that device density doubles about every two years while cost remains constant. It relied on the observation that the cost of a processed silicon wafer remained constant regardless of the number of devices printed on it. That, in turn, depended on lithographers’ ability to maintain or improve throughput, in wafers per hour, as the number of fields per wafer increased.

For several years now, that premise has no longer held. Multiple patterning schemes and EUV lithography have driven up the per-wafer cost of leading-edge process technologies. The number of process steps is increasing, process throughput is lagging behind, and the end result is that a wafer with 28nm features costs less than one with 14nm features.
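The economics can be made concrete with a little arithmetic. The sketch below uses made-up wafer costs and device counts (not foundry data) to show that cost per device falls only when density grows faster than wafer cost. `ProcessNode` and `cost_ratio` are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class ProcessNode:
    """A hypothetical process node; the numbers used below are illustrative only."""
    name: str
    wafer_cost: float         # cost of one fully processed wafer, arbitrary units
    devices_per_wafer: float  # devices printed on one wafer

    def cost_per_device(self) -> float:
        return self.wafer_cost / self.devices_per_wafer


def cost_ratio(old: ProcessNode, new: ProcessNode) -> float:
    """New cost per device divided by old; below 1.0 means the shrink paid off."""
    return new.cost_per_device() / old.cost_per_device()


if __name__ == "__main__":
    base = ProcessNode("older node", wafer_cost=1000.0, devices_per_wafer=1e9)
    # Classic scaling: density doubles while the wafer cost stays flat.
    classic = ProcessNode("shrink, flat wafer cost", 1000.0, 2e9)
    # Multiple-patterning/EUV era: density doubles, but wafer cost more than doubles.
    costly = ProcessNode("shrink, costlier wafer", 2200.0, 2e9)
    print(f"{classic.name}: {cost_ratio(base, classic):.2f}")  # 0.50
    print(f"{costly.name}: {cost_ratio(base, costly):.2f}")    # 1.10
```

With these invented inputs, the second shrink makes each device about 10% more expensive even though twice as many fit on a wafer.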

At the same time, the rise of cellphones, tablets, and the vast array of Internet of Things devices is making integrated circuit products more diverse. While scaling still offers clear advantages for memory and logic, an increasing fraction of most system designs is made up of sensors, transceivers, and signal processors of various kinds. These components consume relatively large amounts of silicon area and do not see the same benefits from smaller process nodes. A sensor’s minimum size is driven by the physics of the sensing operation, for example. Transferring these devices to smaller process technologies offers few performance benefits. It can even degrade yield, which depends on device area and the number of process steps.
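To see why a large die on a process with many steps yields worse, one common simplification is the Poisson yield model, Y = exp(-A * D0), where A is die area and D0 is defect density. The sketch below additionally assumes, purely for illustration, that every process step adds the same independent defect density, so D0 grows with step count. The per-step density and step counts are hypothetical.

```python
import math


def poisson_yield(area_cm2: float, defects_per_cm2_per_step: float, steps: int) -> float:
    """Fraction of good dies under a simple Poisson defect model.

    Assumption (illustrative, not a calibrated fab model): every process step
    adds the same independent defect density, so D0 = steps * per-step density
    and yield = exp(-area * D0).
    """
    if area_cm2 < 0 or defects_per_cm2_per_step < 0 or steps < 0:
        raise ValueError("inputs must be non-negative")
    d0 = defects_per_cm2_per_step * steps
    return math.exp(-area_cm2 * d0)


if __name__ == "__main__":
    sensor_area = 1.0  # cm^2; the sensor's size is set by physics, not by the node
    per_step = 0.001   # hypothetical defects/cm^2 added by each process step
    for label, steps in (("mature node", 400), ("leading-edge node", 1000)):
        print(f"{label}: yield {poisson_yield(sensor_area, per_step, steps):.2f}")
```

Because the sensor's area does not shrink, moving it to a process with more steps only raises D0, and yield drops.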

In a nutshell, this is the argument for 3D integration. Each component of a system can be fabricated using the process technology most appropriate for its specific function. Sensors can use simpler processes and more relaxed lithography, memory need not be process-compatible with logic, and so on. The whole assembly can then be combined into a single package, minimizing interconnect length and system footprint.

Yet any deviation from the conventional system-on-chip paradigm immediately raises the question of partitioning: which components belong together, and which should be processed separately? And what do you mean by “3D integration,” anyway? In some cases, for instance with optoelectronic components, the choice is easy. But usually, as Eric Beyne, Imec’s 3D program director, explained, it is inextricable from a larger discussion of system design and topology: how do data and instructions flow through the system?

The package is the partition

Any circuit is a hierarchy of components connected by wires. Rent’s Rule reminds us that the number of external connections to a given block grows as a power law of the number of components it contains. The package design, then, is defined by the required number and density of connections among the included components.
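Rent’s Rule is usually written as T = t * g^p, where g is the number of components (gates) in a block, T is the number of external connections, t is the average number of connections per component, and p is the Rent exponent. The sketch below uses illustrative default values of t and p; real values have to be fitted to a particular design.

```python
def rent_terminals(gates: float, t: float = 4.0, p: float = 0.6) -> float:
    """Estimate a block's external connections via Rent's Rule, T = t * g**p.

    t (connections per gate) and p (Rent exponent, 0 < p < 1) default to
    illustrative values, not figures from the article.
    """
    if gates <= 0:
        raise ValueError("gates must be positive")
    return t * gates ** p


if __name__ == "__main__":
    for g in (1e3, 1e6, 1e9):
        print(f"{g:.0e} gates -> ~{rent_terminals(g):,.0f} connections")
    # Doubling a block multiplies its connection count by 2**p, not by 2.
    print(f"growth on doubling: {rent_terminals(2e6) / rent_terminals(1e6):.3f}")
```

Because p is below 1, connection count grows more slowly than component count, but it still grows. Every partition boundary drawn through a system therefore has to be served by a matching number of package-level connections.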

The lowest connection density is seen in traditional single-chip packages, which have no internal connections at all and connect to the circuit board through wire bonds or an array of solder bumps. The highest density, realized so far primarily in demonstration projects like Leti’s CoolCube, is monolithic 3D, in which several active device layers are stacked to form a single unified circuit. In between lie 2.5D packages with dice bonded to an interposer layer, and stacks with independent chips connected by through-silicon vias.

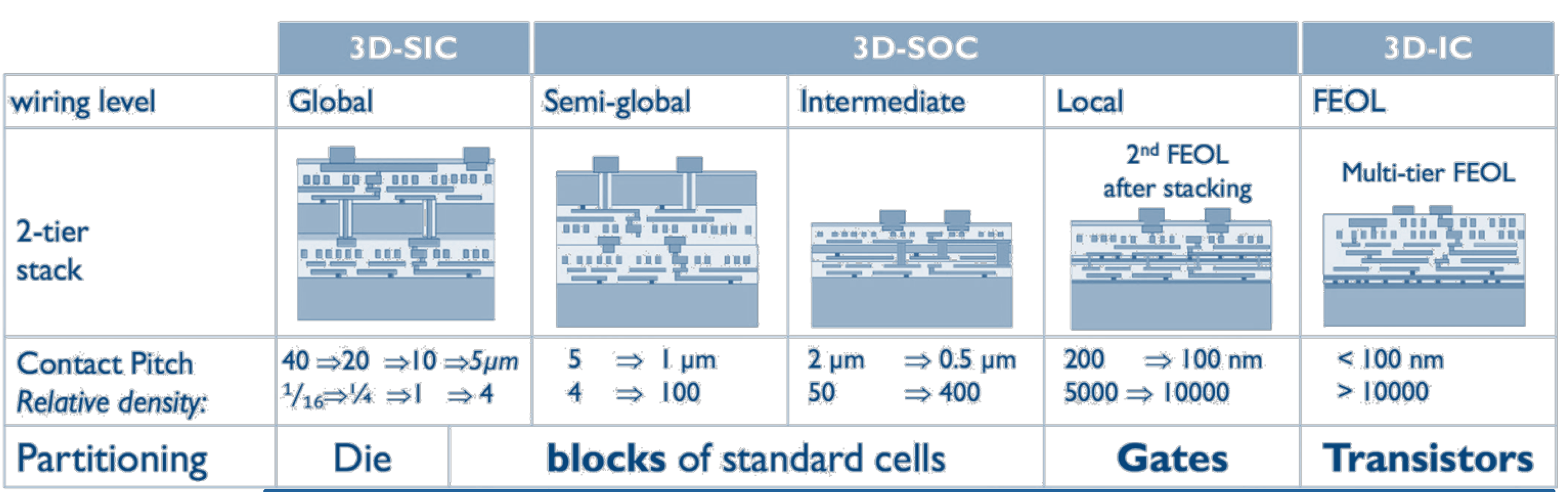

Fig. 1: 3D integration options. Source: Imec

Because of this diversity of packaging approaches, it is difficult to talk about individual process steps except in the context of a particular package design. However, all packages have some form of bonding between the components, and all need some form of thermal management.

The bonding approach, in particular, depends on the relationship between the components of the package. To use silicon area efficiently, the number of connections needs to scale with the size of the component, so a high-bandwidth memory needs to support enough connections to service the memory array. It’s therefore not surprising that memory has been one of the first components to take advantage of chip stacking.

As Beyne explained, placing a memory array next to the logic core it supports gives an average line length that depends on both the spacing between the two devices and the bump pitch on the individual dice. If instead the memory is stacked vertically on top of the logic component, the average line length is simply the vertical spacing between the two. In an Imec test vehicle experiment, a lateral high-bandwidth interconnect on an interposer had a 7mm line length between the two components, with a 3.5-micron bump pitch. With a 2 Gbit/second signal rate, the device achieved 0.57 terabits per second (Tbps) of bandwidth per millimeter of bus width. In contrast, stacking a memory array on top of the logic with a fairly relaxed 4-micron interconnect pitch gave that same 2 Gbit/second signal a bandwidth of 125 Tbps per square millimeter. The change in units is the point: side-by-side connections are confined to the edge of the die, so bandwidth grows with bus width, while stacked connections can be spread over the full die area.
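Both Imec figures can be reproduced with simple arithmetic, assuming the quoted pitch is the spacing between adjacent signal connections: along one edge for the interposer bus, and in a square grid for the stacked case. The sketch ignores power and ground connections and any signaling overhead, so treat it as a check on the scaling, not a model of the test vehicle. The function names are hypothetical.

```python
def lateral_tbps_per_mm(pitch_um: float, gbps_per_signal: float) -> float:
    """Side-by-side bus: signals line up along the die edge (1D)."""
    signals_per_mm = 1000.0 / pitch_um
    return signals_per_mm * gbps_per_signal / 1000.0  # Gbit/s -> Tbit/s


def stacked_tbps_per_mm2(pitch_um: float, gbps_per_signal: float) -> float:
    """Vertical stack: connections fill a square grid over the die area (2D)."""
    signals_per_mm2 = (1000.0 / pitch_um) ** 2
    return signals_per_mm2 * gbps_per_signal / 1000.0  # Gbit/s -> Tbit/s


if __name__ == "__main__":
    print(f"interposer: {lateral_tbps_per_mm(3.5, 2.0):.2f} Tbps/mm")    # 0.57
    print(f"stacked:    {stacked_tbps_per_mm2(4.0, 2.0):.0f} Tbps/mm^2")  # 125
```

Because the stacked figure scales with the inverse square of pitch, halving the pitch quadruples bandwidth density under this model, which is why the tighter pitches of wafer-to-wafer bonding matter.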

Using wafer-to-wafer bonding, rather than conventional chip stacking, allows a tighter interconnect pitch and even greater bandwidth. Functional partitioning of a multicore processor might place the cache memory directly above each individual core. Power and ground lines on the backside of the core logic could then connect to the device’s external interconnects. Moving the power distribution network to the backside would also simplify the signal connections on the front side, in itself an immediate performance boost. Finally, the complete computing module could be attached to an interposer along with communications components, an advanced compute-in-memory accelerator, or other elements.

The density of connections in a heterogeneous package like this also determines which elements are manufactured at which facilities. Through-silicon vias and wafer-to-wafer bonding typically require the process and cleanroom capabilities of a conventional fab. Flip chip and wire bonding to a simple interposer are within the reach of most OSATs (outsourced semiconductor assembly and test providers). In between, as signal redistribution layers become more complex and incorporate capacitors and other passive components, feature sizes begin to approach those of the upper interconnect layers in the fab, according to Thomas Uhrmann, EV Group director of business development. The process and cleanroom requirements fall into an intermediate realm, not as demanding as the transistor process but cleaner than conventional packaging.

After bonding, the next important challenge for all forms of 3D integration is cooling. Dingyou Zhang, a member of the technical staff for quality and reliability at GlobalFoundries, observed that electrical proximity and thermal proximity go hand in hand. Heat dissipation issues are a major obstacle to acceptance of these integration schemes. In some designs, microfluidic channels in the interposer might allow convective cooling. More closely integrated components, like the stacked processor discussed above, might require a direct spray of either forced air or a liquid coolant. The package designer will need to be aware of potential circuit hotspots in order to design appropriate cooling systems.
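Why stacking tightens the thermal budget can be seen with a one-dimensional thermal-resistance sketch: heat from a die buried in a stack must cross every layer above it, and each of those paths also carries the heat of the dies above. The model below ignores lateral spreading and uses hypothetical power and resistance values, so it illustrates the trend only.

```python
from typing import List, Sequence


def die_temperature_rises(
    powers_w: Sequence[float], path_r_k_per_w: Sequence[float]
) -> List[float]:
    """Temperature rise of each die above the coolant in a vertical stack.

    Index 0 is the die farthest from the heat sink; the last index is nearest.
    path_r_k_per_w[i] is the thermal resistance from die i to the next die up
    (for the last die, to the coolant). Lumped 1D model: all heat flows toward
    the heat sink, and lateral spreading is ignored.
    """
    if len(powers_w) != len(path_r_k_per_w):
        raise ValueError("need one path resistance per die")
    rises = [0.0] * len(powers_w)
    temp = 0.0
    # Walk inward from the heat sink; path i carries the heat of dies 0..i.
    for i in range(len(powers_w) - 1, -1, -1):
        temp += sum(powers_w[: i + 1]) * path_r_k_per_w[i]
        rises[i] = temp
    return rises


if __name__ == "__main__":
    # Hypothetical 50 W logic die with a 0.5 K/W path to the coolant.
    alone = die_temperature_rises([50.0], [0.5])
    # Same cooling path, but a 5 W memory die (0.25 K/W bond plus die) now
    # sits between the logic and the heat sink.
    stacked = die_temperature_rises([50.0, 5.0], [0.25, 0.5])
    print(f"logic alone: +{alone[0]:.1f} K, logic under memory: +{stacked[0]:.1f} K")
    # logic alone: +25.0 K, logic under memory: +40.0 K
```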

And then what?

Of course, designing the package is only the beginning. Assembling it likely requires several temporary bonding and debonding steps, transferring singulated dice to temporary carriers and then to redistribution layers. Testing requires careful consideration of the cost exposure at each step, as well as an understanding of which functions can be tested in the unassembled state and which can only be tested as part of the integrated system. Future articles will look at these steps in more detail.
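One way to think about cost exposure is to compare assembling untested dice against testing each die first (a known-good-die flow). The sketch below assumes independent failures, perfect tests, and hypothetical costs and yields. It shows only that a single bad die scraps every good die bonded to it, so testing earlier can pay for itself. The function and its parameters are invented for illustration.

```python
import math
from typing import Sequence


def cost_per_good_package(
    die_costs: Sequence[float],
    die_yields: Sequence[float],
    bond_yield: float,
    assembly_cost: float,
    test_cost_per_die: float = 0.0,
    pretest: bool = False,
) -> float:
    """Expected cost of one working package (hypothetical model).

    Assumptions: failures are independent, tests are perfect, and n dice are
    joined through n - 1 bonding interfaces that each succeed with bond_yield.
    """
    if len(die_costs) != len(die_yields):
        raise ValueError("die_costs and die_yields must have the same length")
    bond_ok = bond_yield ** (len(die_costs) - 1)
    if pretest:
        # Known-good-die flow: test every die, discard failures before bonding.
        silicon = sum((c + test_cost_per_die) / y for c, y in zip(die_costs, die_yields))
        package_yield = bond_ok
    else:
        # Untested flow: one bad die scraps the whole assembly, good dice included.
        silicon = sum(die_costs)
        package_yield = math.prod(die_yields) * bond_ok
    return (silicon + assembly_cost) / package_yield


if __name__ == "__main__":
    costs, yields = [10.0] * 4, [0.9] * 4  # four hypothetical dice
    untested = cost_per_good_package(costs, yields, 0.99, 5.0)
    tested = cost_per_good_package(costs, yields, 0.99, 5.0, test_cost_per_die=1.0, pretest=True)
    print(f"untested: {untested:.2f}, known-good-die: {tested:.2f}")
```

The balance can shift the other way when tests are expensive or die yields are already close to 100%, which is why the trade-off has to be evaluated step by step.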

Great article! I have a couple questions to clarify my understanding. Sorry if I am sweating the details.

“in traditional single-chip packages, which have no internal connections at all…” – Does “internal connections” include BEOL metal interconnects? What counts as “internal connections”?

“module could be attached to … an advanced compute-in-memory accelerator,…” – Is this a research-level concept? I am not aware of any compute-in-memory products integrating into devices…

“requirements fall into an intermediate realm, not as demanding as the transistor process but cleaner than conventional packaging.” – Is it really the particle count that is the variable here, or is it wafer flatness, resolution, etc. that distinguishes BEOL and OSAT specs?

“More closely integrated components… might require a direct spray of either forced air or a liquid coolant.” – Is this a research-level concept? I haven’t heard of any cooling solutions integrated with the package…

Thanks so much!!!

Thank you for reading!

By “internal” connections, I mean connections between multiple pieces of silicon in the same package. The connections within the silicon chip aren’t considered. Connections between vertically stacked chips — such as a stacked memory module — are.

Yes, compute-in-memory accelerators are a research-level concept at this point, but one that is very much “front of mind” for the people consulted for this article. See this article for some background: https://semiengineering.com/challenges-emerge-for-in-memory-computing/

Resolution and particle requirements go hand in hand: you can’t allow particles that are bigger than the features you want to print. As noted, redistribution layers approach the resolution requirements of current BEOL layers, but are not nearly as demanding as the transistor process.

Yes, integrated cooling is a research concept.