As chipmakers search for the biggest bang for new markets, the emphasis isn’t just on process nodes anymore. But these kinds of changes also add risks.

Chipmakers increasingly are relying on architectural and micro-architectural changes as the best hope for improving power and performance across a spectrum of markets, process nodes and price points.

While discussion about the death of Moore’s Law predates the 1-micron process node, there is no question that it is getting harder for even the largest chipmakers to stay on that curve. Case in point: The final version of the ITRS roadmap was rolled out this summer. In preparation for that, IEEE announced in May that it plans to create an International Roadmap For Devices and Systems, providing metrics that are relevant to specific markets instead of a one-size-fits-all roadmap based almost entirely upon device scaling. That was followed in June by an advanced packaging roadmap from IEEE and SEMI for systems in package.

Both of those moves, as well as many others, point to a growing reliance on architectures and microarchitectures to optimize power, performance and area, rather than just adding more transistors onto a die. And they put far more pressure on architects of all types—power, chip, system and software—to replace the gains once provided by device scaling.

“Architects used to be instrumental in defining a new family of chips,” said Mike Gianfagna, vice president of marketing at eSilicon. “Now they’re instrumental in defining new chips. The difference is that a new family of chips happens every few years. A new chip is every few months.”

There is clear evidence this shift is well underway within big chip companies such as Intel, Samsung, AMD, and Nvidia, all of which have been wrestling with dwindling power/performance returns for several process nodes. At the Hot Chips conference last month, all of them uncorked new architectures that reduce feature scaling to just one more knob to turn in a design. An equal or greater emphasis is being placed on signal throughput to memory and I/O, including parallelization, lower power consumption, and how chips will provide competitive advantages for specific market uses rather than pitting the performance specs of one general-purpose processor against the next.

Nvidia’s new Parker SoC is one example. The company is pitching it as a compute platform for autonomous machines of all sorts. The device is built for throughput and speed. So while it includes a 16nm finFET-based CPU, with two 64-bit “Denver” ARM cores and four A57s, it also includes a number of other chips, including a 256-core GPU, display and audio engines, a safety engine, a secure boot processor, and DDR4 memory, which is just starting to be used in servers.

“This is optimized to run heavy workloads and use a battery,” said Andi Skende, lead architect on Nvidia’s new chip and a distinguished engineer at the company. Underpinning the chip’s signal plumbing is a triple pipeline with support for up to eight virtual machines using hardware-assisted virtualization. “Each virtual machine can control its own display.”

That virtualization is one part of the chip’s security, which Skende said is architected end-to-end.

Fig. 1: Nvidia’s Parker chip architecture. Source: Nvidia.

What’s new here is the emphasis on digital horsepower for automotive applications—a market where overall chip performance has not been a consideration in the past—combined with power management and security.

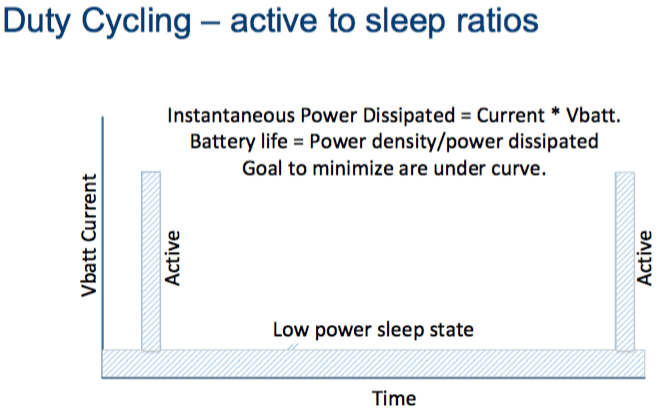

At the other end of the scale, Intel rolled out a new microcontroller chip for the embedded IoT market based on the Quark architecture and designed for “duty cycle use cases,” according to Peter Barry, principal engineer at Intel.

“This is designed to run up to 10 years off a coin-cell battery,” said Barry. “The power target is 1 milliwatt active power.”

Fig. 2: Intel’s MCU tradeoffs. Source: Intel.

Intel’s MCU is optimized for cost, performance and power at the same time, so it will likely have an average selling price of between $1 and $1.50, he noted. He said components such as memory were chosen on the basis of cost, which is unusual for Intel. “You also can get a 30% power improvement by switching to a lower (memory) retention mode.”
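The 10-year coin-cell target and the 1 milliwatt active-power figure quoted above imply a very low duty cycle. The following is a rough sketch of that arithmetic, not Intel’s own analysis. The cell capacity (about 225 mAh at a nominal 3.0 V, typical of a CR2032-class cell), the 2 microwatt sleep power, and the function names are all assumptions chosen for illustration; self-discharge and conversion losses are ignored.

```python
# Back-of-the-envelope duty-cycle budget for a coin-cell-powered MCU.
# ASSUMPTIONS (not from the article): a CR2032-class cell of about 225 mAh
# at a nominal 3.0 V, no self-discharge, and ideal power conversion.

HOURS_PER_YEAR = 24 * 365


def average_power_budget_w(capacity_mah: float, voltage_v: float, years: float) -> float:
    """Average power (watts) that drains the cell in exactly `years`."""
    energy_wh = (capacity_mah / 1000.0) * voltage_v
    return energy_wh / (years * HOURS_PER_YEAR)


def max_duty_cycle(budget_w: float, active_w: float, sleep_w: float = 0.0) -> float:
    """Largest fraction of time the chip can be active within the budget.

    Solves budget = d * active + (1 - d) * sleep for d.
    """
    if active_w <= sleep_w:
        raise ValueError("active power must exceed sleep power")
    if budget_w <= sleep_w:
        return 0.0  # sleep current alone already exceeds the budget
    return min(1.0, (budget_w - sleep_w) / (active_w - sleep_w))


budget = average_power_budget_w(capacity_mah=225.0, voltage_v=3.0, years=10.0)
duty_ideal = max_duty_cycle(budget, active_w=1e-3)
# Hypothetical 2 microwatt sleep power, purely to show the sensitivity.
duty_with_sleep = max_duty_cycle(budget, active_w=1e-3, sleep_w=2e-6)

print(f"average power budget: {budget * 1e6:.2f} uW")
print(f"max duty cycle (zero sleep power): {duty_ideal:.2%}")
print(f"max duty cycle (2 uW sleep power): {duty_with_sleep:.2%}")
```

Under these assumptions the average budget comes out to a few microwatts, so a part drawing 1 milliwatt when active can be awake for less than 1% of the time. That is consistent with the “duty cycle use cases” framing, and it shows why sleep and retention power matter as much as active power.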

Samsung’s 14nm quad-core M1, with multi-stream pre-fetch, out-of-order instruction execution and neural net-based prediction, is another unique architecture for multiple purposes. Even AMD’s Zen architecture is a new twist on x86, with dedicated PCIe I/O lanes.

“We’re seeing a lot more smart data acceleration,” said Steven Woo, distinguished inventor and vice president of solutions marketing at Rambus. “We’ve been relying on Moore’s Law, which has outpaced every other curve for storage, networking and memories. But there is so much data these days. The bottleneck is now about moving that data. It may be better to move the processing to the data than the data to the processing. It’s smaller.”

The memory conundrum

Getting data in and out of memory is a huge issue these days at every level, which is why there are so many new memory types being introduced. Each has benefits, as well as drawbacks.

At the Flash Memory Summit in Santa Clara, Calif., last month, the buzzword was “storage class memory,” or SCM. While this term is still more of a wish-list item than a proven reality, it does point to a problem in system architectures today. It takes too long to get data from the processor to DRAM, and from DRAM to external storage. Solid-state drives are faster and use less power than spinning magnetic disks, but the existing technology is not robust enough to be entrusted with storing valuable data.

While there are plenty of possible candidates—3D-XPoint, ReRAM, MRAM—there are tradeoffs involving performance, power, and economics. The latter depends on whether there will be enough backing of any one of these technologies to be able to achieve the same kinds of economies of scale that DRAM and spinning media have achieved.

But regardless of which memory type wins, the challenge of getting data in and out of that memory more quickly and efficiently has spawned some interesting architectural approaches. In addition to pipelining, which is commonplace in high-speed architectures, there also has been a significant amount of attention this year on silicon photonics.

Intel announced last month that it has developed a way to integrate a silicon photonics laser directly onto the chip using existing manufacturing processes, opening the door to much faster movement of data between and within systems. This is critical for overcoming memory bottlenecks using existing storage, giving architects better options to optimize performance and power, and ultimately to reduce the cost and overhead of moving large quantities of data.

That is by no means the only option, however. In fact, companies are experimenting with new ways to combine existing technologies to produce something entirely different.

“With storage-class memory, the goal is enhanced endurance and access time that is close to DRAM, but certainly faster than NAND with better endurance,” said Sheng Huang, director of technical marketing in Marvell’s storage group. “So we’re starting to see DRAM paired with non-volatile memory, and SSD paired with hard disk drives. From a user point of view, they see one drive, but it’s a hybrid hard disk drive. The hard drive also does caching, so you’re taking advantage of the locality of the data. With an Apple system, they have the software to do the caching and they know the OS file, so they can say this is the file to use. For the rest of the world, hybrid drives will be the next big thing.”
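To make the locality argument concrete, here is a minimal sketch of a small, fast cache sitting in front of a slower backing store, with least-recently-used (LRU) eviction. It does not represent any vendor’s firmware or Apple’s software; the `HybridDrive` class, the block numbering, the 16-block cache size, and the synthetic access pattern are all hypothetical.

```python
from collections import OrderedDict


class HybridDrive:
    """Toy model: a small fast tier caching blocks from a slow tier (LRU)."""

    def __init__(self, cache_blocks: int):
        self.cache_blocks = cache_blocks
        self.cache = OrderedDict()  # block number -> data, oldest first
        self.hits = 0
        self.misses = 0

    def _read_from_disk(self, block: int) -> str:
        # Stand-in for the slow path (for example, a spinning disk).
        return f"data-{block}"

    def read(self, block: int) -> str:
        if block in self.cache:
            # Fast path: mark the block as most recently used.
            self.cache.move_to_end(block)
            self.hits += 1
            return self.cache[block]
        # Slow path: fetch the block, then keep a copy in the fast tier.
        self.misses += 1
        data = self._read_from_disk(block)
        self.cache[block] = data
        if len(self.cache) > self.cache_blocks:
            self.cache.popitem(last=False)  # evict the least recently used
        return data


# Synthetic workload with strong locality: mostly a hot set of 8 blocks,
# with a one-off "cold" block on every tenth access.
drive = HybridDrive(cache_blocks=16)
for i in range(1000):
    block = 1000 + i if i % 10 == 0 else i % 8
    drive.read(block)

hit_rate = drive.hits / (drive.hits + drive.misses)
print(f"hits={drive.hits} misses={drive.misses} hit rate={hit_rate:.1%}")
```

Because the hot set fits comfortably in the fast tier, most reads never touch the slow tier. A workload with little locality would see far less benefit from the same cache.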

Still, a number of these options are new technologies. Kevin Ilcisin, vice president of product marketing at National Instruments, said there is plenty of evidence that big changes are underway in the market. “Semiconductor characterization is a large part of what we do, and we are engaged with a lot of customers about unique requirements. They need a set of tests done, and we support the ability to make those tests. But they also need to quickly change those test protocols.”

Yet even if each of these technologies is well characterized and proven in silicon, no one can identify all of the possible corner cases. Even something as straightforward as a memory controller can cause problems. So for architects, this presents an unprecedented opportunity as well as a potential minefield.

Limiting risk

This helps explain why so many companies are starting out in older nodes, where the risk-benefit equation is better understood. The number of new options at older nodes is rising with new memory architectures and tweaks to existing processes. In fact, this is where the vast majority of chip architects are working today. And as the semiconductor market gap continues to solidify between those moving to finFETs and those sticking with planar transistors, the innovation at 22nm and above is rising as quickly as below 22nm. Rather than behaving like “fast followers,” the established node segment is undergoing significant leading-edge innovation of its own.

“People are working smarter to get more out of older nodes,” said the CEO of Teklatech. “The big problem with new technologies is that they’re so expensive you need huge volume to make sense. And if you look at different markets, there are different priorities. In automotive, robustness is becoming more important than performance. So people are turning to physical design, architectural design and apps. It’s the whole stack. The modern car of the future will be a supercomputer on wheels. But it has to work.”

Different substrates at existing nodes, such as FD-SOI, have received a fair amount of attention, as well. This is particularly true for companies where power is a huge concern, but finFETs are too expensive. GlobalFoundries today announced 12nm FD-SOI planar technology, which it claims will offer a 15% performance boost over today’s finFETs and up to 50% lower power consumption.

Also new on the FD-SOI front is an examination of how to extend body biasing to replace multiple cores. Wayne Dai, CEO of VeriSilicon, said the goal is to replace heterogeneous cores with a single core that can handle more than one function efficiently, whether it’s being used for light or intensive compute tasks.

Other areas of focus

While power and performance continue to drive many of the architectural decisions, security increasingly has a seat at the table—particularly for safety critical applications.

For some companies, this has become an area of differentiation. Anti-fuse one-time programmable NVM, for example, is finding its way into security applications, according to Lee Sun, field applications engineer at Kilopass. “It cannot be hacked using passive, semi-invasive and invasive methods. For chips that contain encryption keys or other unique identifiers, there needs to be a way to program each chip individually and securely. In the old days, anyone could buy an analog descrambler and get cable channels for free. These days, each cable box has a unique key that allows only verified subscribers access to the channels. These keys are encrypted and stored in the chip hardware.”

These kinds of details used to be handled further down the line in the design process. But as integration tightens to serve individual markets, even these parts are moving further up in the design process. That requires a lot more information to juggle, a lot more decisions to make, and a very good methodology for fixing mistakes as quickly as possible. While architectural innovation may be the way forward, there are still a lot of questions that will need to be answered.

