There is no perfect memory, but there are certainly a lot of contenders.

The search continues for new non-volatile memories (NVMs) to challenge the existing incumbents, but before any technology can be accepted, it must be proven reliable.

“Everyone is searching for a universal memory,” says TongSwan Pang, Fujitsu senior marketing manager. “Different technologies have different reliability challenges, and not all of them may be able to operate in automotive grade-0 applications.”

Most of these new technologies fall into a category referred to as “storage-class memory,” or SCM. They fit into a range between bulk storage technologies like NAND flash and working memory like DRAM. There are at least four non-volatile technologies vying for this role, some of which have found commercial success, and none of which seems poised to be the final winner at the expense of the others. Compared with flash, these memories offer easier and faster read and write operations, including byte addressability. In addition, reads and writes tend to be symmetric (or nearly so), in stark contrast to flash memory.

Alternatives to flash have become more attractive, especially for memory embedded onto systems-on-chips (SoCs) and microcontrollers (MCUs). Below 28 nm, the extra process steps for embedded flash become more expensive because of the high voltages, charge pumps, and many additional masks they require. While some companies have pushed beyond this limit, the scaling doesn’t appear to be sustainable. Says memory analyst Jim Handy, general director for Objective Analysis, “NOR flash [typically used when embedding] seems incapable of getting to 14 nm.” This has increased demand for technologies that operate at system voltages and require only 2 to 3 additional masks for embedding.

Three Main New Candidates – Plus One

There are three main contenders for novel NVM production, although there are more in early stages (some of which we’ll mention shortly). Confusingly, some refer to all three of these as “resistive memories,” because they all involve measuring a change in resistance to determine the state.

The oldest is phase-change memory (PCRAM or PCM). This is the technology used in rewritable CDs, involving a chalcogenide material that crystallizes at one temperature and becomes amorphous at another. The two states have different resistances. A cell is programmed by heating it to the appropriate temperature. This is the technology that Intel and Micron collaborated on for their Optane “crosspoint” memory.

Fig. 1: PCRAM bit cell. Source: Image by Cyferz at English Wikipedia

The use of the word “crosspoint” in their branding can be confusing, since any of these memories can be configured as a crosspoint memory: a simple array of word lines and bit lines crossing each other orthogonally, with a cell at each intersection (similar to SRAM, but not to flash). When some people refer to crosspoint memory, they’re referring specifically to the Intel product. Others use the term more generally.
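To make the phase-change programming mechanism concrete, here is a toy Python model of a single PCM cell. It assumes the commonly described scheme in which heating the material above its melting point and cooling it quickly leaves it amorphous (high resistance), while heating it above its crystallization temperature but below melting lets it crystallize (low resistance). The threshold temperatures, resistances, and bit convention are hypothetical placeholders, not values for any real alloy or product.

```python
from dataclasses import dataclass

# Hypothetical thresholds for illustration only; real values depend on the alloy.
T_CRYSTALLIZE_C = 150.0    # assumed crystallization temperature
T_MELT_C = 600.0           # assumed melting temperature
R_CRYSTALLINE_OHM = 10e3   # assumed low-resistance (crystalline) state
R_AMORPHOUS_OHM = 1e6      # assumed high-resistance (amorphous) state
READ_THRESHOLD_OHM = 100e3  # assumed sense reference between the two states


@dataclass
class PcmCell:
    amorphous: bool = False

    def apply_pulse(self, peak_temp_c: float) -> None:
        """Heat the cell to peak_temp_c with a programming pulse."""
        if peak_temp_c >= T_MELT_C:
            # Melt, then (assumed) quench: the material freezes disordered.
            self.amorphous = True
        elif peak_temp_c >= T_CRYSTALLIZE_C:
            # Anneal between the two thresholds: the material crystallizes.
            self.amorphous = False
        # Below the crystallization temperature (e.g., a read), nothing changes.

    def resistance(self) -> float:
        return R_AMORPHOUS_OHM if self.amorphous else R_CRYSTALLINE_OHM

    def read_bit(self) -> int:
        # Assumed convention: low resistance reads as 1, high resistance as 0.
        return 1 if self.resistance() < READ_THRESHOLD_OHM else 0


cell = PcmCell()
cell.apply_pulse(700.0)   # peak above the melting point -> amorphous
print(cell.read_bit())    # 0
cell.apply_pulse(300.0)   # peak between the thresholds -> crystalline
print(cell.read_bit())    # 1
```

The model ignores pulse duration and cooling rate, both of which matter in real cells; it only captures the idea that the peak temperature selects the final state, which is then sensed as a resistance.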

The next – and the farthest along as a memory technology – is magnetic RAM, or MRAM. Our focus here will be the current generation of MRAM technology, referred to as spin-transfer torque, or STT-MRAM. This involves two magnetizable layers sandwiching a tunneling material, referred to as a magnetic tunnel junction, or MTJ. One of the layers (the “pinned” or “reference” layer) has a fixed polarity. The other (the “free” layer) has a polarity that can be set by a current running through the cell. The resistance the tunneling current sees depends on whether the pinned and free layers have parallel or anti-parallel magnetization. MRAM has been available for some time in various guises.

Fig. 2: MRAM bit cell. Source: Cyferz Wikipedia, (public domain)

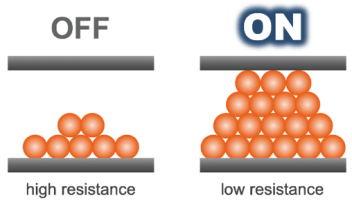
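The MRAM read mechanism described above comes down to telling two resistances apart. A common figure of merit is the tunneling magnetoresistance (TMR) ratio, (R_AP - R_P) / R_P, where R_P and R_AP are the parallel and anti-parallel resistances. The sketch below assumes made-up resistance values and the convention that the parallel state reads as 0; it computes TMR and senses a bit by comparing against a midpoint reference. A lower TMR, such as the high-temperature reduction Meng Zhu of KLA describes, shrinks the margin on either side of that reference.

```python
def tmr_ratio(r_parallel: float, r_antiparallel: float) -> float:
    """Tunneling magnetoresistance ratio: (R_AP - R_P) / R_P."""
    return (r_antiparallel - r_parallel) / r_parallel


def read_mtj(r_measured: float, r_parallel: float, r_antiparallel: float) -> int:
    """Sense a bit by comparing against a reference midway between the states.

    Assumed convention: parallel (low resistance) = 0, anti-parallel (high) = 1.
    """
    r_ref = (r_parallel + r_antiparallel) / 2.0
    return 1 if r_measured > r_ref else 0


R_P = 5_000.0    # assumed parallel-state resistance, ohms
R_AP = 12_500.0  # assumed anti-parallel-state resistance, ohms

print(f"TMR = {tmr_ratio(R_P, R_AP):.0%}")  # TMR = 150%
print(read_mtj(5_200.0, R_P, R_AP))         # 0
print(read_mtj(12_000.0, R_P, R_AP))        # 1
```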

Finally, there’s resistive RAM (RRAM or ReRAM), whose name can be confusing given these are all resistive technologies. There are two main flavors of RRAM — one where material from the electrodes migrates in to create a conductive filament across a dielectric, and the other where oxygen ions and vacancies migrate around to create a conductive path (or not). This is a much more wide-open category, with lots of experimentation to find the best recipe.

“RRAM is attractive for the abundance of materials for the cell,” says Tomasz Brozek, senior fellow at PDF Solutions (a company that, while not a memory supplier, helps suppliers with characterization and evaluation for technology bring-up, exposing them to many of the low-level issues that must be worked out).

Fig. 3: Conceptual RRAM cell states. Source: Adesto

One challenge with all of these technologies is an intrinsic stochasticity in the programming mechanisms that isn’t present in flash or DRAM. Writing a value into a cell may occasionally produce the wrong value. This isn’t a defect in the cell itself; the next time, it may program perfectly. This becomes an issue that must be mitigated through accompanying circuitry such as error-correcting code (ECC).
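As a minimal illustration of how ECC masks an occasional mis-programmed cell, the sketch below uses a Hamming(7,4) code, which stores 4 data bits in 7 cells and corrects any single flipped bit. It is an assumed stand-in chosen because it's the smallest single-error-correcting code; production memories use stronger codes selected by each vendor.

```python
from typing import List


def hamming74_encode(d: List[int]) -> List[int]:
    """Encode 4 data bits as a 7-bit codeword laid out [p1, p2, d1, p4, d2, d3, d4]."""
    d1, d2, d3, d4 = d
    p1 = d1 ^ d2 ^ d4  # parity over positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # parity over positions 2, 3, 6, 7
    p4 = d2 ^ d3 ^ d4  # parity over positions 4, 5, 6, 7
    return [p1, p2, d1, p4, d2, d3, d4]


def hamming74_decode(c: List[int]) -> List[int]:
    """Correct up to one flipped bit and return the 4 data bits."""
    c = list(c)  # work on a copy so the caller's list is untouched
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s4 = c[3] ^ c[4] ^ c[5] ^ c[6]
    err_pos = s1 + 2 * s2 + 4 * s4  # 1-based position of the bad bit; 0 if none
    if err_pos:
        c[err_pos - 1] ^= 1
    return [c[2], c[4], c[5], c[6]]


data = [1, 0, 1, 1]
stored = hamming74_encode(data)
stored[5] ^= 1  # simulate one cell that programmed to the wrong value
print(hamming74_decode(stored))  # [1, 0, 1, 1]
```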

There’s also a newer NVM technology from Nantero, called NRAM, which is made with carbon nanotubes (CNTs; the N in NRAM stands for “nanotube”). The programmable CNTs form a loose, randomly oriented mass. Using heat (delivered by current) or electrostatic force, the CNTs can be pushed closer together or farther apart. As they cross the van der Waals limit, they are either attracted to each other (on one side of it) or repelled (on the other), which makes the programmed state stable. In the packed-together state, the CNTs contact each other and conduct. In the apart state, they don’t. Thus, again, resistance is the parameter to read.

Fig. 4: NRAM bit cell. Source: Nantero

Because these technologies are new, they haven’t yet taken on a challenge that flash already has: multi-level cells. Each cell still represents one bit, either on or off. Multi-level cells allow intermediate values so that, for example, a four-level cell can store two bits’ worth of data. More recently, this has been taken to an extreme both with flash and with RRAM (and tried with others as well) by using them as analog memory, with an almost continuous range of programming in the cell. This is beneficial for machine-learning applications that use so-called “in-memory compute.”
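Reading a multi-level cell amounts to comparing one sensed value against several thresholds. The sketch below assumes a sensed value normalized to the range 0 to 1 and three hypothetical thresholds; it shows that a four-level cell yields two bits and maps each level to bits with a Gray code. Gray coding is an assumed (though common) choice: neighboring levels differ in exactly one bit, so a read that lands one level off corrupts only one bit, which ECC can then correct.

```python
from bisect import bisect_right
from typing import List, Sequence


def bits_per_cell(levels: int) -> int:
    """A cell with `levels` distinguishable states stores log2(levels) bits."""
    if levels < 2 or levels & (levels - 1):
        raise ValueError("this sketch assumes a power-of-two number of levels")
    return levels.bit_length() - 1  # exact log2 for powers of two


def sense_level(value: float, thresholds: Sequence[float]) -> int:
    """Return the level index of a sensed value, given sorted read thresholds."""
    return bisect_right(thresholds, value)


def level_to_bits(level: int, nbits: int) -> List[int]:
    """Gray-code the level so adjacent levels differ in exactly one bit (MSB first)."""
    gray = level ^ (level >> 1)
    return [(gray >> i) & 1 for i in reversed(range(nbits))]


THRESHOLDS = [0.25, 0.50, 0.75]  # hypothetical normalized read thresholds for 4 levels
nbits = bits_per_cell(4)
level = sense_level(0.62, THRESHOLDS)
print(nbits, level, level_to_bits(level, nbits))  # 2 2 [1, 1]
```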

Because multi-level and analog usage requires much greater programming and reading precision, “more and more memories are using write-verify,” says Brozek. This approach writes to the memory, then reads the cell back to check the result, repeating until the desired level has been reached. This takes longer but gives a more precise result.
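A write-verify loop can be sketched as follows. The cell model is hypothetical: each programming pulse shifts an analog level by a random 50% to 150% of the requested step, standing in for the stochastic programming discussed earlier. The loop reads back after every pulse, shrinks the step as the level approaches the target so it doesn't overshoot, and gives up after a fixed pulse budget. The step policy and tolerance are illustrative assumptions, not any vendor's algorithm, and the model assumes a cell that can be nudged in either direction.

```python
import math
import random


class NoisyCell:
    """Toy analog cell (hypothetical model): each pulse lands with a random error."""

    def __init__(self, seed: int = 0) -> None:
        self.level = 0.0
        self._rng = random.Random(seed)

    def pulse(self, step: float) -> None:
        # The actual shift is a random 50% to 150% of the requested step.
        self.level += step * self._rng.uniform(0.5, 1.5)

    def read(self) -> float:
        return self.level


def write_verify(cell: NoisyCell, target: float, tol: float = 0.02,
                 step: float = 0.1, max_pulses: int = 100) -> int:
    """Pulse, read back, and repeat until the cell is within `tol` of `target`.

    Returns the number of pulses used; raises RuntimeError if the pulse
    budget runs out before the cell verifies.
    """
    pulses = 0
    while abs(target - cell.read()) > tol:
        if pulses == max_pulses:
            raise RuntimeError("cell failed to verify within the pulse budget")
        error = target - cell.read()
        # Full step when far away; half the remaining error when close,
        # so even a 150% pulse cannot overshoot the target.
        cell.pulse(math.copysign(min(step, abs(error) / 2), error))
        pulses += 1
    return pulses


cell = NoisyCell(seed=42)
used = write_verify(cell, target=0.7)
print(f"{used} pulses, final level {cell.read():.3f}")
```

Because the programming is random, the pulse count varies from seed to seed. Tightening `tol` buys precision at the cost of more iterations, which is the speed-for-precision trade Brozek describes.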

Each of these memories can be made either as a dedicated bulk chip or for embedding into SoCs or MCUs. As a bulk chip, the process can be tailored for maximum yield and reliability. When embedded, however, the memory must conform as closely as possible to the underlying CMOS process, which makes it more expensive on a per-bit basis while still requiring high reliability. Intel’s Optane is a bulk memory; it’s not clear that the technology would be appropriate for embedding. Both Brozek and Handy see MRAM vying with NOR flash as an embedded memory. RRAM is available as a bulk technology from Adesto, but it is also of broad interest as an embedded technology.

NVM Reliability Considerations

As NVMs, all of these technologies share reliability considerations with flash memory, in addition to the considerations that apply to all integrated circuits. Of particular importance are data retention and endurance. Neither of these is an issue for volatile memories like DRAM and SRAM. Ironically, they’re not an issue for DRAM because its data retention is so short that refresh circuitry is a basic DRAM requirement, making data loss a non-issue as long as power is maintained. In general, refresh is considered undesirable (although possible) in these new NVM technologies.

Data retention is a specification that indicates how long a memory will maintain its contents under all conditions, whether in storage or in operation. With flash technology, a thin dielectric allows electrons to pass into or out of the floating gate during programming and erasing. In theory, those electrons are then trapped in the floating gate, with no obvious way for them to leak out. But individual electrons can slowly leak away, either by gaining enough thermal energy to exceed the energy barrier or by tunneling through the dielectric. Given enough time, enough electrons can leak away to degrade the cell’s state.

Each NVM technology, including the new ones, has some mechanism by which data gradually leaks away. So data retention becomes a guarantee that, for some period of time, the memory will keep its contents. After that, it may still keep its contents for longer, but there’s no guarantee. Ten years has been a typical spec for flash, although automotive applications are pushing that requirement out, given the long lifetimes of cars.

Data retention is linked to the other basic NVM spec, endurance. Each time an NVM is programmed, some slight damage may occur. In flash, that damage comes from electrons becoming trapped in the dielectric separating the floating gate from the rest of the circuit. Defects also may form that hasten the leakage of electrons from the floating gate. Endurance is the number of times a memory can be programmed before the data retention falls below spec.

In theory, it’s possible to program a given device beyond its endurance, and it’s likely to continue to operate, but with shorter data retention. Some devices, however, may count programming cycles and block programming beyond the limit. For many years, 10,000 cycles was the bar that flash memories were expected to reach. Nowadays, more and more memories are specified for 100,000 cycles.

These characteristics require mitigations outside the memory in order to maximize the life of a given chip. If data, for example, is concentrated in the lower half of a memory, then those cells may wear out while the upper half of the memory remains largely untouched. That is an inefficient use of the cells. Wear-leveling is a technique imposed outside the memory that moves data around to different locations to ensure that the entire memory is used, extending the life of the chip.
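A minimal dynamic wear-leveling scheme can be sketched as a table that remaps each logical block to a physical block. On every rewrite, the hypothetical `WearLeveler` class below redirects the data to the least-worn free physical block and frees the old one. It also counts program cycles per physical block and refuses to program a block that has reached its endurance limit, mirroring the cycle-counting behavior described above. It doesn't implement static wear-leveling (relocating rarely written data), which real controllers often add.

```python
from typing import Dict, List


class WearLeveler:
    """Minimal dynamic wear-leveling sketch (hypothetical, not a vendor algorithm)."""

    def __init__(self, num_physical: int, endurance: int) -> None:
        self.endurance = endurance
        self.cycles: List[int] = [0] * num_physical   # program count per physical block
        self.free = set(range(num_physical))          # physical blocks holding no live data
        self.map: Dict[int, int] = {}                 # logical block -> physical block

    def write(self, logical: int) -> int:
        """Program `logical` into the least-worn free block; return that block."""
        usable = [p for p in self.free if self.cycles[p] < self.endurance]
        if not usable:
            raise RuntimeError("no free block is still within its endurance limit")
        # Least-worn first; ties broken by block index for deterministic behavior.
        target = min(usable, key=lambda p: (self.cycles[p], p))
        self.free.remove(target)
        old = self.map.get(logical)
        if old is not None:
            self.free.add(old)  # the stale copy's block becomes reusable
        self.map[logical] = target
        self.cycles[target] += 1
        return target


# Hammer a single logical block; without remapping, one physical block
# would absorb all 1,000 program cycles.
wl = WearLeveler(num_physical=8, endurance=1000)
for _ in range(1000):
    wl.write(0)
print(max(wl.cycles), min(wl.cycles))  # 125 125
```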

Wear-leveling isn’t a panacea, however, notes Brozek. “You can use wear-leveling for mass storage, but you can’t do it for [embedded] MCU NVM,” since embedded MCU NVM is primarily used to store code, which must reside at known, fixed locations.

All of these considerations will apply to any new NVM technology. For the most part, new NVMs must meet the levels at which flash operates and, if possible, exceed them. If endurance were boosted by many orders of magnitude, wear-leveling might no longer be necessary, although that decision is out of the hands of the memory-maker and under the control of systems designers.

When looking at reliability, “All of these [technologies] will be non-ideal,” says Brozek. “You characterize them and add circuit-level tools to manage them. Once you have mitigations in place, then there should be a black box” with the intrinsic issues neutralized.

Temperature Grades

Any wear-out mechanisms will become more pronounced the higher the temperature. Data retention and endurance specs assume constant high temperatures, meaning a device that sees occasional high temperatures with periods of respite will likely last longer. But there’s no practical way of specifying such a varying temperature profile, so data sheets specify the worst case.
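One widely used, though not universal, way to quantify how temperature accelerates thermally activated wear-out is the Arrhenius model: the acceleration factor between a use temperature T_use and a stress temperature T_stress (both in kelvin) is exp[(Ea/k)(1/T_use - 1/T_stress)], where Ea is an activation energy and k is Boltzmann's constant. The sketch below uses it to convert a mission profile into equivalent hours at the worst-case temperature, which shows why a part with periods of respite ages more slowly than one held at constant high temperature. The 1.1 eV activation energy and the profiles are assumed placeholders; real activation energies depend on the failure mechanism and must be characterized for each technology.

```python
import math
from typing import Iterable, Tuple

BOLTZMANN_EV_PER_K = 8.617333262e-5
EA_EV = 1.1  # assumed activation energy, for illustration only


def arrhenius_af(t_use_c: float, t_stress_c: float, ea_ev: float) -> float:
    """How many times faster aging runs at t_stress_c than at t_use_c."""
    t_use_k = t_use_c + 273.15
    t_stress_k = t_stress_c + 273.15
    return math.exp((ea_ev / BOLTZMANN_EV_PER_K) * (1.0 / t_use_k - 1.0 / t_stress_k))


def equivalent_hours(profile: Iterable[Tuple[float, float]], t_ref_c: float,
                     ea_ev: float) -> float:
    """Convert (hours, temp_c) segments into equivalent hours at t_ref_c."""
    return sum(hours * arrhenius_af(t_ref_c, temp_c, ea_ev) for hours, temp_c in profile)


# One year held at 125°C versus one year spending 10% of the time at 125°C
# and the rest at 55°C, both expressed as equivalent hours at 125°C.
constant = equivalent_hours([(8760.0, 125.0)], t_ref_c=125.0, ea_ev=EA_EV)
mixed = equivalent_hours([(876.0, 125.0), (7884.0, 55.0)], t_ref_c=125.0, ea_ev=EA_EV)
print(f"constant: {constant:.0f} h, mixed: {mixed:.0f} h")
```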

But what does “high temperature” mean? For many years, there were two grades of integrated circuit: commercial, with a range of 0 to 70°C, and military, with a range of -55 to 125°C. These represented two very different businesses, since military-grade parts came with extremely stringent accompanying requirements. So some companies or divisions chose to focus on one or the other of those businesses. At some point, an industrial grade also materialized, with a range of -40 to 85°C. While industrial-grade products were not as difficult to produce as military-grade ones, they still tended to go into equipment that was distinct from equipment using commercial-grade ICs.

This has changed with the advent of the automotive market, which arguably requires military-level robustness at commercial-level prices. Realistically, however, not all components in a vehicle must operate at the temperatures experienced near the engine. For this reason, there are now five temperature grades for use within automobiles. This is a fundamental change in that all of these grades might be found within the same piece of equipment.

Fig. 5: Automotive temperature grades. Temperatures are ambient. Source for numbers: AEC Q100 Standard
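To show how the grades in Fig. 5 partition the temperature range, the hypothetical helper below picks the least demanding grade that covers a given ambient requirement. It assumes the commonly cited AEC-Q100 ambient ranges (Grade 0: -40 to 150°C, Grade 1: -40 to 125°C, Grade 2: -40 to 105°C, Grade 3: -40 to 85°C, Grade 4: 0 to 70°C); check the current revision of the standard before relying on these numbers.

```python
from typing import Optional

# Commonly cited AEC-Q100 ambient operating ranges in °C (assumed; verify
# against the current revision of the standard).
AEC_Q100_GRADES = {
    0: (-40.0, 150.0),
    1: (-40.0, 125.0),
    2: (-40.0, 105.0),
    3: (-40.0, 85.0),
    4: (0.0, 70.0),
}


def least_stringent_grade(t_min_c: float, t_max_c: float) -> Optional[int]:
    """Return the highest-numbered (least demanding) grade covering the range, or None."""
    for grade in sorted(AEC_Q100_GRADES, reverse=True):
        lo, hi = AEC_Q100_GRADES[grade]
        if lo <= t_min_c and t_max_c <= hi:
            return grade
    return None  # nothing covers it, e.g. below -40°C


print(least_stringent_grade(-40.0, 100.0))  # 2
print(least_stringent_grade(-30.0, 140.0))  # 0
```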

“Temperature is, in general, a big factor that affects electronic devices due to the change of thermal energy of electrons,” said Meng Zhu, product marketing manager at KLA. “For MRAM applications such as MCUs or cache memory, an operating temperature range of -40°C to 125°C is typically required. Higher ambient temperature can cause problems with data retention due to magnetic transitions above the anisotropy barrier and affect the readability of the device due to reduction of the tunneling magneto-resistance (TMR). All thermal processes used for the MRAM fabrication also need to be well controlled. An annealing process step is used to improve the crystallinity of the MgO/CoFeB layers, and as a result, to enhance anisotropy and TMR. On the other hand, too much thermal budget can lead to atomic inter-diffusion between the MRAM layers and degrade device performance. For example, Ta diffusion into the MgO barrier may degrade the crystallinity of MgO, while B diffusion out of CoFeB may alter the perpendicular anisotropy and affect TMR.”

A somewhat surprising temperature consideration is solder reflow. As Martin Mason, senior director of embedded memory for GlobalFoundries, describes it, each chip should be able to withstand five different solder reflow events. The first two occur when the chip is initially mounted on a board – one for surface mount, another for through-hole components on the same board. If rework is needed, then there will be one more to remove any faulty units and then up to two more to replace those units.

Each of these soldering cycles involves raising the temperature to the range of 220 to 270°C for 10 to 15 minutes, according to Shane Hollmer, founder and vice president of engineering for memory at Adesto. “Many of these technologies struggle with thermal stability and data retention.” This becomes an issue for devices that are programmed during chip testing prior to being assembled onto a board.
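Taking the quoted figures at face value, the worst case that a part programmed during chip testing must survive works out as follows. This only multiplies the numbers Mason and Hollmer give; actual reflow profiles vary.

```latex
\begin{aligned}
N_{\text{reflow}} &= \underbrace{2}_{\text{initial mount}} + \underbrace{1}_{\text{removal}} + \underbrace{2}_{\text{replacement}} = 5 \\
t_{\text{total}} &= 5 \times (10 \text{ to } 15~\text{min}) = 50 \text{ to } 75~\text{min at } 220 \text{ to } 270\,^{\circ}\text{C}
\end{aligned}
```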

Coming in part 2: What reliability challenges have been solved and which ones remain.
