Market opportunities for shared resources balloon, but verification/validation remains a challenge.

CXL is gaining traction inside large data centers as a way of boosting utilization of different compute elements, such as memories and accelerators, while minimizing the need for additional racks of servers. But the standard is being extended and modified so quickly that it is difficult to keep up with all the changes, each of which needs to be verified and validated across a growing swath of heterogeneous and often customized designs.

At its core, Compute Express Link (CXL) is a cache-coherent interconnect protocol for memories, processors, and accelerators, which can enable flexible architectures to more effectively handle different workload types and sizes. That, in turn, will help ease the pressure on data centers to do more with less, a seemingly mammoth challenge given the explosion in the amount of data that needs to be processed.

In the past, the typical solution was to throw more compute resources at any capacity problem. But as Moore’s Law slows, and the energy needed to power and cool racks of servers continues to rise, systems companies have been looking for alternative approaches. This has become even more critical as power grids hit their limits and societal demands for sustainability increase.

Largely developed by Intel, and based on the PCIe standard, CXL provides an appealing proposition in the midst of these conflicting dynamics. Optimizing the way a data center uses memory can boost performance, while also reducing stack complexity and system costs. Specifically, CXL allows for low-latency connectivity and memory coherency between the CPU and the memory on attached devices, keeping the data across these areas consistent.

This is particularly important for high-capacity workloads, such as AI training, where more data generally equates to increased accuracy, as well as for large-scale simulations needed for increasingly electrified vehicles, smart factories, drug discovery, and weather mapping, to name a few.

The CXL Consortium, formed in 2019 by founding members Intel, Alibaba, Google, Microsoft, HPE, Dell EMC, Cisco, Meta, and Huawei, introduced the first version of the specification that year based on PCIe 5.0. Since then, AMD, NVIDIA, Samsung, Arm, Renesas, IBM, Keysight, Synopsys, and Marvell, among others, have joined in various capacities, and the Gen-Z and OpenCAPI technologies have been folded in. In August 2022, the CXL 3.0 specification was introduced, with twice the bandwidth, support for multi-level switching, and coherency improvements for memory sharing.

As far as standards go, this one is progressing remarkably quickly. And given the groundswell of support for CXL by companies with deep pockets, it appears very likely this standard will become pervasive. But its rapid evolution also has made it difficult for IP developers to quickly pivot from one version of the standard to the next.

Bullish outlook

“We should see this take off in earnest over the next few years,” said Arif Khan, product marketing group director for PCIe, CXL, and Interface IP at Cadence. He noted that the total addressable market for CXL-based applications is expected to reach $20 billion by the year 2030, according to some memory maker forecasts.

Others are similarly optimistic. “There are tons of customers adopting CXL for their next-generation SoCs, for accelerators, SmartNICs and GPUs, as well as memory expansion devices,” said Richard Solomon, technical marketing manager of PCI Express Controller IP at Synopsys.

“Almost everyone is building their servers with CXL capability,” said Brig Asay, senior planning and marketing manager at Keysight Technologies. “Standards bodies such as JEDEC have reached agreements with CXL to work between the standards and ensure interoperability. CXL has also received assets from Gen-Z and OpenCAPI, which were offering similar capabilities to CXL, but CXL had strong staying power.”

Still, widespread adoption will take time, no matter how fast the standard progresses. Despite the attractiveness of shared resources, data centers are conservative when it comes to adopting any new technology. Any glitches can cost millions of dollars in downtime.

“While there is a lot of excitement around CXL, the technology is still in its early days,” said Jeff Defilippi, senior director of product management for Arm’s Infrastructure Line of Business. “For proliferation to happen, the solutions will need to undergo a rigorous functional and performance validation process with OEMs and cloud service providers before they see production deployment.”

Varun Agrawal, senior staff product marketing manager at Synopsys, observed that countless memory and server SoC companies have voiced support for CXL in the last three years. But bringing products that can fully support CXL topology and bandwidth to market is a slower process. “More and more designs are now choosing to adopt CXL for their PCIe datapaths through CXL.io, with a view to expanding into other types of devices. The proliferation of CXL in data centers in terms of product roll out has been slow, and one of the reasons is the lack of verification and validation infrastructure.”

The user community is increasingly looking for CXL transactors, virtual models and host solutions, in-circuit speed adaptors, and interface card hardware solutions as their first ask, while planning their verification/validation, Agrawal noted. “CXL exemplifies the software-first approach for companies looking to kickstart hardware-software validation, software bring-up, and compliance in parallel to achieve their time-to-market goals.”

System-level verification is also a requirement. “Depending on the features supported, the verification could span across memory features like resource sharing, pooling, and expansion; coherency between host and devices; security and routing; hot remove and add; multiple domains with different virtual hierarchies; and interconnect performance — especially latency for .cache and .mem,” Agrawal explained.

Popular attributes

So why is CXL being adopted, despite these gaps? Synopsys’ Solomon said CXL’s initial focus was on cache coherency, and the industry was intrigued by its asymmetric coherence protocol. It was only later that the focus shifted to addressing the limitations of traditional memory attachment and a DRAM interface.

“Now you’ve got this caching approach and this memory attach, and each of them is driving CXL into the data center in different ways,” he explained. “For AI and machine learning, SmartNICs, data processing units, add-on devices for servers focused on intelligently dealing with data in the server rather than the host CPU. Those folks are really interested in having a cache coherence interconnect. For hyperscalers, CXL creates a separation between processor and memory that allows for a more efficient allocation of resources between jobs that require different amounts of volatile and non-volatile memory.”

“In addition, low latency, coherency across interconnects, and being able to support the hierarchical needs of memory for data center applications make CXL attractive,” Agrawal said. “As it uses the existing PCIe PHY layer, interoperability helps drive early adoption and increases the product life cycle.”

This makes CXL ideal for data center applications. “CXL provides cache coherency for memory to access the CPU,” said Keysight’s Asay. “It also enables the pooling of memory resources, which is ideal because it increases the overall utilization of DRAM in the data center.”

While CXL has multiple use cases, Arm’s Defilippi said cloud providers are extremely optimistic about the ability to share memory capacity across a set of nodes and increase the GB/vCPU for key applications. “For cloud vendors it is cost-prohibitive to richly provision DRAM across all of their systems. But by having access to a CXL-attached pool of DRAM, they now can take systems with just 2GB/vCPU and assign additional DRAM capacity, making these systems more suitable to a broad range of workloads. For systems that are already highly provisioned (e.g., 8GB/vCPU), the extra CXL-attached memory now can make them suitable for applications needing massive memory footprints, like some ERP systems, that today might not run in the cloud. In this example, CXL becomes a gateway for additional workload migration to the cloud.”
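The arithmetic behind Defilippi’s example is simple enough to sketch. The Python below is a minimal illustration, not a sizing tool: the 64-vCPU host, the size of the pooled slice, and the helper names `gb_per_vcpu` and `pool_needed_gb` are hypothetical, and the model treats local and CXL-attached DRAM as interchangeable capacity, ignoring the latency difference between them.

```python
def gb_per_vcpu(local_dram_gb: float, pooled_gb: float, vcpus: int) -> float:
    """Memory per vCPU after a slice of a CXL-attached pool is assigned (hypothetical helper)."""
    if vcpus <= 0:
        raise ValueError("vcpus must be positive")
    return (local_dram_gb + pooled_gb) / vcpus


def pool_needed_gb(vcpus: int, local_dram_gb: float, target_gb_per_vcpu: float) -> float:
    """Pooled capacity needed to lift a host to a target GB/vCPU ratio (hypothetical helper)."""
    # A host that already meets the target needs nothing from the pool.
    return max(0.0, float(target_gb_per_vcpu * vcpus - local_dram_gb))


# Hypothetical 64-vCPU host provisioned at 2GB/vCPU, i.e. 128 GB of local DRAM.
vcpus, local = 64, 128
print(gb_per_vcpu(local, 256, vcpus))   # 6.0
print(pool_needed_gb(vcpus, local, 8))  # 384.0
```

In other words, in this made-up case a 384 GB slice of pooled DRAM would let a lean 2GB/vCPU host behave like an 8GB/vCPU one, without buying that DRAM for every server in the fleet.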

The release of CXL 2.0 in November 2020 introduced memory pooling with multiple logical devices, which Cadence’s Khan said is a key improvement to the specification. “This pooling capability allowed resource sharing, including system memory, across multiple systems. While CXL was designed for accelerators, it supports a memory interface, as well. Tiered configurations also can support heterogeneous memories: high-bandwidth memory on the package, fast DDR5 connected to the processor, and slower memories over a CXL module. Memory is a significant cost item for data centers, and pooling is an efficient way to manage systems.”
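A toy model can make the pooling idea concrete. The sketch below assumes a single pooled memory device carved into logical devices, with each host bound to at most one of them; the class name, host names, and first-come allocation policy are hypothetical simplifications. In a real CXL 2.0 system the binding is handled through a switch and a fabric manager, not a Python dictionary. The cap of 16 logical devices reflects the CXL 2.0 maximum for a multi-logical device.

```python
from dataclasses import dataclass, field
from typing import Dict

MAX_LOGICAL_DEVICES = 16  # CXL 2.0 cap on logical devices per multi-logical device


@dataclass
class PooledMemoryDevice:
    """Toy model of a pooled CXL memory device split into per-host logical devices."""
    capacity_gb: int
    bindings: Dict[str, int] = field(default_factory=dict)  # host -> GB assigned

    def free_gb(self) -> int:
        return self.capacity_gb - sum(self.bindings.values())

    def assign(self, host: str, gb: int) -> None:
        # Simplification: one logical device per host.
        if host in self.bindings:
            raise ValueError(f"{host} already has a logical device")
        if len(self.bindings) >= MAX_LOGICAL_DEVICES:
            raise RuntimeError("no logical devices left on this device")
        if gb > self.free_gb():
            raise RuntimeError("not enough pooled capacity")
        self.bindings[host] = gb

    def release(self, host: str) -> int:
        # Capacity returns to the pool and can be reassigned to another host.
        return self.bindings.pop(host)


pool = PooledMemoryDevice(capacity_gb=1024)
pool.assign("host-a", 256)
pool.assign("host-b", 512)
print(pool.free_gb())  # 256
pool.release("host-a")
print(pool.free_gb())  # 512
```

The point of the model is the `release` step. Capacity that would otherwise be stranded inside one server goes back to a shared pool, where the next job can claim it.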

Fig. 1: CXL 2.0 introduced memory pooling with single and multiple logical devices. Source: Cadence

CXL and customization

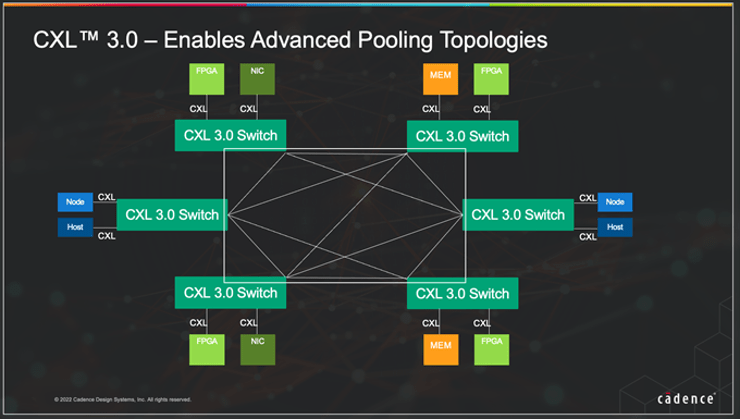

Last year’s introduction of CXL 3.0 takes things a step further, with fabric-like implementation via multi-level switching. “This allows for the implementation of Global Fabric Attached Memory, which disaggregates the memory pools from the processing units,” said Khan. “The memory pools also can be heterogeneous, with various types of memory. In the future, we can envisage a leaf/spine architecture with leaves for NIC, CPU, memory, and accelerators, with an interconnected spine switch system built around CXL 3.0.”

Fig. 2: CXL 3.0 offers fabric-like implementation with multi-level switching. Source: Cadence

This is relevant for data centers because there is not a one-size-fits-all system architecture in the AI/HPC world.

Khan explained that today’s servers provide a reasonable superset of what these applications might need, frequently resulting in under-utilization and wasted energy. “Heterogeneous applications demand very distinct solutions for optimized implementations. Common application workloads for HPC/AI/ML each have different system needs. The vision of disaggregated systems is to build large banks of resources: memory, GPUs, compute, and storage resources to build flexible, composable architectures as needed. In other words, CXL is paving the way for disaggregated and composable systems by enabling these features.”
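Khan’s point about servers built as a superset can be put in rough numbers. The sketch below compares the memory provisioned when every server is sized for the largest workload with the memory provisioned when each workload draws exactly what it needs from a shared bank. The workload sizes and function names are hypothetical. The composed case deliberately ignores headroom, allocation granularity, and the added latency of fabric-attached memory.

```python
from typing import List


def provisioned_superset(demands_gb: List[int]) -> int:
    """Every server is built for the largest workload (the 'reasonable superset')."""
    if not demands_gb:
        return 0
    return len(demands_gb) * max(demands_gb)


def provisioned_composed(demands_gb: List[int]) -> int:
    """Each workload draws exactly what it needs from a shared memory bank."""
    return sum(demands_gb)


demands = [64, 128, 512, 96]  # hypothetical per-workload memory needs, in GB
superset = provisioned_superset(demands)  # 4 * 512 = 2048
composed = provisioned_composed(demands)  # 800
print(f"superset: {superset} GB, utilization {composed / superset:.0%}")  # 39%
print(f"composed: {composed} GB")
```

Real deployments would land somewhere between the two figures, because pools need spare capacity and not every workload tolerates the extra latency of CXL-attached memory.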

CXL’s memory paradigm also opens the door to new custom CXL devices, such as pooled memory controllers.

“Another emerging use case would be heterogeneous computing, utilizing cache coherency within the CXL devices for memory sharing between the host CPU and the CXL attached device. The programming model here is still being worked out, but the goal is to be able to share larger datasets between the host and an accelerator, which is very appealing for things like ML training. For the host of custom AI chips in development and for GPUs/NPUs, this could be an attractive option,” Defilippi said.

When it comes to CXL in custom chip designs for data centers, Keysight’s Asay noted that these designs must ensure interoperability with the CXL specification if they want cache coherence or access to certain shared memory resources. “A common custom chip design is the SmartNIC, where CXL has become very prevalent as a technology for transmitting data.”

Security matters too. Synopsys’ Agrawal expects security features at the transaction and system levels to drive custom designs for data-sensitive applications, given that multiple companies are developing their own application-level interfaces over CXL to optimize their designs.

Conclusion

There are other customization possibilities within the broader memory ecosystem as it relates to data centers and HPC, including combining open standards to create new products.

Blueshift Memory is the U.K.-based chip startup behind an alternative memory architecture called Cambridge Architecture. The company is using RISC-V and CXL to deploy its technology. CEO and CTO Peter Marosan said using those open standards allowed the company to save $10 million in potential expenditures on an off-the-shelf CPU from a manufacturer, and “opened the gate to the market for us and our whole cohort.”

As for what’s on the horizon, Gary Ruggles, senior product marketing manager at Synopsys, said he’s beginning to see the first inquiries for both CXL 2.0 and CXL 3.0 from the automotive sector. “When you look at cars now, they’re roaming supercomputers. It shouldn’t be shocking that these folks are looking at the exact same things we’re looking at in the data center.”
