The amount of power consumed by analog circuits is causing increasing concern as digital power drops, but analog designers have few tools to help them.

Analog circuitry is usually a small part of a large SoC, but it does not scale in the same way as digital circuitry under Moore’s Law. The power consumed by analog is becoming an increasing concern, especially for battery-operated devices. At the same time, little automation is available to help analog designers reduce consumption.

“Newer consumer devices, like smartphones and wearables, along with IoT nodes are resulting in an increasing number of sensors being built into these devices,” says Björn Zeugmann, member of the Integrated Sensor Electronics research group at Fraunhofer IIS’ Engineering of Adaptive Systems Division. “These devices also need to be very low power, and thus there is growing importance for power optimization within analog circuits.”

These are big markets. Joe Hoffman, director for intelligent edge and sensor technologies at SAR Insight and Consulting, provides shipment figures for battery-powered devices over 2020 to 2025: “Smartphones, 11 billion; wearables, 2.5 billion; and portable speakers and remote-control devices, 2.2 billion.”

Optimizing analog for power is a tough problem. “Analog design is a very complex and multi-dimensional problem,” says Mo Faisal, president and CEO of Movellus. “If you want to optimize an op-amp, you have to optimize 20 variables in parallel. This is where human intuition becomes very important, and that’s where the analog designers are artists and have built that experience over the years.”

Analog designers care about two kinds of power. “They look at the peak power, and they look at average power,” says Faisal. “The reason for that is that peak current is what defines how you’re going to design your package and your power grid. Average power basically defines your power consumption and impacts battery size or other things for your chip.”
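To make the distinction concrete, here is a minimal Python sketch that takes a piecewise-constant supply-current profile and reports both numbers. The profile values, the 200 mAh battery, and the function names are illustrative assumptions, not figures from the article, and the battery-life estimate ignores self-discharge and voltage droop.

```python
from dataclasses import dataclass

@dataclass
class Segment:
    current_ma: float   # supply current during this segment, in mA
    duration_s: float   # how long the segment lasts, in seconds

def peak_current_ma(profile):
    """Peak current: what the package and power grid must be sized for."""
    return max(seg.current_ma for seg in profile)

def average_current_ma(profile):
    """Time-weighted average current: what drains the battery."""
    total_charge = sum(seg.current_ma * seg.duration_s for seg in profile)
    total_time = sum(seg.duration_s for seg in profile)
    return total_charge / total_time

def battery_life_hours(capacity_mah, profile):
    """Idealized runtime: capacity divided by average current."""
    return capacity_mah / average_current_ma(profile)

# Hypothetical profile: a 10 ms burst at 12 mA, then 990 ms idle at 50 uA.
profile = [Segment(12.0, 0.010), Segment(0.05, 0.990)]
print(peak_current_ma(profile))            # sizes the power grid
print(average_current_ma(profile))         # sets battery drain
print(battery_life_hours(200.0, profile))  # assumed 200 mAh cell
```

The two metrics can differ by almost two orders of magnitude for the same block, which is why designers track them separately.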

In some cases, total energy is the metric. “There’s a standard figure of merit for analog-to-digital converters (ADCs),” says Art Schaldenbrand, senior product manager at Cadence. “The number they worry about is the number of Joules or the energy per conversion — how much does that cost. ADC designers and analog designers do understand energy.”
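Energy per conversion is often normalized to resolution. One widely used version is the Walden figure of merit, P / (2^ENOB × f_s), with ENOB derived from the measured SNDR. The Python sketch below computes both quantities for a made-up ADC; the power, sample rate, and SNDR are assumptions chosen only for illustration, and Schaldenbrand does not say which figure of merit he has in mind.

```python
def energy_per_conversion(power_w, sample_rate_hz):
    """Energy spent on one conversion, in joules."""
    return power_w / sample_rate_hz

def enob(sndr_db):
    """Effective number of bits from measured SNDR (ideal-quantizer relation)."""
    return (sndr_db - 1.76) / 6.02

def walden_fom(power_w, sample_rate_hz, sndr_db):
    """Walden figure of merit: energy per effective conversion step (J/step)."""
    return power_w / (2 ** enob(sndr_db) * sample_rate_hz)

# Hypothetical ADC: 1 mW at 100 MS/s with 62 dB SNDR.
p, fs, sndr = 1e-3, 100e6, 62.0
print(f"{energy_per_conversion(p, fs) * 1e12:.1f} pJ per conversion")
print(f"{walden_fom(p, fs, sndr) * 1e15:.2f} fJ per conversion step")
```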

Battery-powered devices such as wearables and IoT nodes often have tiny batteries or must run for a long time on a single charge.

“As a designer, be it digital or analog, you try to strike a balance between speed and performance,” says Farzin Rasteh, senior manager for analog and mixed signal verification at Synopsys. “You want to have higher throughput, faster chips and lower power. There are also considerations for reliability. The higher the current density, the higher the chances over time that the chip gets damaged because of electromigration (EM). That is an incentive to lower the power.”
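The link between current density and EM lifetime is commonly modeled with Black's equation, MTTF = A·J^(-n)·exp(Ea/kT). The sketch below computes how lifetime changes when current density or temperature changes. The exponent n = 2 and the activation energy of 0.9 eV are assumed placeholder values; real numbers come from the foundry's reliability data.

```python
import math

BOLTZMANN_EV = 8.617333262e-5  # Boltzmann constant in eV/K

def mttf_scale(j_ratio, t_old_k, t_new_k, n=2.0, ea_ev=0.9):
    """Ratio MTTF_new / MTTF_old from Black's equation.

    Black's equation: MTTF = A * J**(-n) * exp(Ea / (k * T)).
    j_ratio is J_new / J_old; the prefactor A cancels out.
    n = 2 and Ea = 0.9 eV are assumed, process-dependent values.
    """
    current_term = j_ratio ** (-n)
    thermal_term = math.exp(ea_ev / BOLTZMANN_EV * (1.0 / t_new_k - 1.0 / t_old_k))
    return current_term * thermal_term

# Halving current density at a fixed 378 K (105 C) junction temperature.
print(mttf_scale(0.5, 378.0, 378.0))
# Halving current density and also running 10 K cooler.
print(mttf_scale(0.5, 378.0, 368.0))
```

With n = 2, halving the current density alone quadruples the expected lifetime, and the lower temperature that usually comes with lower power adds to the gain.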

Large SoCs are often built in newer process nodes that do not favor analog circuitry. “The trade-off between analog and digital power becomes a key system consideration,” says Eric Downey, senior analog IC design engineer for Adesto Technologies. “That ratio depends on the process node. Digital power scales with process node, but analog power does not scale to the same degree.”
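A common first-order argument shows why. For a noise-limited analog stage, the minimum power is set by the required SNR and bandwidth rather than by the supply voltage, and it gets worse when a lower supply leaves less room for signal swing. The Python sketch below works this through for a sampling capacitor limited by kT/C noise. The swing fractions, SNR, frequency, and the C·Vdd²·f power estimate are simplifying assumptions, not numbers from Downey.

```python
BOLTZMANN = 1.380649e-23  # J/K

def kt_c_limited_power(snr_db, freq_hz, vdd, swing_fraction, temp_k=300.0):
    """First-order power of a kT/C-noise-limited sampling stage.

    For a full-scale sine with peak-to-peak swing Vpp, the signal power is
    Vpp**2 / 8 and the sampled noise power is kT/C, so the capacitor must be
    C = 8 * k * T * SNR / Vpp**2. Charging it from the supply once per cycle
    is assumed to cost roughly C * Vdd**2 * f.
    """
    snr = 10 ** (snr_db / 10)
    vpp = swing_fraction * vdd
    cap = 8 * BOLTZMANN * temp_k * snr / vpp ** 2
    return cap * vdd ** 2 * freq_hz

# Same 70 dB, 100 MHz requirement in two hypothetical nodes.
p_old = kt_c_limited_power(70, 100e6, vdd=1.8, swing_fraction=0.8)
p_new = kt_c_limited_power(70, 100e6, vdd=0.8, swing_fraction=0.5)
print(f"{p_old * 1e6:.0f} uW at 1.8 V, {p_new * 1e6:.0f} uW at 0.8 V")
```

Because Vdd cancels, the power reduces to 8kT·SNR·f divided by the square of the swing fraction: lowering the supply saves nothing, and losing swing headroom costs power.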

The problem can be looked at in various ways. “There are different approaches exploited for minimizing the consumption of analog circuits,” says Armin Tajalli, SerDes analog architect for Kandou. “They include employing advanced circuit topologies and block-level optimization, proper choice of system architecture, and proper choice of signaling scheme that allows reducing circuit complexity, such as equalization.”

But no accepted methodologies exist for designing low-power analog circuits. “You have to potentially re-architect your circuit to meet your power requirements or make some other adaptations,” says Cadence’s Schaldenbrand. “They get these conflicting specifications wanting both higher performance and lower power, and they have to come up with an architectural design. That is very important because that’s where they’re making the decisions about how they are going to achieve this performance.”

Top-down or bottom-up?

As with many design problems, there are multiple ways to tackle it. One approach is bottom-up. “In a bottom-up process, they pick a technology and see if that technology supports low power, lower voltages, or multiple voltages,” says Synopsys’ Rasteh. “When you design, you try to keep the transistors as small as possible, while not violating your timing and performance requirements. You hand it to the person who has to do custom layout. They use extraction tools to extract parasitics and bring it back to your design. That person also has to make sure power grids and power nets are wide enough to prevent overheating, to prevent EM issues, to lower IR drop and power dissipation.”

Others take a holistic approach. “One of the things you start with is looking at the whole picture to see what gets better,” says Schaldenbrand. “This is especially true as you move down the process nodes, where the digital gets better. The question becomes, ‘How can I take advantage of that to make my analog performance improve?’ If you are designing an ADC, you might want to look at successive approximation ADCs, which were used a lot in the ’70s and ’80s, and take advantage of the faster digital logic to replace pipeline ADCs.”
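A successive-approximation ADC is essentially a binary search driven by a comparator and a small block of digital logic, which is why it benefits from faster, cheaper gates at advanced nodes. The idealized Python model below ignores DAC mismatch, comparator noise, and settling; it only illustrates the search.

```python
def sar_convert(vin, vref, bits):
    """Ideal successive-approximation conversion (binary search).

    Each step tentatively sets the next bit, compares the input against the
    ideal DAC output for that trial code, and keeps the bit only if the input
    is at or above it. An N-bit conversion needs exactly N comparisons.
    """
    code = 0
    for bit in reversed(range(bits)):
        trial = code | (1 << bit)
        dac_voltage = trial * vref / (1 << bits)  # ideal DAC, no mismatch
        if vin >= dac_voltage:  # the comparator decision
            code = trial
    return code

print(sar_convert(0.5, 1.0, 8))   # mid-scale input
print(sar_convert(0.3, 1.0, 10))
```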

Many designers prefer a top-down approach. “The choice of system architecture is likely to have the biggest impact on power consumption,” says Fraunhofer’s Zeugmann. “For sensors, this means only having the sensors and data converters powered up when necessary. It means having systems that are adaptable so that during runtime they can optimize measurement speed, rate and quality. That means they only measure what’s really needed in different cases and avoids oversampling.”

There are many times when you have to look at all of these and make tradeoffs. “The designer has to make smart choices with the architecture, and the circuit topology to reduce current consumption,” says Nebabie Kebebew, senior product manager for AMS verification at Mentor, a Siemens Business. “This is often done by minimizing the supply voltage, by reducing circuit complexity, or minimizing clocking frequency. All of these contribute to power, and then they have to consider switching rates and the capacitance of the switching nodes. As a designer, they would be looking at various areas to minimize the power consumption.”
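For the switching parts of a mixed-signal block, the levers Kebebew lists map onto the familiar dynamic-power relation P = αCV²f, where α is the switching activity and C the switched capacitance. The short Python sketch below uses made-up values to show why the supply voltage is the strongest of those levers: power falls with its square, but only linearly with frequency or capacitance.

```python
def dynamic_power(activity, cap_f, vdd, freq_hz):
    """Switching power P = alpha * C * V^2 * f, in watts."""
    return activity * cap_f * vdd ** 2 * freq_hz

base = dynamic_power(0.1, 100e-12, 0.9, 1e9)
lower_v = dynamic_power(0.1, 100e-12, 0.72, 1e9)   # supply cut by 20%
lower_f = dynamic_power(0.1, 100e-12, 0.9, 0.8e9)  # clock cut by 20%
print(f"baseline {base * 1e3:.2f} mW")
print(f"-20% Vdd: {lower_v / base:.2f}x, -20% f: {lower_f / base:.2f}x")
```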

Technology constraints

One way to reduce power is to reduce voltage. “That’s a huge issue for analog designers, because traditionally the way to get gain is to stack up multiple transistors,” says Schaldenbrand. “But if you’re at 0.8 volts, you can’t stack more than two transistors. So now you have to come up with a way to get high gain without having the ability to stack devices, or you have to come up with a new architecture.”
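The headroom squeeze can be seen with simple arithmetic. The sketch below is a deliberately crude model that assumes each stacked transistor needs a fixed saturation voltage and that the required signal swing must fit in the rest of the supply. With the assumed 0.4 V swing and 150 mV per device, it reproduces the two-device limit at 0.8 V.

```python
import math

def max_stacked_devices(vdd, signal_swing, vdsat):
    """First-order count of saturated transistors that fit under the supply.

    Each stacked device is assumed to need at least vdsat across it to stay
    in saturation, and the output swing must fit in whatever is left.
    """
    headroom = vdd - signal_swing
    if headroom <= 0:
        return 0
    return math.floor(headroom / vdsat)

# Assumed: 0.4 V of output swing and 150 mV saturation voltage per device.
for vdd in (1.8, 1.2, 0.8):
    print(vdd, max_stacked_devices(vdd, 0.4, 0.15))
```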

New technologies introduce additional issues. “The more advanced nodes that you go to, the harder it gets because, first of all your device sizes are discrete,” says Movellus’ Faisal. “That is a challenge when you no longer have flexibility on your device size. Secondary and tertiary effects come into play. Short-channel effects come into play. Extra heating and aging come into play. All those things really exacerbate the problem statement of analog design.”

But perhaps the biggest issue is variability. “I get a wafer and I have a lot of variations on it,” says Rasteh. “Will those process variations demolish all of my assumptions when I designed the chip? Will timing and power be thrown out of the window? The smaller the node, the more extensive your variation.”

And that can have catastrophic effects. “When you combine process variation with low power, a transistor that was behaving nicely at nominal is no longer working,” says Mentor’s Kebebew. “What happens is it goes into the saturation region. It can produce timing variations on a critical circuit path, or you can have smaller read/write noise margins for an SRAM memory cell. To address this, some designers build in margins so they can cope with the performance loss introduced by process variation, but this requires larger area and higher power consumption.”

Faisal explains how much worse this is becoming. “In the older nodes — older than 28nm — the process variability from slow corner to fast used to be less than 2X. Now, in advanced nodes, we’re seeing more than 3X or 4X, depending on the operating region. Analog designs have to guarantee performance and functionality across PVT, and so you end up paying for that through power and area.”

That power impact can be significant. “In the design process, the extra power required to ensure a circuit performs within spec at an extreme corner can be 1.5X the power required for a nominal corner,” says Adesto’s Downey.

While technology makes some things worse, it can help in other ways. “There are some things that actually get better,” says Schaldenbrand. “It turns out that as you scale down, capacitor matching is pretty good. This is because the industry has transitioned from traditional metal-insulator-metal area-based capacitors to finger capacitors. The finger capacitors provide very good matching, even down to very small sizes, and don’t degrade with size.”

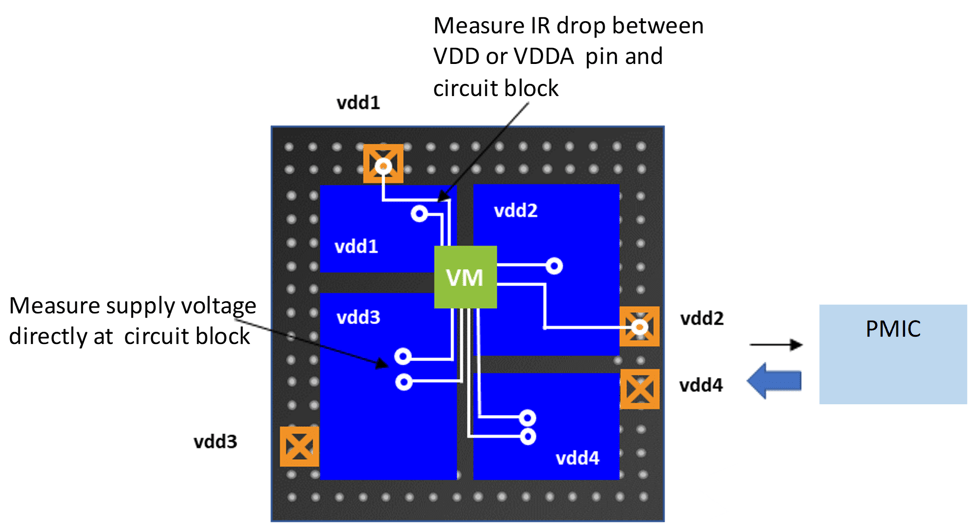

The ability to add sensors also can mean better control over voltage. “One important area where analog power can be optimized is to monitor the IR drops and control the PMIC supply voltages,” says Richard McPartland, technical marketing manager for Moortec. “With 2.5D and 3D packaging now mainstream, and with large power-hungry chips housing multiple die, it is challenging to design good power distribution networks. Embedding voltage monitors enables one to see and accurately measure the supply voltages at critical circuit blocks. Then one can optimize and reduce the voltage guard bands, saving power while ensuring reliable operation of both analog and digital circuits.”

Fig 1: Reducing voltage variation by controlling IR drop. Source: Moortec
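A minimal sketch of the guard-band idea, assuming the monitors report the worst-case IR drop seen at each block: the supply set-point only needs to cover the measured drop plus a small margin, instead of a blind worst-case guard band. All voltages below are hypothetical, and the V² scaling applies only to dynamic power.

```python
def required_setpoint(v_min, measured_droops, margin):
    """Supply set-point that keeps the worst monitored block above v_min."""
    return v_min + max(measured_droops) + margin

def relative_dynamic_power(v_new, v_old):
    """Dynamic power scales with V^2 at fixed frequency and activity."""
    return (v_new / v_old) ** 2

V_MIN = 0.75          # assumed minimum voltage the circuits need
STATIC_GUARD = 0.10   # assumed blind guard band without monitoring
droops = [0.030, 0.045, 0.020]  # hypothetical IR drops read from monitors

v_static = V_MIN + STATIC_GUARD
v_monitored = required_setpoint(V_MIN, droops, margin=0.015)
print(f"set-point {v_static:.3f} V -> {v_monitored:.3f} V")
print(f"dynamic power x{relative_dynamic_power(v_monitored, v_static):.3f}")
```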

Another power-saving trend is to convert what were traditionally analog circuits into digital ones. “Consider the power supply,” says Schaldenbrand. “How do you build a regulator that runs at 0.8 volts? One way is to digitize it. Today there are all-digital low-dropout regulators. They are just a comparator, the logic, and power transistors that get switched in and out to generate these power supplies.”
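The control loop of such a regulator can be modeled in a few lines. The Python sketch below assumes a bank of identical power switches modeled as conductances feeding a resistive load, and a clocked comparator that turns one more switch on when the output is below the reference and one off otherwise. Real designs add output capacitance, coarse/fine loops, and limit-cycle control, all omitted here; the component values are hypothetical.

```python
def simulate_digital_ldo(v_in=1.0, v_ref=0.8, n_switches=64,
                         g_switch=0.01, r_load=20.0, cycles=200):
    """Clocked digital LDO: comparator + up/down counter + switch bank.

    Each cycle, the output is computed as a conductance divider between the
    enabled switches and the load (no output capacitor is modeled), then the
    comparator decides whether to enable or disable one more switch.
    """
    n_on = 0
    trace = []
    for _ in range(cycles):
        g_on = n_on * g_switch
        v_out = v_in * g_on / (g_on + 1.0 / r_load)
        trace.append(v_out)
        if v_out < v_ref and n_on < n_switches:
            n_on += 1   # output low: switch in another power device
        elif v_out >= v_ref and n_on > 0:
            n_on -= 1   # output at/above target: switch one out
    return trace

trace = simulate_digital_ldo()
print(f"settled output: {min(trace[-10:]):.3f} V to {max(trace[-10:]):.3f} V")
```

The output settles into a small limit cycle around the reference, one switch step wide, which is the characteristic ripple of this simple bang-bang loop.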

The same is happening to the PLLs. “With a digital architecture you don’t have to precisely bias the transistor in saturation for eternity until end of life for the chip,” says Faisal. “The noise margins are high, so you can push the envelope in terms of performance, as well as eliminating some of these variation challenges and some of the biasing challenges, and even matching challenges.”

Some designers are looking to other power-saving techniques. “Sub-threshold design offers significantly reduced power consumption, but is not without challenges,” says Adesto’s Downey. “It may be necessary to redesign legacy circuits, simulation models may not be accurate for sub-threshold design, and circuits can be sensitive to leakage.”
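Both the appeal and the risk follow from the exponential current-voltage law in weak inversion. The Python sketch below uses the textbook relations gm/ID = 1/(n·U_T) and I_D ∝ exp((V_GS − V_TH)/(n·U_T)); the slope factor n = 1.4 and the 30 mV threshold shift are assumed example values. The high gm/ID is why sub-threshold circuits are so power-efficient, and the exponential sensitivity is why leakage and variation are hard to control.

```python
import math

BOLTZMANN = 1.380649e-23           # J/K
ELECTRON_CHARGE = 1.602176634e-19  # C

def thermal_voltage(temp_k=300.0):
    """U_T = kT/q, in volts."""
    return BOLTZMANN * temp_k / ELECTRON_CHARGE

def gm_over_id(n=1.4, temp_k=300.0):
    """Transconductance efficiency in weak inversion: gm/ID = 1 / (n * UT)."""
    return 1.0 / (n * thermal_voltage(temp_k))

def current_ratio_for_vth_shift(delta_vth, n=1.4, temp_k=300.0):
    """ID ~ exp((VGS - VTH) / (n * UT)): factor by which a VTH drop raises ID."""
    return math.exp(delta_vth / (n * thermal_voltage(temp_k)))

print(f"gm/ID = {gm_over_id():.1f} S/A")
print(f"30 mV lower VTH -> {current_ratio_for_vth_shift(0.030):.2f}x current")
```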

And while some people are digitizing analog, the reverse also can make sense. “By using sub-threshold circuits for ultra-low power, analog machine learning chips can remain always on at very low power and can inference while the sensor data is still in its natural analog form,” says SAR Insight’s Hoffman. “This cascaded system architecture uses just a little power to determine data relevance before using more power on digitization and further analysis.”

Analog optimization

Unlike digital design, analog analysis and optimization get little help from tools. Most designers rely on extensive SPICE simulation. “With FinFET, not only do they have to deal with process variation, but there’s a lot more parasitics to deal with,” says Kebebew. “Running the number of process, voltage and temperature corners required and the parametric variation becomes significantly more difficult. We’re talking about simulations in the millions.”

Thankfully, EDA tools have managed to reduce the number of simulations required. “Instead of running 10,000 random simulations and getting 95% confidence that you are within 98% of the margin of my spec, you can do the same thing with perhaps 200 runs, and not lose a lot of confidence,” says Rasteh. “You sacrifice a few percent of confidence for days of saved time in running simulations.”
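The vendors' sampling algorithms are proprietary, but the underlying statistics can be illustrated with a standard binomial result. If n Monte Carlo samples all pass, you can claim with confidence C that the true yield is at least Y when Y^n ≤ 1 − C. The Python sketch below solves for n; it is a generic textbook bound, not the method used by any particular EDA tool.

```python
import math

def zero_failure_samples(yield_target, confidence):
    """Samples that must all pass to claim yield >= target at the given confidence.

    Derived from P(all n pass | yield = Y) = Y**n <= 1 - confidence.
    """
    return math.ceil(math.log(1.0 - confidence) / math.log(yield_target))

for y in (0.98, 0.99, 0.999):
    print(y, zero_failure_samples(y, 0.95))
```

Plain random sampling needs roughly 3/(1 − Y) passing runs at 95% confidence, so smarter sampling matters most when the yield target is high.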

Once the worst-case corners have been located, you then can look for potential optimizations. “You need to understand how sensitive each transistor is to process variation,” says Kebebew. “You need to handle the sensitivity of your analog circuit to process variation, because that’s impacting your power consumption. Optimization consists of running device size sweeping. By running these simulations, you get feedback on how your design is performing at the worst-case PVT corners that you captured earlier. That feedback, coupled with the designer’s insight, enables them to make tradeoffs to meet low-power consumption goals.”
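A toy version of that loop is sketched below. It assumes a square-law transistor whose transconductance sets an amplifier's gain-bandwidth, GBW = gm / (2π·C_L), with hypothetical multipliers standing in for the slow, typical, and fast corners. For simplicity it sweeps bias current rather than device geometry, and keeps the smallest current that meets the spec at every corner.

```python
import math

# Hypothetical process corners: multipliers on the nominal mu*Cox*W/L.
CORNERS = {"ss": 0.7, "tt": 1.0, "ff": 1.3}
K_NOM = 400e-6   # A/V^2, assumed nominal device transconductance parameter
C_LOAD = 1e-12   # F, assumed load capacitance

def gbw_hz(i_bias, k):
    """Gain-bandwidth from a square-law device: gm = sqrt(2 * k * I)."""
    gm = math.sqrt(2.0 * k * i_bias)
    return gm / (2.0 * math.pi * C_LOAD)

def min_bias_for_spec(gbw_spec_hz, currents, corners):
    """Smallest swept bias current that meets the spec at every corner."""
    for i_bias in sorted(currents):
        if all(gbw_hz(i_bias, K_NOM * m) >= gbw_spec_hz for m in corners.values()):
            return i_bias
    return None  # spec not reachable within the sweep

sweep = [step * 1e-6 for step in range(1, 201)]  # 1 uA to 200 uA
i_all = min_bias_for_spec(20e6, sweep, CORNERS)
i_tt = min_bias_for_spec(20e6, sweep, {"tt": 1.0})
print(f"all corners: {i_all * 1e6:.0f} uA, typical only: {i_tt * 1e6:.0f} uA")
```

Even in this toy, covering the slow corner costs noticeably more current than meeting the spec at the typical corner alone.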

Powering down

Digital circuitry aggressively powers down circuits that are not being used. “Power management techniques, such as the ability to dynamically power-down analog circuits when not needed, and quickly wake them up, can have a dramatic effect on the overall power budget,” says Downey. “Adding programmability to allow performance to be changed in different modes can help save power, although this adds to the design effort and test complexity.”
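The payoff from power-down depends on how long the circuit stays asleep and what each wake-up costs. The Python sketch below computes the average power of a duty-cycled block, including a fixed energy per wake-up; every number in it is a hypothetical placeholder.

```python
def duty_cycled_power(p_active_w, p_sleep_w, t_active_s, period_s, e_wake_j=0.0):
    """Average power of a block that wakes once per period.

    Energy per period = active energy + sleep energy + wake-up overhead.
    """
    if not 0 <= t_active_s <= period_s:
        raise ValueError("active time must fit inside the period")
    energy = (p_active_w * t_active_s
              + p_sleep_w * (period_s - t_active_s)
              + e_wake_j)
    return energy / period_s

# Hypothetical sensor front end: 2 mW for 5 ms every second, 2 uW asleep,
# 1 uJ spent biasing up and settling on each wake-up.
always_on = 2e-3
cycled = duty_cycled_power(2e-3, 2e-6, 5e-3, 1.0, e_wake_j=1e-6)
print(f"{cycled * 1e6:.2f} uW average, {always_on / cycled:.0f}x below always-on")
```

The wake-up term is why fast, low-energy wake-up matters: if the block wakes often, that overhead can dominate the average.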

In some cases, one circuit is used while another is powered down. “Systems often place a device into a sleep mode while low-power sentinels watch for a trigger event,” says Hoffman. “Wake sounds and words will become more complex wake phrases along with contextual events. An example is a voice-first device, which can determine whether speech is present before wasting power digitizing the sound and turning on the higher-power wake-word engine/digital neural network for full keyword analysis.”

Variable performance could be a viable option. “Even when you’re sleeping, you still need the heartbeat,” says Faisal. “You need the clock to keep running, so that you can wake up when needed. But you may not need the multi gigahertz frequency that the main PLL was built for in sleep mode. In sleep mode everything is slower. You could reduce sleep mode power 10X, simply by adding a sleep-mode PLL instead of using the mission-mode PLL in sleep mode.”

Calibration

Calibration is another way to counter variation. “Digital calibration can remove the effects of variation and allow the designer to reduce the power consumed by the analog circuits,” says Downey. “Calibration techniques, which are performed in the foreground to optimize the analog operating point at start-up or in the factory, do not cost operating power. But they do have an effect on the design and test effort. Background calibration, which corrects the analog output continuously, can result in consumption of some extra digital power. As we go to smaller process nodes, the power of the digital calibration can be much lower than the power and area costs of a high-performance analog part. Analog design effort can be reduced, too. For example, if digital calibration allows relaxation of critical circuit parameters like matching, a design iteration post layout to fix these matching errors could be avoided.”
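As a simple illustration of foreground calibration, the sketch below assumes the analog path has an unknown linear gain and offset error. Two known reference inputs are measured once, at start-up or in the factory, and the resulting correction is then applied digitally to every sample. The error values are hypothetical, and real calibrations often also address nonlinearity, which this ignores.

```python
def two_point_calibration(x_lo, raw_lo, x_hi, raw_hi):
    """Solve raw = gain * x + offset from two known reference inputs."""
    gain = (raw_hi - raw_lo) / (x_hi - x_lo)
    offset = raw_lo - gain * x_lo
    return gain, offset

def correct(raw, gain, offset):
    """Digital correction applied to each subsequent measurement."""
    return (raw - offset) / gain

def uncalibrated_front_end(x):
    """Hypothetical analog path with 5% gain error and 20 mV offset."""
    return 1.05 * x + 0.020

gain, offset = two_point_calibration(0.1, uncalibrated_front_end(0.1),
                                     0.9, uncalibrated_front_end(0.9))
raw = uncalibrated_front_end(0.5)
print(f"raw {raw:.4f} -> corrected {correct(raw, gain, offset):.4f}")
```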

Conclusion

Optimization of analog is as much an art as analog design. Analog designers are constantly having to look at new ways to do the same task and to make improvements in the face of mounting challenges. However, while a few bright spots exist, they do this with tooling that has basically remained unchanged for decades.

