Semiconductor aging has moved from being a foundry issue to a user problem. As we get to 5nm and below, vectorless methodologies become too inaccurate.

The mechanisms that cause aging in semiconductors have been known for a long time, but the concept did not concern most people because the expected lifetime of parts was far longer than their intended deployment in the field. In a short period of time, all of that has changed.

As device geometries have become smaller, the issue has become more significant. At 5nm, aging analysis becomes an essential part of the development flow, with tools and flows evolving rapidly as new problems are discovered, understood and modeled.

“We have seen it move from being a boutique technology, used by specific design groups, into something that’s much more of a regular part of the sign-off process,” says Art Schaldenbrand, senior product manager at Cadence. “As we go down into these more advanced nodes, the number of issues you have to deal with increases. At half micron you might only have to worry about hot carrier injection (HCI) if you’re doing something like a power chip. As you go down below 180nm you start seeing things like negative-bias temperature instability (NBTI). Further down you get into other phenomena like self heating, which becomes a significant reliability problem.”

The ways of dealing with it in the past are no longer viable. “Until recently, designers very conservatively dealt with the aging problem by overdesign, leaving plenty of margin on the table,” says Ahmed Ramadan, senior product engineering manager for Mentor, a Siemens Business. “However, pushing designs to the limit is needed not only to achieve competitive advantage, but also to fulfill new application requirements given the diminishing benefits of transistor scaling. All of this calls for accurate aging analysis.”

While new phenomena are being discovered, the old ones continue to get worse. “The drivers of aging, such as temperature and electrical stress, have not really changed,” says André Lange, group manager for quality and reliability at Fraunhofer IIS’ Engineering of Adaptive Systems Division. “However, densely packed active devices with minimum safety margins are required to realize advanced functionality requirements. This makes them more susceptible to reliability issues caused by self-heating and increasing field strengths. Considering advanced packaging techniques with 2.5D and 3D integration, the drivers for reliability issues, especially temperature, will gain importance.”

Contributing factors

The biggest factor is heat. “Higher speeds tend to produce higher temperatures, and temperature is the biggest killer,” says Rita Horner, senior product marketing manager for 3D-IC at Synopsys. “Temperature exacerbates electromigration. The expected life can change exponentially with a tiny delta in temperature.”
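One common way to put numbers on that exponential sensitivity is the Arrhenius model, in which lifetime scales with exp(Ea / kT). The sketch below is illustrative only: the 0.7 eV activation energy and the temperatures are assumed values, not figures from the interviews or from any foundry, and real mechanisms each have their own activation energy.

```python
import math

BOLTZMANN_EV_PER_K = 8.617333262e-5  # Boltzmann constant in eV/K


def arrhenius_acceleration(t_use_c, t_stress_c, activation_energy_ev):
    """Return lifetime(t_use_c) / lifetime(t_stress_c) under the Arrhenius model."""
    t_use_k = t_use_c + 273.15
    t_stress_k = t_stress_c + 273.15
    exponent = (activation_energy_ev / BOLTZMANN_EV_PER_K) * (
        1.0 / t_use_k - 1.0 / t_stress_k
    )
    return math.exp(exponent)


# Assumed activation energy, chosen only for illustration.
EA_EV = 0.7

for hot_c in (95, 105, 125):
    factor = arrhenius_acceleration(85, hot_c, EA_EV)
    print(f"85 C -> {hot_c} C: lifetime shrinks by a factor of about {factor:.1f}")
```

Under these assumptions, a 10°C rise already costs a large fraction of the expected life, which is why hot spots matter far more than average die temperature suggests.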

This became a much bigger concern with finFETs. “In a planar CMOS process, heat can escape through the bulk of the device into the substrate fairly easily,” says Cadence’s Schaldenbrand. “But when you stand the transistor on its side and wrap it in a blanket, which is effectively what the gate oxide and gate acts like, the channel experiences greater temperature rise, so the stress that a device is experiencing increases significantly.”

A growing share of electronics is being deployed in hostile environments. “Semiconductor chips that operate in extreme conditions, such as automotive (150° C) or high elevation (data servers in Mexico City) have the highest risk of reliability and aging related constraints,” says Milind Weling, senior vice president of programs and operations at Intermolecular. “2.5D and 3D designs could see additional mechanical stress on the underlying silicon chips, and this could induce additional mechanical stress aging.”

Device attributes get progressively worse. “Over time, the threshold voltage of a device degrades, which means that it takes more time to turn the device on,” says Haran Thanikasalam, senior applications engineer for AMS at Synopsys. “One reason for this is negative-bias temperature instability. But as devices scale down, voltage scaling has been slower than geometry scaling. Today, we are reaching the limits of physics. Devices are operating somewhere around 0.6 to 0.7 volts at 3nm, compared to 1.2V at 40nm or 28nm. Because of this, the electric fields have increased. A large electric field over a very tiny device area can cause severe breakdown.”

This is new. “The way we capture this phenomenon is something called time-dependent dielectric breakdown (TDDB),” says Schaldenbrand. “You’re looking at how that field density causes devices to break down, and making sure the devices are not experiencing too much field density.”

The other primary cause of aging is electromigration (EM). “If you perform reliability simulation, like EM or IR drop simulation, not only do the devices degrade but you also have electromigration happening on the interconnects,” adds Thanikasalam. “You have to consider not only the devices, but also the interconnects between the devices.”
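Interconnect electromigration is commonly summarized with Black's equation, MTTF = A · J^(-n) · exp(Ea / kT), where J is current density and T is absolute temperature. The sketch below compares two operating points so that the prefactor A cancels. The current exponent of 2 and the 0.9 eV activation energy are assumed defaults for illustration, not values from the article or from a process design kit.

```python
import math

BOLTZMANN_EV_PER_K = 8.617333262e-5  # Boltzmann constant in eV/K


def relative_em_lifetime(j_ratio, t_ref_c, t_new_c,
                         current_exponent=2.0, activation_energy_ev=0.9):
    """Return MTTF(new) / MTTF(ref) per Black's equation.

    j_ratio is J_new / J_ref. The exponent and activation energy
    defaults are assumptions made for this example.
    """
    t_ref_k = t_ref_c + 273.15
    t_new_k = t_new_c + 273.15
    current_term = j_ratio ** (-current_exponent)
    thermal_term = math.exp(
        (activation_energy_ev / BOLTZMANN_EV_PER_K) * (1.0 / t_new_k - 1.0 / t_ref_k)
    )
    return current_term * thermal_term


# Doubling current density at constant temperature.
print(relative_em_lifetime(2.0, 100, 100))
# Same current density, wire running 20 C hotter.
print(relative_em_lifetime(1.0, 100, 120))
```

Because both terms multiply, a wire that carries more current and also sits in a hot spot is penalized twice, which is one reason device and interconnect effects have to be analyzed together.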

Analog and digital

When it comes to aging, digital is a subset of analog. “In digital, you’re most worried about drive, because that changes the rise and fall delays,” says Schaldenbrand. “That covers a variety of sins. But analog is a lot more subtle and gain is something you worry about. Just knowing that Vt changed by this much isn’t going to tell you how much your gain will degrade. That’s only one part of the equation.”

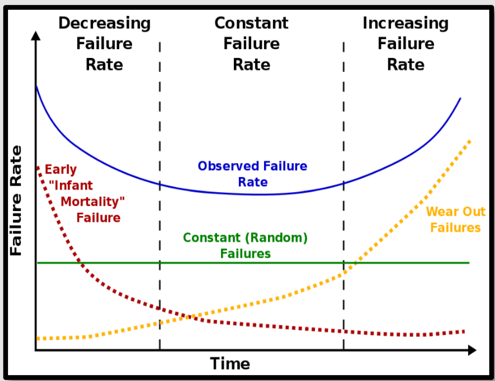

Fig 1: Failure of analog components over time. Source: Synopsys

Aging can be masked in digital. “Depending on the application, a system may just degrade, or it may fail from the same amount of aging,” says Mentor’s Ramadan. “For example, microprocessor degradation may lead to lower performance, necessitating a slowdown, but not necessarily failures. In mission-critical AI applications, such as ADAS, a sensor degradation may directly lead to AI failures and hence system failure.”

That simpler notion of degradation for digital often can be hidden. “A lot of this is captured at the cell characterization level,” adds Schaldenbrand. “So the system designer doesn’t worry about it much. If he runs the right libraries, the problem is covered for him.”

Duty cycle

To get an accurate picture of aging, you have to consider activity in the design, though often not in the way you might expect. “Negative-bias temperature instability (NBTI) is affecting some devices,” says Synopsys’ Horner. “But the devices do not have to be actively running. Aging can be happening while the device is shut off.”

In the past the analysis was done without simulation. “You can only get a certain amount of reliability data from doing static, vector independent analysis,” says Synopsys’ Thanikasalam. “This analysis does not care about the stimuli you give to your system. It takes a broader look and identifies where the problems are happening without simulating the design. But that is proving to be a very inaccurate way of doing things, especially at smaller nodes, because everything is activity-dependent.”
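To make the activity dependence concrete, the sketch below computes a stress duty cycle from sampled gate values. It assumes a PMOS device, which sees NBTI stress while its gate is held low, and two made-up stimulus traces. The function name and the traces are hypothetical; a production flow would extract this from simulation waveforms instead.

```python
def stress_duty_cycle(gate_samples, stressed_level=0):
    """Fraction of samples in which the gate sits at the stressing level."""
    if not gate_samples:
        raise ValueError("need at least one sample")
    stressed = sum(1 for value in gate_samples if value == stressed_level)
    return stressed / len(gate_samples)


# Two hypothetical workloads driving the same PMOS gate.
mostly_idle = [0] * 90 + [1] * 10   # gate parked low most of the time
toggling = [0, 1] * 50              # clock-like activity

print(stress_duty_cycle(mostly_idle))
print(stress_duty_cycle(toggling))
```

A vector-independent analysis has to pick a single number for this device. The two traces show how far apart the real values can be, and that the idle case is the more stressful one here.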

That can be troublesome for IP blocks. “The problem is that if somebody is doing their own chip, their own software in their own device, they have all the information they need to know, down to the transistor level, what that duty cycle is,” says Kurt Shuler, vice president of marketing at Arteris IP. “But if you are creating a chip that other people will create software for, or if you’re providing a whole SDK and they’re modifying it, then you don’t really know. Those chip vendors have to provide to their customers some means to do that analysis.”

For some parts of the design, duty cycles can be estimated. “You never want to find a block level problem at the system level,” says Schaldenbrand. “People can do the analysis at the block level, and it’s fairly inexpensive to do there. For an analog block, such as an ADC or a SerDes or a PLL, you have a good idea of what its operation is going to be within the system. You know what kind of stresses it will experience. That is not true for a large digital design, where you might have several operating modes. That will change digital activity a lot.”

This is the fundamental reason why it has turned into a user issue. “It puts the onus on the user to make sure that you pick stimulus that will activate the parts of the design that you think are going to be more vulnerable to aging and electromigration, and you have to do that yourself,” says Thanikasalam. “This has created a big warning sign among the end users because the foundries won’t be able to provide you with stimulus. They have no clue what your design does.”

Monitoring and testing

The industry approaches are changing at multiple levels. “To properly assess aging in a chip, manufacturers have relied on a function called burn-in testing, where the wafer is cooked to artificially age it, after which it can be tested for reliability,” says Syed Alam, global semiconductor lead for Accenture. “Heat is the primary factor for aging in chips, with usage a close second, especially for flash as there are only so many re-writes available on a drive.”

And this is still a technique that many rely on. “AEC-Q100, an important standard for automotive electronics, contains multiple tests that do not reveal true reliability information,” says Fraunhofer’s Lange. “For example, in high-temperature operating life (HTOL) testing, 3×77 devices have to be stressed for 1000 hours with functional tests before and after stress. Even when all devices pass, you cannot tell whether they will fail after 1001 hours or whether they will last 10X longer. This information can only be obtained by extended testing or simulations.”
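The limits of a zero-failure qualification test can be shown with simple binomial arithmetic. If n devices are stressed and none fail, the upper confidence bound on the failing fraction is 1 - (1 - CL)^(1/n). The sketch below applies that to the 3×77 sample size quoted above. The confidence levels are chosen for illustration, and the bound only covers the stress duration actually tested.

```python
def zero_failure_upper_bound(sample_size, confidence):
    """Upper bound on the failing fraction after observing zero failures."""
    if sample_size <= 0:
        raise ValueError("sample_size must be positive")
    if not 0.0 < confidence < 1.0:
        raise ValueError("confidence must be between 0 and 1")
    return 1.0 - (1.0 - confidence) ** (1.0 / sample_size)


devices = 3 * 77  # three lots of 77 devices
for confidence in (0.60, 0.90):
    bound = zero_failure_upper_bound(devices, confidence)
    print(f"{confidence:.0%} confidence: fewer than {bound:.2%} fail within the test")
```

At 90% confidence, a clean pass still leaves room for roughly one part in a hundred to fail within the tested stress time, and it says nothing about what happens afterward.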

An emerging alternative is to build aging sensors into the chip. “There are sensors, which usually contain a timing loop, and they will warn you when it takes longer for the electrons to go around a loop,” says Arteris IP’s Shuler. “There is also a concept called canary cells, where these are meant to die prematurely compared to a standard transistor. This can tell you that aging is impacting the chip. What you are trying to do is to get predictive information that the chip is going to die. In some cases, they are taking the information from those sensors, getting that off chip, throwing it into a big database and running AI algorithms to try to do predictive work.”
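A timing-loop monitor of the kind Shuler describes can be reduced to a comparison against a baseline. The sketch below is a minimal illustration that assumes a ring-oscillator frequency is read periodically. The function names, the readings and the 3% warning threshold are all hypothetical.

```python
def slowdown_percent(baseline_hz, measured_hz):
    """Percentage drop in loop frequency relative to the fresh baseline."""
    return 100.0 * (baseline_hz - measured_hz) / baseline_hz


def first_warning(baseline_hz, readings_hz, warn_at_percent=3.0):
    """Index of the first reading at or beyond the threshold, or None."""
    for index, measured_hz in enumerate(readings_hz):
        if slowdown_percent(baseline_hz, measured_hz) >= warn_at_percent:
            return index
    return None


# Hypothetical readings taken over the life of a part.
baseline = 1.0e9
readings = [1.0e9, 0.99e9, 0.975e9, 0.96e9]
print(first_warning(baseline, readings))
```

In a fleet setting, the same readings would be shipped off chip and trended across many devices instead of being checked against one fixed threshold.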

Market segments such as data centers and automotive demand extended reliability. “Traditional methods to enhance reliability are now being complemented by new techniques which leverage in-chip monitoring in mission mode to measure aging and capture other key information such as temperature and supply profiles throughout the die’s life,” says Richard McPartland, technical marketing manager for Moortec. “These can be used for predictive and adaptive maintenance to either swap out parts in a planned timely manner or adapt supply voltages to maintain performance. Analytics enablement will make critical information gathered in mission mode from in-chip monitors available to the wider system and enable insights gained from the individual device and larger population to optimize and extend reliability.”

Additional 3D issues

Many of the same problems exist in 2D, 2.5D and 3D designs, except that thermal issues may become more amplified with some architectures. But there also may be a whole bunch of new issues that are not yet fully understood. “When you’re stacking devices on top of each other, you have to back-grind them to thin them,” says Horner. “The stresses on the thinner die could be a concern, and that needs to be understood and studied and addressed in terms of the analysis. In addition, various types of silicon age differently. You’re talking about a heterogeneous environment where you are potentially stacking DRAM, which tends to be more of a specific technology, or CPUs and GPUs, which may utilize different technology process nodes. You may have different types of TSVs or bumps that have been used in this particular silicon. How do they interact with each other?”

Those interfaces are a concern. “There is stress on the die, and that changes the device characteristics,” says Schaldenbrand. “But if different dies heat up to different temperatures, then the places where they interface are going to have a lot of mechanical stress. That’s a big problem, and system interconnect is going to be a big challenge going forward.”

Models and analysis

It all starts with the foundries. “The TSMCs and the Samsungs of the world have to start providing that information,” says Shuler. “As you get to 5nm and below, even 7nm, there is a lot of variability in these processes and that makes everything worse.”

“The foundries worried about this because they realized that the devices being subjected to higher electric fields were degrading much faster than before,” says Thanikasalam. “They started using the MOS reliability and analysis solution (MOSRA) that applies to the device aging part of it. Recently, we see that shift towards the end customers who are starting to use the aging models. Some customers will only do a simple run using degraded models so that the simulation accounts for the degradation of the threshold voltage.”

High-volume chips will need much more extensive analysis. “For high-volume production, multi PVT simulations are becoming a useless way of verifying this,” adds Thanikasalam. “Everybody has to run Monte Carlo at this level. Monte Carlo simulation with the variation models is the key in 5nm and below.”
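The difference between a nominal check and a Monte Carlo run can be sketched with a toy model. The example below assumes Gaussian process variation on the fresh threshold voltage and a Gaussian aging shift, then counts how many sampled devices end up beyond a limit. Every number is invented for illustration, and a real flow would use foundry variation and aging models inside a circuit simulator.

```python
import random


def fraction_out_of_spec(n_samples, vt_nominal, vt_sigma,
                         shift_mean, shift_sigma, vt_limit, seed=0):
    """Fraction of sampled devices whose aged Vt exceeds vt_limit."""
    rng = random.Random(seed)  # seeded so the run is repeatable
    failures = 0
    for _ in range(n_samples):
        fresh_vt = rng.gauss(vt_nominal, vt_sigma)
        # Aging is modeled as a non-negative upward shift in Vt.
        aged_vt = fresh_vt + max(0.0, rng.gauss(shift_mean, shift_sigma))
        if aged_vt > vt_limit:
            failures += 1
    return failures / n_samples


# Nominal device: no variation, so the single aged value is within the limit.
print(fraction_out_of_spec(1, 0.30, 0.0, 0.03, 0.0, 0.35))
# Same design with assumed variation on both the fresh Vt and the aging shift.
print(fraction_out_of_spec(20000, 0.30, 0.015, 0.03, 0.01, 0.35))
```

The nominal device passes, while the sampled population shows a tail of parts beyond the limit. That tail is what matters at high volume, and it is what a small set of corners can miss.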

More models are needed. “There are more models being created and optimized,” says Horner. “In terms of 3D stacking, we have knowledge of the concern about electromigration, IR, thermal, and power. Those are the key things that are understood and modeled. For the mechanical aspects — even the materials that we put between the layers and their effect in terms of heat, and also the stability structures — while there are models out there, they are not as enhanced because we haven’t seen enough of these yet.”

Schaldenbrand agrees. “We are constantly working on the models and updating them, adding new phenomena as people become aware of them. There have been a lot of changes required to get ready for the advanced nodes. For the nominal device we can describe aging very well, but the interaction between process variation and its effect on reliability is something that’s still a research topic. That is a very challenging subject.”

With finFETs, the entire methodology changed. “The rules have become so complicated that you need to have a tool that can actually interpret the rules, apply the rules, and tell us where there could be problems two, three years down the line,” says Thanikasalam. “FinFETs can be multi threshold devices, so when you have the entire gamut of threshold voltage being used in a single IP, we have so many problems because every single device will go in a different direction.”

Conclusion

Still, progress is being made. “Recently, we have seen many foundries, IDMs, fabless and IP companies rushing to find a solution,” says Ramadan. “They cover a wide range of applications and technology processes. Whereas a standard aging model can be helpful as a starting point for new players, further customizations are expected depending on the target application and the technology process. The Compact Modeling Coalition (CMC), under the Silicon Integration Initiative (Si2), currently is working on developing a standard aging model to help the industry. In 2018, the CMC released the first standard Open Model Interface (OMI) that enables aging simulation for different circuit simulators using the unified standard OMI interface.”

That’s an important piece, but there is still a long road ahead. “Standardization activities within the CMC have started to solve some of these issues,” says Lange. “But there is quite a lot of work ahead in terms of model complexity, characterization effort, application scenario, and tool support.”


The standard for HTOL duration is 1,000 hrs, not 100. 100 hrs duration, even at highest tolerable HTOL stress conditions, is well below a year of equivalent devices hours for the principal aging mechanisms in FinFET silicon. My biggest fear is hot spots (across-die temperature variation), which have been observed in thermal imaging to be up to +35C. FinFET silicon is known to not have wearout margin for hot spots, so I appreciate seeing the emphasis in this article on temperature as a driver for reliability.

Hi David – you are right. In the original contribution, there was some confusion between 100 and 1000 hours. It will be corrected. Thank you.

“high elevation (data servers in Mexico City) have the highest risk of reliability and aging related constraints”

I am wondering how higher elevation leads to reliability issues?