Complexity, scaling and economics are hindering test engineers from finding all of the faults.

By Jeff Dorsch and Ed Sperling

Testing chips is becoming more difficult, more time-consuming, and much more critical—particularly as these chips end up in cars, industrial automation, and a variety of edge devices. Now the question is how to provide enough test coverage to ensure that chips will work as expected without slowing down the manufacturing process or driving up costs.

Balancing these demands is a priority. These seemingly conflicting goals have pushed test, which used to be a predictable and somewhat mundane task, to the forefront of issues facing the semiconductor industry. But unlike in the past, no single piece of equipment will solve these problems. In fact, it will require changes throughout the supply chain, starting with a design-for-test strategy earlier in the design flow and more points at which test will be applied on the manufacturing and packaging side.

There are three major issues that need to be solved:

Each of these factors increases the test time, the complexity of test programs, and the overall cost of test. Industry insiders say that historically the ceiling for test is 2% to 3% of the total chip budget. But budgets have been edging higher due to all of these issues and more.

“There’s just no such thing as low-enough test cost,” said Dale Ohmart, distinguished member of the technical staff at Texas Instruments. “Our absolute toughest test challenges are the quality of the test solutions that we develop. Our biggest problem in customer satisfaction is that the way we thought we were testing chips wasn’t good enough. We missed coverage.”

What chipmakers and their customers really want is a flat test budget with zero faults, particularly in markets such as automotive. And customers have been pushing test equipment companies to fix the problems.

“The cost of test can’t go up,” said Anil Bhalla, vice president of marketing at Astronics Test Systems. “But you also have to test more complexity and more stuff. There are multiple temperature insertions in automotive. And with advanced packaging you have more clock coverage.”

New strategies emerge

Solving these issues requires significant innovation, both in equipment and in methodology.

One piece of the solution is to re-use more test data developed in the lab during manufacturing.

“Companies are starting to adopt this approach for throughput time to characterize parts and create a common architecture for production,” said John Bongaarts, senior product manager for semiconductor test software at National Instruments. “We’ve seen a decrease in correlation issues across power, voltage and temperature (PVT). What’s necessary to make this work is an understanding of deviation in the interface, test methodology and test equipment.”

At the same time, the amount of test data is increasing, which requires looking at test data in the context of other data and potentially even other components within a system.

“There is more data because the parts are more complex,” Bongaarts said. “So now you need larger-scale analytics. You need to look at all of the data, not just the individual test program. You need to understand what happens at the wafer level. That has the added benefit of helping you unlock additional yield gains because you can identify issues earlier in the process. Plus, you get a test time reduction. You know that if ‘test A’ fails, ‘test B’ fails.”
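
The "if test A fails, test B fails" observation can be illustrated with a minimal sketch. It assumes pass/fail results are available per device as a simple dictionary; the function name `implied_failures`, the device ids, and the test names are hypothetical and do not come from any vendor's analytics software.

```python
from itertools import permutations

def implied_failures(results):
    """Return pairs (a, b) where every device that failed test a also failed test b.

    `results` maps a device id to a dict of test name -> True (pass) / False (fail).
    """
    tests = sorted({name for outcome in results.values() for name in outcome})
    pairs = []
    for a, b in permutations(tests, 2):
        failed_a = [dev for dev, outcome in results.items() if outcome.get(a) is False]
        # Require at least one failure of `a`; otherwise the implication is vacuous.
        if failed_a and all(results[dev].get(b) is False for dev in failed_a):
            pairs.append((a, b))
    return pairs

# Hypothetical pass/fail log for four dies and three tests (illustrative data only).
log = {
    "die1": {"test_A": True,  "test_B": True,  "test_C": True},
    "die2": {"test_A": False, "test_B": False, "test_C": True},
    "die3": {"test_A": True,  "test_B": False, "test_C": True},
    "die4": {"test_A": False, "test_B": False, "test_C": False},
}
redundant = implied_failures(log)
```

In this toy log, a failure of `test_A` always coincides with a failure of `test_B`, so a flow that stops on first failure could skip `test_B` once `test_A` has failed. Real production data would need far more devices and a statistical threshold before any test is dropped.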

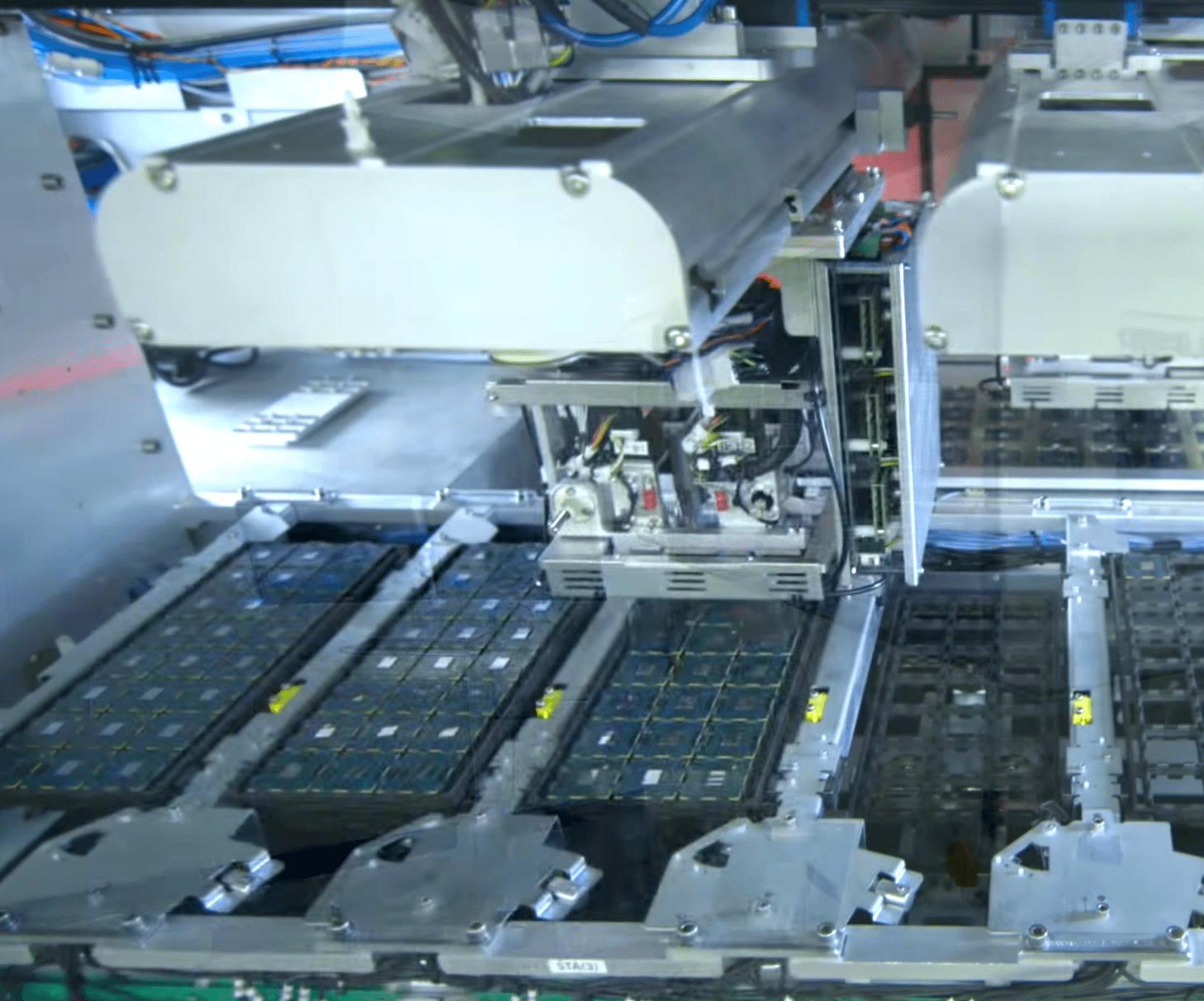

One of the most popular approaches has been to add more parallelism into testing, which means running more tests at the same time. But even that isn’t enough anymore.

“You put a device in a socket and you can do thermal, tri-temperature and functional test,” said Bhalla. “People want system-level test because of the value it adds. But you also have to look at what else needs to change. You need to do more in wafer probe, and you need to do system-level test across the probe. You also can move other tests and scan into system-level test, which basically means you’re doing massively parallel test.”

Parallelism adds more complexity into this process, and massively parallel test takes that up a few notches.

Fig. 1: Massively parallel system-level test. Source: Astronics

Another piece of the puzzle involves design for test. As with verification, the goal across the design flow is to shift key pieces further left to avoid problems later in the design.

“The goal here is to develop a test strategy early so you can optimize coverage, decrease cost and increase throughput,” said Shu Li, business development manager at Advantest. “With DFT you want to try a lot of things in the simulation stage. Not all of those will go to production. So for a typical digital chip, you want to focus on pattern bring-up with patterns for functional tasks. And for RF and mixed signal, you are probably using digital resources to control the device. But a lot of RF and analog testing is still done using instruments like an oscilloscope or a spectral analyzer.”

That makes it harder to move data from the lab to the fab. “You’re increasing the overall test complexity and adding system-level test, which brings stricter requirements for testers and test calibration,” Li said. “The lab and the fab are very different worlds. On the digital side, the challenge is how to motivate designers and DFT engineers to think ahead to testing. On the bench side where there is a lot of test, you need to run more tests. How to correlate all of that to test and production is very challenging.”

What goes awry

Results are frequently uneven because skills, knowledge and experience vary widely among test engineers.

“This requires a lot more than just technology,” said NI’s Bongaarts. “You need to bring characterization and production groups closer together and condense their workflow onto a common platform. This isn’t always so simple, particularly with market consolidation. Even if you achieve all of this inside a company, then the company goes out and acquires another company and you have to start the process over again.”

The growing importance of analog in sensors at the edge complicates the test process, too.

“In the digital world, we know how to do stuck-at fault, but as the technologies have gotten faster and the transistors have gotten smaller, stuck-at faults are not the whole picture anymore,” said TI’s Ohmart. “There are all kinds of transitional faults that happen that are just as upsetting. And we don’t have nearly as good models in the digital world to deal with all that transitional stuff. In the analog world, we don’t have a scheme for understanding how well we are covering all the possible faults those analog circuits can give us. We depend on the knowledge and the skill of individual test development engineers to come up with the schemes to get good test coverage.”
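
For readers unfamiliar with the stuck-at model Ohmart refers to, the following is a minimal sketch of how stuck-at coverage is typically computed: each net is forced to 0 or 1 in turn, and a fault counts as detected if some test pattern produces a different output from the fault-free circuit. The three-input circuit and the net names are made up for illustration, and the sketch says nothing about transition faults or analog faults, which are exactly the gaps the quote describes.

```python
from itertools import product

# Nets of a toy circuit y = (a AND b) OR c; n1 is the AND gate output.
NETS = ["a", "b", "c", "n1", "y"]

def simulate(a, b, c, fault=None):
    """Evaluate the circuit, optionally forcing one net to a stuck value."""
    def net(name, value):
        # A fault is a (net, stuck_value) pair that overrides the fault-free value.
        return fault[1] if fault and fault[0] == name else value
    a, b, c = net("a", a), net("b", b), net("c", c)
    n1 = net("n1", a & b)
    return net("y", n1 | c)

def stuck_at_coverage(patterns):
    """Fraction of single stuck-at faults detected by at least one pattern."""
    faults = list(product(NETS, (0, 1)))
    detected = {
        f for f in faults for p in patterns
        if simulate(*p) != simulate(*p, fault=f)
    }
    return len(detected) / len(faults)

two_patterns = [(1, 1, 0), (0, 1, 0)]
four_patterns = two_patterns + [(1, 0, 0), (0, 0, 1)]
```

With only the two patterns, `b` stuck-at-1 and `c` stuck-at-0 go undetected; the four-pattern set detects all ten faults in this toy circuit. A circuit can reach full stuck-at coverage and still ship with timing-related defects, which is why that number alone is no longer treated as sufficient.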

As with most test, the main issue is what’s good enough for a particular application. But as complexity and density levels increase, there are new questions about what was missed and what it costs to make sure that doesn’t happen.

“Stuck-at coverage is just not good enough,” said Matthew Knowles, silicon learning product marketing manager at Mentor, a Siemens Business. “You actually can test within the cell and have various different types of fault models that are based on analog simulations of the actual device itself. On paper that looks great. You get excellent coverage. But the drawback is that it does increase test cost. There’s that balance—how much coverage people want and how much they’re willing to pay for.”
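
One way to put rough numbers on the coverage-versus-cost balance is the Williams-Brown defect-level model, which estimates the fraction of shipped parts that are defective as 1 - Y^(1 - T), where Y is process yield and T is fault coverage. The model is not mentioned by anyone quoted here; it is brought in as an assumption, and the yield and coverage values below are illustrative only.

```python
def defect_level_dppm(yield_fraction, fault_coverage):
    """Williams-Brown estimate of shipped defective parts per million (DPPM).

    yield_fraction: fraction of manufactured parts that are defect-free (0..1].
    fault_coverage: fraction of modeled faults the test set detects (0..1).
    """
    return (1.0 - yield_fraction ** (1.0 - fault_coverage)) * 1e6

# Illustrative values: 90% yield at three coverage levels.
for coverage in (0.95, 0.99, 0.999):
    print(f"coverage {coverage:.1%}: ~{defect_level_dppm(0.90, coverage):.0f} DPPM")
```

Under this simplified model, each additional increment of coverage removes fewer escapes than the last while typically costing more test time, which is the tradeoff Knowles describes. The model also assumes the fault list captures every real defect, which the quotes above suggest is optimistic.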

Coming at nearly the end of the manufacturing process, the test industry struggles with what’s absolutely essential to catch and what isn’t.

“It’s a very tall order to pick up all the quality issues—some that are detected by the existing fault models, some that are only found in the system environment—and do it after design is late in delivering everything,” said Dave Armstrong, business development director at Advantest America. “The challenge is to pick up all these pieces at test, which is massive, no question.”

More complexity ahead

In effect, this is adding complexity to complexity.

“There are more complex testers, more complex handling schemes, more complex load boards, more complex software—everything’s more complex,” Ohmart said. Cost is a problem, but it’s not the No. 1 problem. “Our third biggest test problem is cost of test. Right now, I’d say our problems are more related to quality and maintainability than they are to cost.”

Engineers need more time to deal with this complexity, too.

“As things become more complex, and the testing becomes incredibly complicated because of the state/space explosion, even in analog circuitry, you need to do something that gives the test people enough time to get the job done,” said George Zafiropoulos, vice president of solutions marketing at National Instruments. “Inherent inefficiencies are in the current system. There’s discontinuities in the flow because of different data formats, because of the lack of common languages, because of the fact that there are different people in different geographical locations that may or may not spend any time with each other. Culturally, there’s an issue.”

But these issues need to be solved in order to take advantage of new markets, such as 5G and AI.

“We all see a hockey stick coming of higher frequencies, higher pin counts, higher probe densities, higher everything,” said Armstrong. “That’s going to blow your cost equation. The biggest challenge is actually, ‘Yeah, we need to understand what we’re doing in a reasonable amount of time, but we also have to have the tools to get the gigahertz per millimeter connected to these devices in a cost-effective fashion.’ To me, the single biggest challenge we’ve got is signal density.”

Advanced packaging, advanced problems

Time is a critical factor, in part because of cost, but also because of the time it takes for chips to get through manufacturing. A slowdown in any part of the process can cause delays across the entire supply chain.

“Time-to-market is a very big challenge,” said Amy Leong, FormFactor’s chief marketing officer. “What’s really presenting the new challenges is advanced packaging.”

FormFactor is hardly alone in this view. “We see a lot of customers coming to us, wanting to integrate a lot of different things together in one package,” said Rich Rice, senior vice president of business development at ASE. “That complexity ripples all the way through the entire process, from design, all the way through wafer test, assembly, and final test.”

For probe card companies, the shrinking probe pitch is taking the place of the shrinking transistors. Specifically, the probe pitch in advanced packaging technologies—such as fan-out wafer-level packaging and very-fine-pitch copper pillars—is shrinking faster than it has in the past.

“Over a decade ago, the probe pitch never really changed,” said Leong. “It stayed between 200 microns to 150 microns for a very, very long time. But now, every year, we’re seeing 10 to 20 microns shrink. That almost feels like a front-end geometric shrink for the transistors is repeating itself in the probe. So now, nearly all our probe cards are fabricated using lithography, because that’s the only way for us to keep up with the pace of probe shrink.”

Fig. 2: Identifying probe issues is becoming critical. Contact resistance plot shows bowing in center of probe. Source: Advantest

With advanced packaging, a key concern is known good die. A single defect in a chip can cause multiple chips in a package to fail, magnifying the cost impact of failing to detect faults. Depending on the application, the ripple effect can be minimal or it can be severe.

“With low-cost components, even if it’s a multi-chip, it’s probably not too big of a deal, because you can do really good wafer-sort coverage on some bare die, and you have some pretty well-known, probably good die that you can put in the packages,” said Rice. “It typically may be a consumer application, so you don’t sweat it if it’s not a bullet-proof known good die. But when you get into some of the higher-cost applications, like maybe some of these high-end graphics accelerators with high-bandwidth memory around them, one package will have anywhere from $200 to $400 of IC content. With that, you have to be really careful. If you also add all the passive components into it, you could have a few hundred components in one package.”

Conclusion

Test is no longer just a simple step at the end of manufacturing. It has become an integral part of an increasingly complex process, with its own complex set of tradeoffs.

“The semiconductor industry in general has been on a 30- to 40-year journey of quality improvement,” said TI’s Ohmart. “The problem is that the expectation of what you need for quality improvement has accelerated even faster than we managed to improve quality. When I started in 1980, the quality of what we shipped out the door then was awful – thousands of parts per million. And that was acceptable. Now there’s this expectation of essentially zero. There will be zero defects coming out of the semiconductor manufacturers. Everybody has forgotten that almost all quality processes that have been put in place in the world are risk-management processes. They’re statistical, and most of them define a level of acceptable quality events or escapes. Zero is not necessarily an attainable goal. You have to design in quality to zero. What that means is we have to push the quality implications back up into the design. We’ve got to be able to very quickly and more easily know, and be able to sort out, the correctly built devices from the incorrectly built devices. That’s really the issue here. You have to more quickly and easily sort those out than we can today. And that means coming up with design-for-test techniques that help us do that.”

This puts a huge burden on all parts of the supply chain, particularly for safety-critical applications such as automotive electronics, medical devices, and military/aerospace systems. The current standard is zero defects, and test technology and methodologies will have to be adjusted in order to reach that goal.

